David Ridlen

Member

Recently I keep being shown 'earth curvature experiment' videos by flat/concave-earth advocates. They seem to be the new favorite "evidence" that the Earth is not spherical. Debunking them gets into the math of refraction, which I am bad at, so I would like to be sure I have considered all the factors. I keep looking for a simple rule of thumb, such as 'for each mile, refraction bends the line of sight down by X amount'.
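There is no single "per mile" figure, because the curvature drop grows with the square of the distance. Below is a minimal sketch. It assumes a spherical Earth of mean radius 6,371 km and the common simplification that refraction is captured by one coefficient `k`, the fraction of the curvature drop that refraction cancels (`k = 0` means no refraction). The function name and the sample value `k = 0.14` are illustrative assumptions, not taken from any source above. The code measures how far the sea surface falls below a level line drawn from a point at sea level:

```python
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, treated as a perfect sphere
METERS_PER_MILE = 1609.344
METERS_PER_INCH = 0.0254


def drop_inches(miles: float, k: float = 0.0) -> float:
    """Drop of the sea surface below a level line from a sea-level point.

    Uses the small-angle approximation d**2 / (2R). Refraction is modelled by
    scaling the drop by (1 - k), where k is an assumed refraction coefficient.
    """
    d = miles * METERS_PER_MILE
    drop_m = (1.0 - k) * d * d / (2.0 * EARTH_RADIUS_M)
    return drop_m / METERS_PER_INCH


if __name__ == "__main__":
    for miles in (1, 5, 10, 20):
        geometric_ft = drop_inches(miles) / 12
        # k = 0.14 is an assumed "typical" value, used only for illustration.
        refracted_ft = drop_inches(miles, k=0.14) / 12
        print(f"{miles:>2} mi: {geometric_ft:7.1f} ft geometric, "
              f"{refracted_ft:7.1f} ft with k=0.14")
```

The 1-mile value comes out at about 8 inches, which is where the familiar "8 inches times miles squared" rule of thumb comes from. In this model, refraction with a given `k` simply scales every distance's drop by `(1 - k)`.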

For example, this '20-mile laser' experiment comes up:

Source: https://www.youtube.com/watch?v=b8bpPTsPgsU

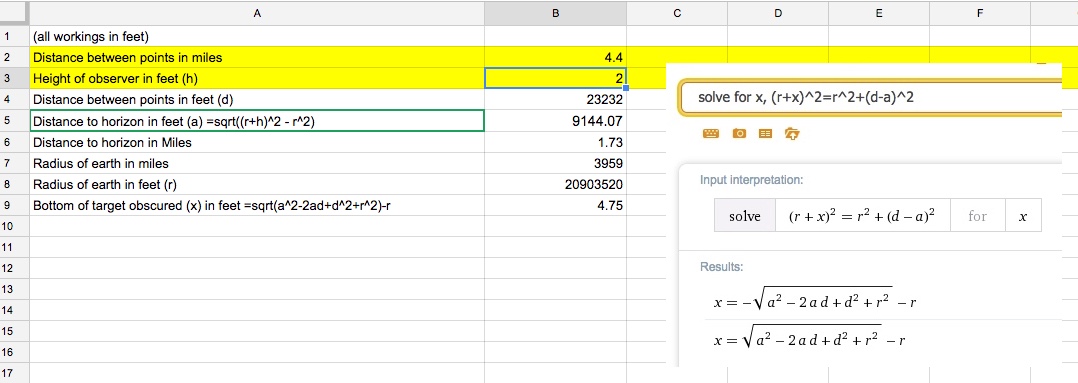

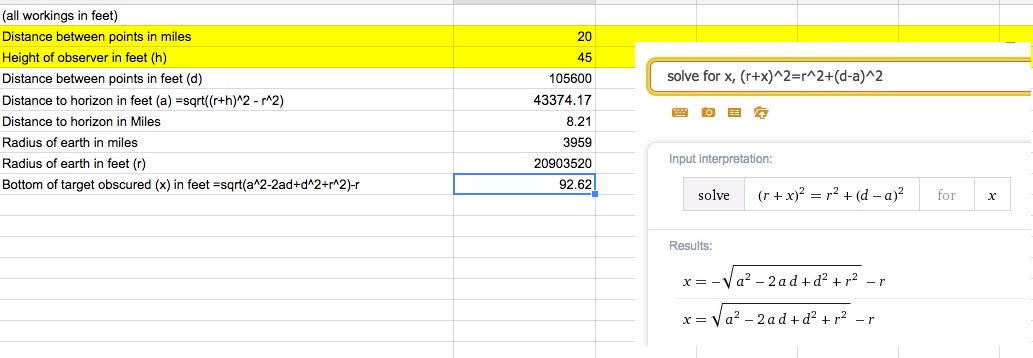

It claims to defy the limitations of Earth's curvature. The experiment is sloppy, but let's assume they are not cheating. The laser's horizontal line of sight is 65 feet above sea level. Does it make sense to see the laser from 20 miles away over water, when the geometric (straight-line, no-refraction) line of sight from 65 feet should stop the beam at about 10 miles over the Earth's curved surface? Does atmospheric refraction normally account for the laser's range doubling? And how can I explain why it makes sense, in the simplest terms?
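One way to approach the "doubling" question is to turn the horizon formula around and ask how strong refraction would have to be. Below is a rough sketch. It assumes a spherical Earth and the single-coefficient refraction model, in which light travels as if the Earth's radius were R / (1 - k). It also uses the 65 ft laser height from the post. The post does not give the receiver's height, so `receiver_ft` is a hypothetical parameter:

```python
import math

EARTH_RADIUS_M = 6_371_000.0
METERS_PER_MILE = 1609.344
METERS_PER_FOOT = 0.3048


def required_k(distance_miles: float, laser_ft: float, receiver_ft: float = 0.0) -> float:
    """Refraction coefficient k needed for a grazing ray to join the two heights.

    With an effective radius Re = R / (1 - k), the longest grazing path is
    sqrt(2*Re*h1) + sqrt(2*Re*h2). Solving for Re and then for k gives the
    value returned here. k >= 1 would mean the beam is trapped (ducting).
    """
    d = distance_miles * METERS_PER_MILE
    root_sum = (math.sqrt(2 * laser_ft * METERS_PER_FOOT)
                + math.sqrt(2 * receiver_ft * METERS_PER_FOOT))
    effective_radius = (d / root_sum) ** 2
    return 1.0 - EARTH_RADIUS_M / effective_radius


if __name__ == "__main__":
    # Geometric (k = 0) horizon from the 65 ft laser, for comparison.
    horizon_mi = math.sqrt(2 * EARTH_RADIUS_M * 65 * METERS_PER_FOOT) / METERS_PER_MILE
    print(f"geometric horizon from 65 ft: {horizon_mi:.1f} mi")
    for receiver_ft in (0.0, 6.0, 20.0):  # hypothetical receiver heights
        print(f"receiver at {receiver_ft:>4} ft: k needed = "
              f"{required_k(20, 65, receiver_ft):.2f}")
```

For comparison, the values usually quoted for ordinary conditions are roughly 0.13 to 0.17. A required `k` well above that points to unusually strong bending near the water (super-refraction), and `k >= 1` would mean the beam is ducted along the surface.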

I am also wondering whether the laser could be "skipping" over the surface, like a rock skipping over water, but in a few long arcs. The beam might graze the water near the peak of the bulge at around 10 miles and be reflected back upward. Refraction would then carry it in an arc over the 'hump' for another 10 miles or more. That way, the beam would cover more distance than it could over level, non-reflective ground.

Sites explaining how to calculate refraction are too mathy for my (figuratively speaking) right brain. I also seem to be reading conflicting rules and arriving at different end results. I understand there are several variables that affect refraction, such as altitude, temperature, pressure, and moisture. I also understand that the closer the beam is to the water and the longer the distance, the more it will refract (or 'super-refract'). But I learned from a "Curvature and Refraction" video about geodetic surveys that surveyors use a standard 7% rule.

But subtracting 7% does not seem to account for the laser traveling twice the distance over the curved Earth, as in the 20-mile laser example.

The Wikipedia entry on the horizon ("Effect of atmospheric refraction" section, http://en.wikipedia.org/wiki/Horizon#Effect_of_atmospheric_refraction) mentions using a 4/3 ratio and a horizon 15% beyond the geometrical horizon. It also says that 'standard' atmospheric refraction is 8%, although that is not the 'super' refraction I assume would apply to the laser example. These values do not match the 7% rule, and I don't understand how they are all applied to get a definitive refraction value.
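The 7%, 8%, 15%, and 4/3 figures may be less contradictory than they look, because sources quote different quantities derived from the same coefficient. Below is a sketch, assuming the single-coefficient model with effective radius R / (1 - k). The post does not say which quantity the survey video's 7% refers to, so the `k` values in the loop are only examples:

```python
import math


def refraction_summary(k: float) -> dict:
    """Express one refraction coefficient k in the forms different sources quote.

    Assumes the simple model in which refraction makes light behave as if the
    Earth's radius were R / (1 - k).
    """
    return {
        "k": k,
        # Fraction of the geometric curvature drop that refraction cancels.
        "drop_reduction_pct": 100.0 * k,
        # Effective Earth radius as a multiple of the true radius (4/3 when k = 0.25).
        "effective_radius_factor": 1.0 / (1.0 - k),
        # Horizon distance scales with sqrt(radius), so this gain is much smaller.
        "horizon_gain_pct": 100.0 * (1.0 / math.sqrt(1.0 - k) - 1.0),
    }


if __name__ == "__main__":
    for k in (0.07, 0.13, 0.14, 0.25):
        s = refraction_summary(k)
        print(
            f"k={s['k']:.2f}: drop reduced {s['drop_reduction_pct']:4.1f}%, "
            f"radius x{s['effective_radius_factor']:.3f}, "
            f"horizon +{s['horizon_gain_pct']:4.1f}%"
        )
```

In this model, a given `k` cuts the curvature drop by `k` itself but lengthens the horizon distance by only about half as much in percentage terms. `k = 0.25` reproduces the 4/3 radius and a horizon gain of roughly 15%. A `k` of about 0.13 to 0.14 gives horizon gains of about 7 to 8%.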

I found a simple 'Distance to horizon calculator' at http://www.ringbell.co.uk/info/hdist.htm, but it does not account for refraction.
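A refraction-aware version of such a calculator is short. Below is a sketch, assuming a spherical Earth and the single-coefficient model with effective radius R / (1 - k). The function names are hypothetical. When both ends are above the water, the two horizon distances add:

```python
import math

EARTH_RADIUS_M = 6_371_000.0
METERS_PER_MILE = 1609.344
METERS_PER_FOOT = 0.3048


def horizon_miles(height_ft: float, k: float = 0.0) -> float:
    """Distance to the sea horizon from a given eye (or laser) height.

    k = 0 is the purely geometric case that most simple calculators use;
    k > 0 applies refraction through an effective Earth radius R / (1 - k).
    """
    effective_radius = EARTH_RADIUS_M / (1.0 - k)
    return math.sqrt(2.0 * effective_radius * height_ft * METERS_PER_FOOT) / METERS_PER_MILE


def max_range_miles(h1_ft: float, h2_ft: float, k: float = 0.0) -> float:
    """Longest grazing path between two heights: both horizon distances added."""
    return horizon_miles(h1_ft, k) + horizon_miles(h2_ft, k)


if __name__ == "__main__":
    for k in (0.0, 0.13, 0.25):
        print(f"k={k:.2f}: horizon from 65 ft = {horizon_miles(65, k):.1f} mi")
```

Even with `k = 0.25`, the horizon from 65 ft stays well short of 20 miles. A 20-mile sighting therefore also depends on how high the receiving end is, or on refraction stronger than these values.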

Another site, "Calculating Altitudes of Distant Objects" (http://www-rohan.sdsu.edu/~aty/explain/atmos_refr/altitudes.html), has a calculator for the refraction 'lapse rate', but no matter what values I enter, the lapse rate always comes out as "0". It doesn't seem to work.
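Independently of that calculator, the link between the temperature gradient (lapse rate) and refraction can be sketched with a commonly used empirical approximation for the refraction coefficient, k ≈ 503 P/T² (0.0343 + dT/dh). Below is a sketch under that assumption. The function names are hypothetical, and the standard-atmosphere inputs are only defaults:

```python
def refraction_coefficient(pressure_hpa: float, temp_k: float, lapse_k_per_m: float) -> float:
    """Approximate terrestrial refraction coefficient k near the ground.

    Empirical approximation k = 503 * P / T**2 * (0.0343 + dT/dh), with P in
    hPa (mbar), T in kelvin and dT/dh in K/m (negative when air cools with height).
    """
    return 503.0 * pressure_hpa / temp_k**2 * (0.0343 + lapse_k_per_m)


def gradient_for_k(k: float, pressure_hpa: float = 1013.25, temp_k: float = 288.15) -> float:
    """Temperature gradient dT/dh (K/m) that would produce a given k."""
    return k * temp_k**2 / (503.0 * pressure_hpa) - 0.0343


if __name__ == "__main__":
    # Standard-atmosphere lapse rate: air cools by about 6.5 K per km.
    print(f"standard lapse rate: k = {refraction_coefficient(1013.25, 288.15, -0.0065):.2f}")
    # Gradients for larger k (positive = warmer air higher up, i.e. an inversion).
    for k in (0.25, 0.76, 1.0):
        print(f"k = {k:.2f} needs dT/dh = {gradient_for_k(k) * 100:+.1f} K per 100 m")
```

Under a normal lapse rate, this gives a `k` of roughly 0.17. Pushing `k` toward 1 requires air near the surface that gets warmer with height (a temperature inversion, such as warm air lying over cooler water). That is the usual explanation for super-refraction.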

Any clarification is much appreciated.
