OneWhiteEye

Senior Member

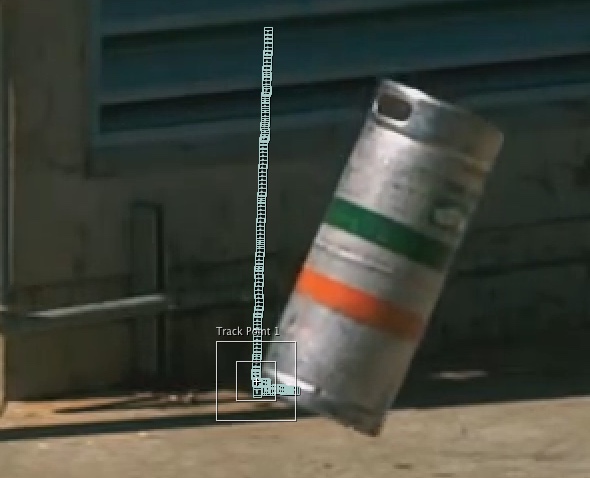

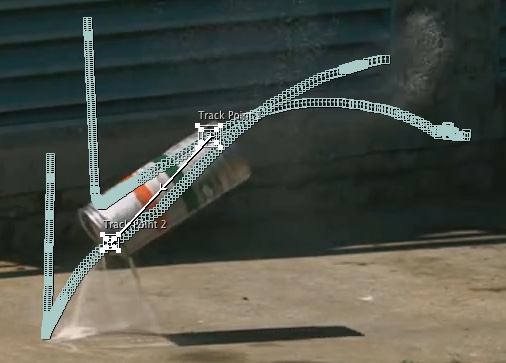

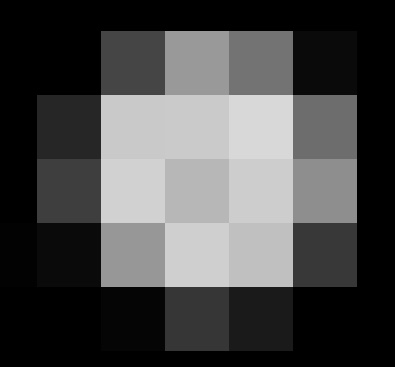

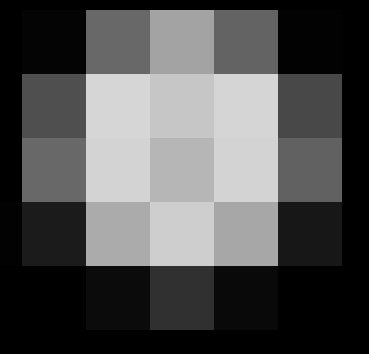

> Mick, OWE, planning to experiment with video-recorded real falls?

First, I have to get out of calibration wonderland; I fell down this rabbit hole yesterday. Do you realize how hard it is to make a perfect circle? I didn't. This is as close as I can get:

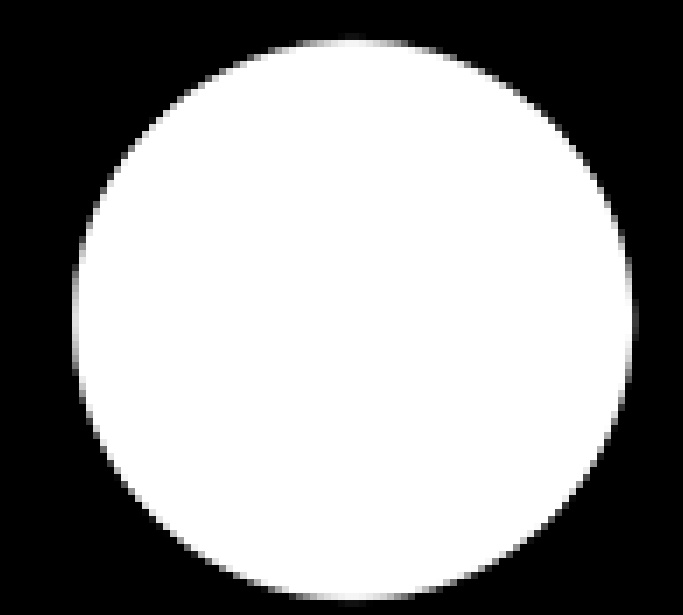

But if you do anything to it, and I mean anything at all (including just resizing/resampling), it's no longer perfect. Inkscape made this, and I've yet to find anything that can honestly resize it. GIMP does the best, but it's not quite as good as direct subpixel output from Inkscape (which itself is not perfect). Move the 1000px-radius circle one pixel to the side and reduce it by 10x, expecting a good, symmetrical anti-aliased edge, and the answer is NO.
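One way to build a reference for the shift-and-reduce-by-10x test, sketched under my own assumptions (this is not how Inkscape or GIMP work internally): treat each pixel's value as the fraction of an ss×ss grid of sample points that fall inside the circle. Averaging each 10×10 block (a plain box filter) of a full-size render with one sample per pixel then reproduces, exactly, a direct render at 1/10 scale with a 10×10 sample grid, even after a one-pixel shift. The names `render_disc`, `box_downsample` and `is_mirror_symmetric` are hypothetical, and the sizes are scaled down (radius 50 instead of 1000) so pure Python finishes quickly. Resizers that use wider kernels such as bicubic or Lanczos also weight pixels outside each block, so their output generally won't match this reference.

```python
from fractions import Fraction


def render_disc(size, cx, cy, r, ss):
    """Render a disc of radius r centred at (cx, cy) into a size x size image.

    Pixel (x, y) covers the square [x, x+1) x [y, y+1). Its value is the
    fraction of an ss x ss grid of sample points (at sub-pixel centres)
    that fall inside the disc. Fractions keep every comparison exact.
    """
    r2 = Fraction(r) ** 2
    offsets = [Fraction(2 * i + 1, 2 * ss) for i in range(ss)]
    img = []
    for y in range(size):
        row = []
        for x in range(size):
            inside = sum(
                1
                for oy in offsets
                for ox in offsets
                if (x + ox - cx) ** 2 + (y + oy - cy) ** 2 <= r2
            )
            row.append(Fraction(inside, ss * ss))
        img.append(row)
    return img


def box_downsample(img, f):
    """Shrink by an integer factor f by averaging each f x f block (box filter)."""
    n = len(img) // f
    return [
        [
            sum(img[y * f + j][x * f + i] for j in range(f) for i in range(f)) / (f * f)
            for x in range(n)
        ]
        for y in range(n)
    ]


def is_mirror_symmetric(img):
    """True if the image equals its own left-right mirror image."""
    return all(row == row[::-1] for row in img)


if __name__ == "__main__":
    # Full-size disc shifted one pixel to the right, one sample per pixel.
    big = render_disc(120, 61, 60, 50, ss=1)
    # The same disc rendered directly at 1/10 scale (centre 6.1, radius 5).
    small = render_disc(12, Fraction(61, 10), 6, 5, ss=10)
    print(box_downsample(big, 10) == small)  # True
```

The equality holds because the 10x10 samples in each small pixel land at exactly the scaled positions of the big pixels' single samples, so the box average counts the same points. Running the same check at the full 1000px radius works but is slow in pure Python.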