OneWhiteEye

Senior Member

Awesome! Thank you. I'll be coming back with the same thing in a bit, after I get my frame iterator done.

Or, whatever you're using to draw it doesn't draw it very accurately... So I guess After Effects cannot really track that case very well.

Mick, AE did okay in my book. You can't get something for nothing, even with sophisticated algorithms.

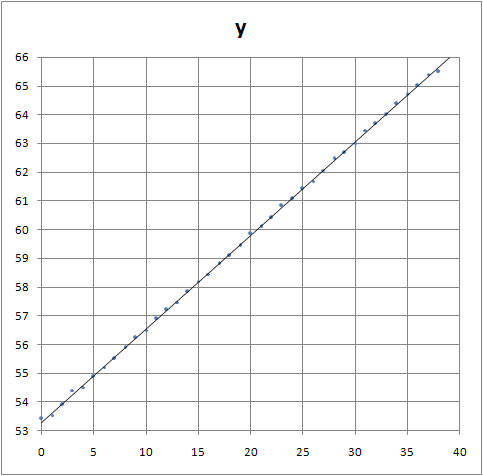

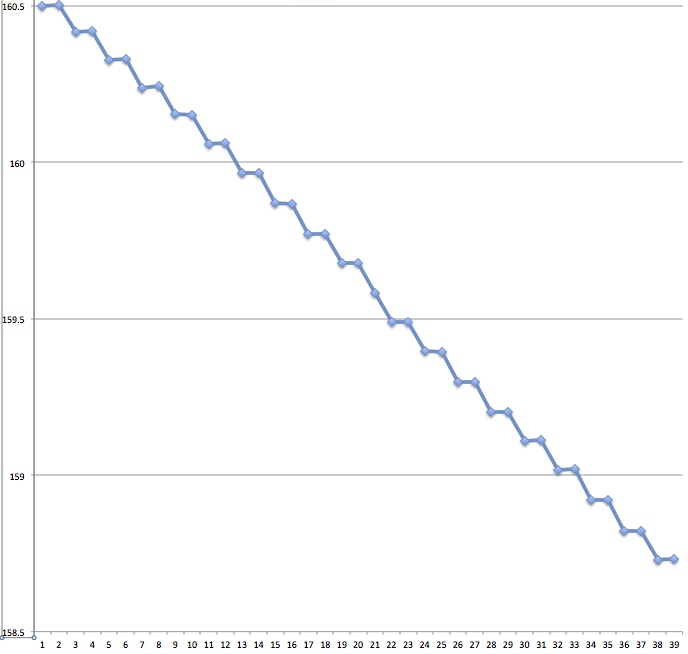

Chances are I can do better than this. This is the simplest selection filter and weighting there is.

Perhaps you can, too, by more careful placement of the tracking hints?

Not without removing the transparent band. Something I had not really intended when I contrived the example.

Really? I knew right away it would affect my results significantly, even in the case of selecting only one color with even weights. I was initially surprised the first few frames would be that bad. Now I know the first three frames form a much straighter line; they were thrown off the average by the bars, and that made them look as bad as they did.

There's too much going on there, effectively changing the shape of the object.

Yes, but isn't that the case most of the time in the real world? This was one of your objections to my method, hence the contrived example which you didn't believe possible for me to track effectively.

You get around that by selecting the middle color only.

True. Mainly because I have some parameters to adjust in my algorithm. AE also does -- two boxes, is that all? Moreover, the act of selecting a single color and weighting evenly is the second simplest strategy, next to averaging the entire image.
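For readers following along, the "select a single color and weight evenly" strategy can be sketched roughly as below. This is a minimal illustration and not the actual code used in this thread: the function name `color_centroid`, the `tol` parameter, and the tiny test frame are all made up for the example.

```python
def color_centroid(image, target, tol=0):
    """Return the (x, y) centroid of all pixels within `tol` of `target`.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    Every selected pixel gets the same weight. Returns None if nothing matches.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            # Selection filter: keep the pixel only if every channel
            # is within `tol` of the target color.
            if all(abs(p - t) <= tol for p, t in zip(pixel, target)):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# Hypothetical 5x5 test frame: black background with a 2x2 red block.
BLACK, RED = (0, 0, 0), (255, 0, 0)
frame = [[BLACK] * 5 for _ in range(5)]
for y in (2, 3):
    for x in (1, 2):
        frame[y][x] = RED

print(color_centroid(frame, RED))  # (1.5, 2.5)
```

Because the result is an average over many pixel coordinates, it can land between pixel centers, which is where the subpixel resolution comes from. Anything that shares the selected color, or hides part of the object, shifts that average.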

I knew it would affect your results; that's what I set it up to illustrate.

And it does. But you assumed AE would do better?

Yes. But it didn't. I thought it would take color into account. Apparently not.

I did think it could do better, didn't know if it would.

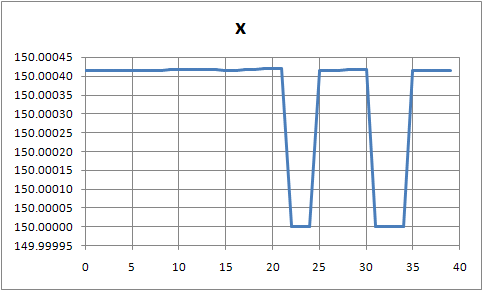

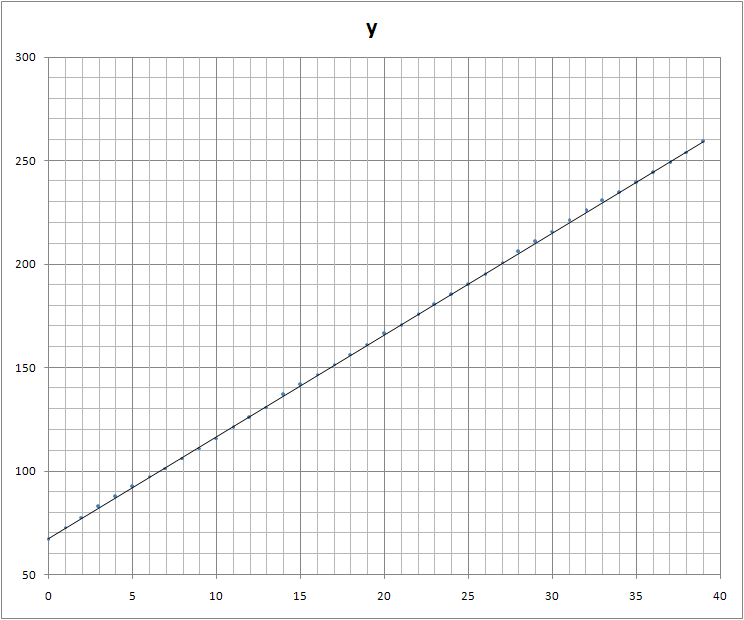

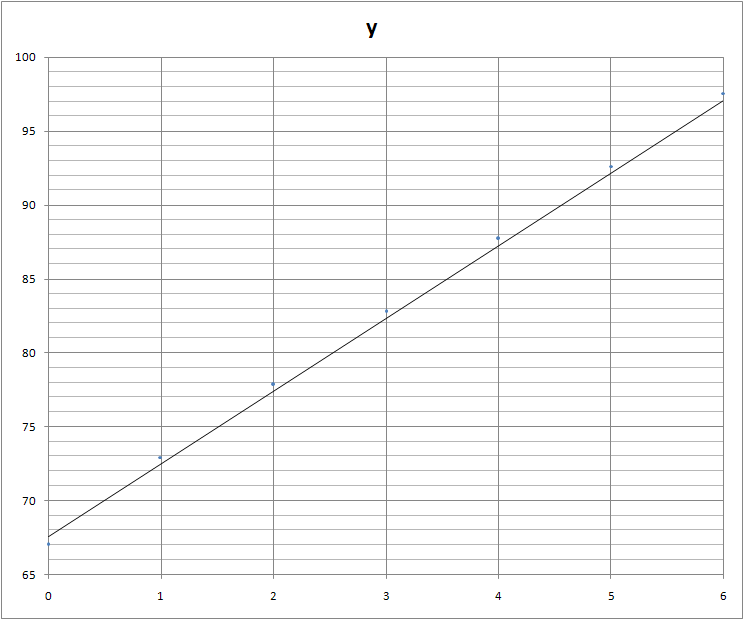

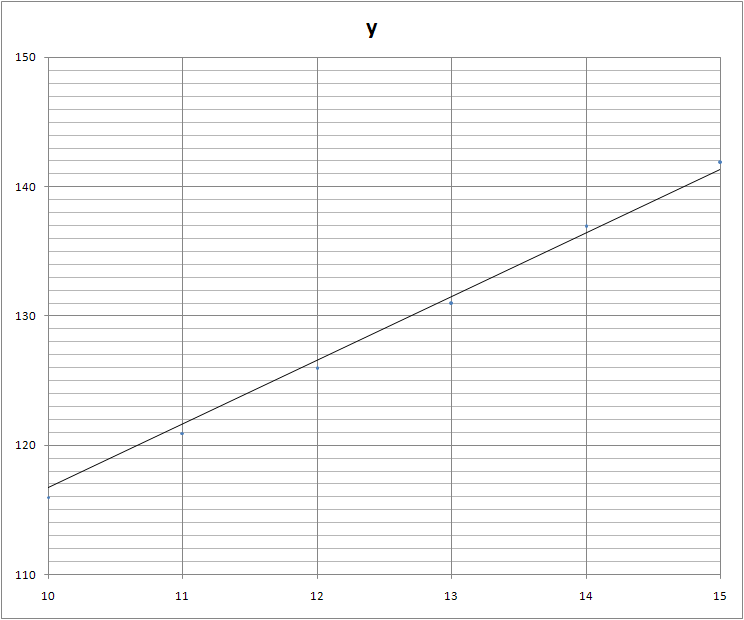

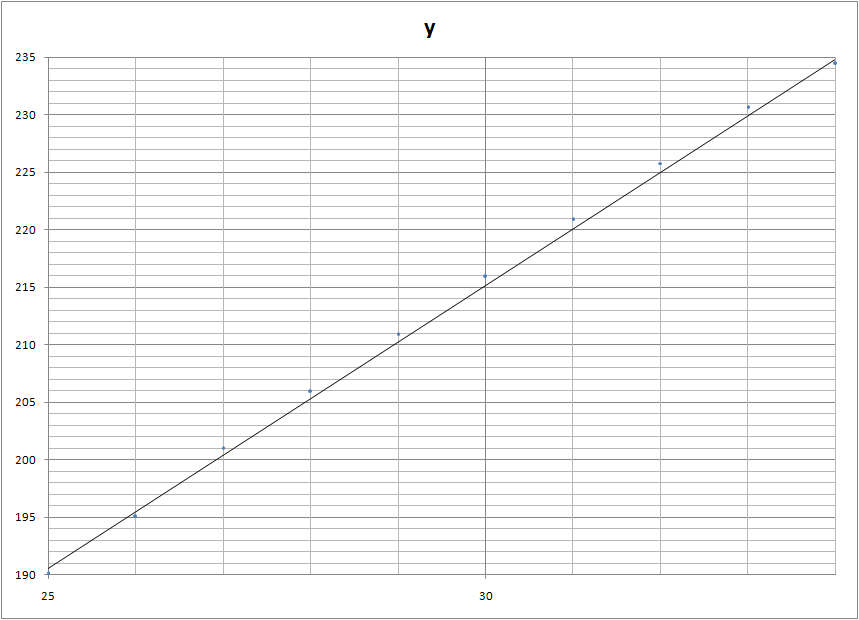

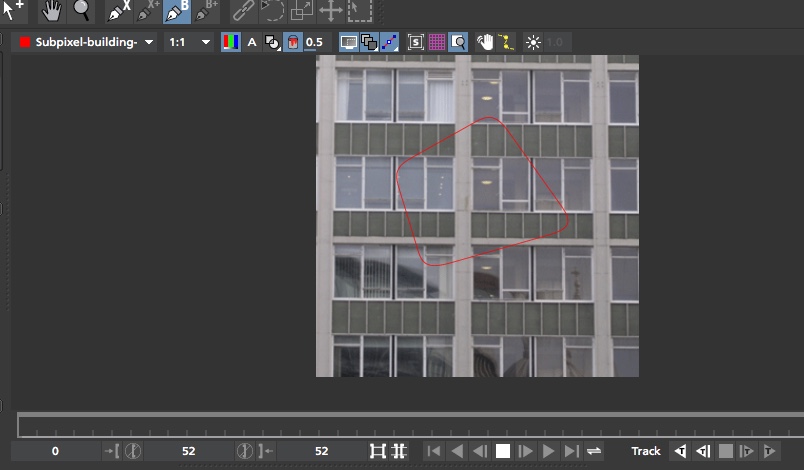

Here's a subpixel moving ball over a bar. AE fails on this.

Should be exactly 45 degree motion, steps only accurate to 1/10th of a pixel.
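A test like this is only useful if the tracker output is scored against the known motion. Here is a rough sketch of that comparison, assuming the ball moves the same amount in x and y each frame and that the true positions are rounded to 0.1 pixel. The per-frame `step` value and all function names are hypothetical, not taken from the test clip.

```python
import math


def ground_truth(n_frames, step=0.1):
    """Known (x, y) positions for diagonal motion, rounded to 0.1 pixel."""
    return [(round(i * step, 1), round(i * step, 1)) for i in range(n_frames)]


def rms_error(tracked, truth):
    """Root-mean-square distance between tracked and true positions."""
    squared = [(tx - gx) ** 2 + (ty - gy) ** 2
               for (tx, ty), (gx, gy) in zip(tracked, truth)]
    return math.sqrt(sum(squared) / len(squared))


def path_angle_degrees(points):
    """Direction of travel from the first point to the last, in degrees."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    return math.degrees(math.atan2(y1 - y0, x1 - x0))


truth = ground_truth(5)
print(truth[3])                   # (0.3, 0.3)
print(path_angle_degrees(truth))  # 45 degrees, up to floating-point rounding
```

Feeding each tracker's output into `rms_error` against the same `truth` list gives one number per tool, which makes the "AE fails on this" kind of claim easy to check.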

Just looked into the Mocha platform and it seems like its planar tracking algorithm could potentially help remove from measurements of vertical displacement the errors introduced by lateral movements and distortions. It would be very interesting to see that applied to some definable portion of the face of WTC 7. Mick, I'm assuming your version doesn't have that capability?

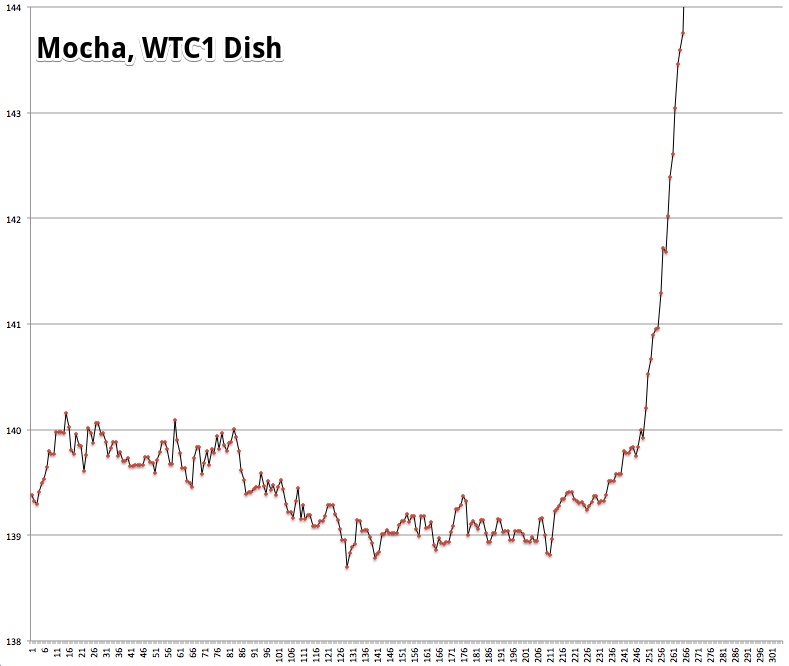

External Quote: mocha AE CC is licensed by Adobe and ships free inside After Effects Creative Cloud. This free version is launched from within AE and features mocha's planar tracking and masking functions, limited to support for After Effects Creative Cloud.

Although I barely understand what I'm doing with it at this point. Here's a quick attempt at tracking the WTC1 antenna dishes.

I'm inclined to go back to playing guitar now. Cool with that?

I'm still a little interested in if your method is more accurate in some cases...

We can hit a little from time to time, it is interesting. Just need to attend to other things for a while.

Most likely... but I suspect that, with tweaking, AE or Mocha could duplicate the accuracy in those cases with a bit of filtering.

I think all this effort would probably have been far more usefully applied to accurately determining the feet/pixel in the videos.

Not a shred of doubt. But it was fun, wasn't it?

The cameras were quite far away... there was hot air from fires... wouldn't this introduce a distortion of even static images... like a mirage, for example?

It does. You can see some distortion of the antenna from time to time. It will show up in the data if it happens. The positive is that it's noise; the magnitude is limited and the deviations are about the mean. Unfortunately, it's low frequency, too, and is right in the band of interest.

I think you see that my methods can be both accurate and useful in the real world. Just not practical when there are tools like these around. Which makes them the natural choice for any further investigation into over-g.

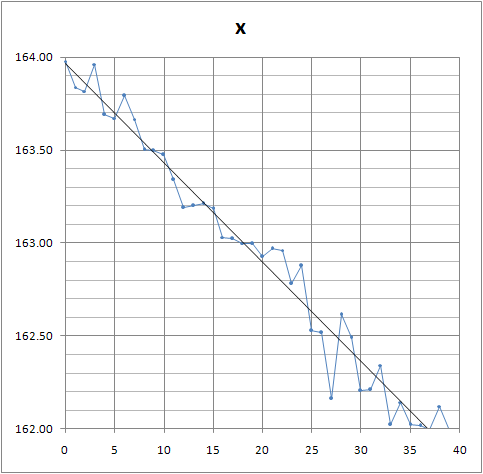

How can we argue, with straight faces, the "accuracy" of subpixel tracking in this scenario, when the data obtained by this method is so ridiculously noisy?

All I can say is noise in and of itself is not a deal-killer, generally. It might be in this case, don't know; it certainly is on the margin. This is one of the reasons I can't throw a lot of confidence into the result.

Surely the fact that the data is so unrepresentative of reality, having the building accelerating upwards and downwards, proves that the method is flawed?
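As background to the noise question: acceleration is usually obtained by differencing position twice, and that step multiplies small position errors by roughly 4/dt². The sketch below uses made-up numbers (a 30 fps frame interval and a 0.01 ft position error that flips sign every frame) applied to ideal free fall. It is an illustration of the arithmetic only, not a model of the actual WTC 7 data.

```python
G = 32.2          # ft/s^2, free-fall acceleration
DT = 1.0 / 30.0   # assumed frame interval (hypothetical 30 fps video)
NOISE = 0.01      # assumed position error in feet, alternating sign each frame


def second_difference(positions, dt):
    """Estimate acceleration at each interior sample by central differencing."""
    return [(positions[i + 1] - 2 * positions[i] + positions[i - 1]) / dt ** 2
            for i in range(1, len(positions) - 1)]


# Distance fallen under ideal free fall, then the same data with tiny noise.
clean = [0.5 * G * (i * DT) ** 2 for i in range(12)]
noisy = [y + NOISE * (-1) ** i for i, y in enumerate(clean)]

clean_acc = second_difference(clean, DT)
noisy_acc = second_difference(noisy, DT)

# The clean data recovers G at every sample. The noisy data swings by
# 4 * NOISE / DT**2 = 36 ft/s^2 either side of G, so it alternates
# between about 68.2 and about -3.8 ft/s^2.
print(min(clean_acc), max(clean_acc))
print(min(noisy_acc), max(noisy_acc))
```

With distance fallen taken as positive, a negative value here reads as "accelerating upwards", even though the underlying motion is plain free fall and the position error is only a hundredth of a foot. Whether the real data behaves this way depends on the actual noise level and on how much smoothing is applied before differencing.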

It would perhaps be interesting to re-evaluate tracking accuracy using shared Inkscape image collections with known motion (known to whoever generates the shared image set) in blind tests across OWE custom, SE and AE platforms.

It would be interesting, but you do want me to live, don't you?

That parameter could be negotiable.

Note the comparison between the dark blue and magenta velocity profiles which show a coupling action between core and perimeter.

Please can you explain why this graph shows "a coupling action between core and perimeter".