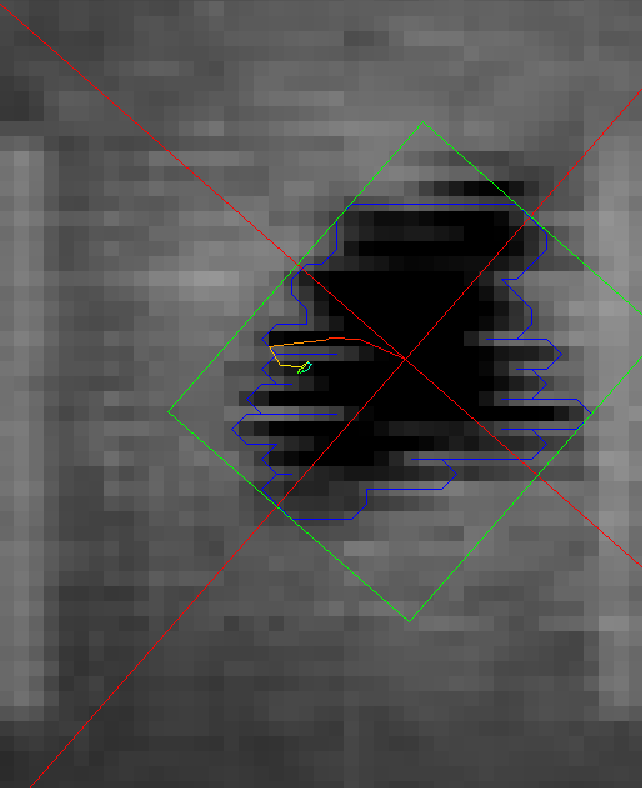

This is what frame 984 of Gimbal looks like, showing the object's geometric properties detected by the method described here.

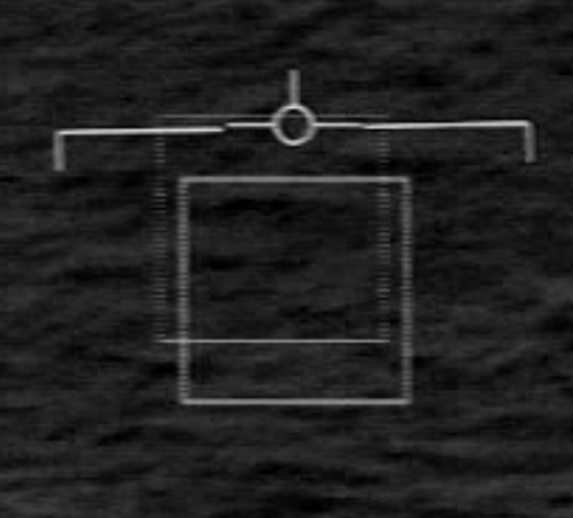

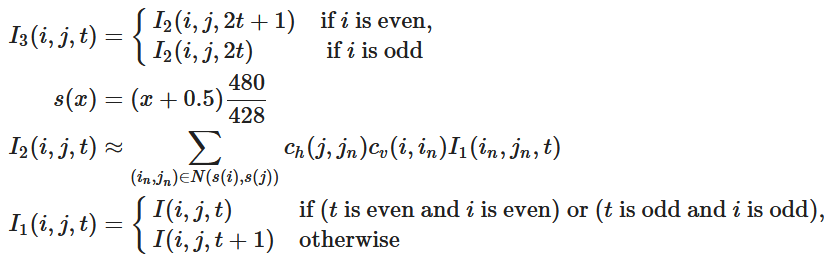

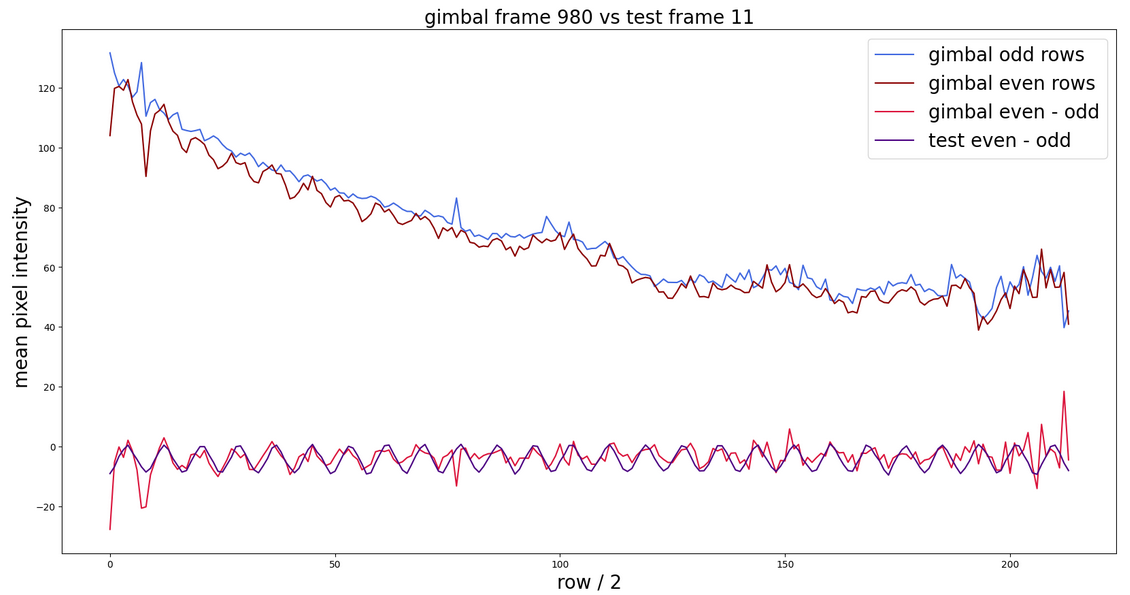

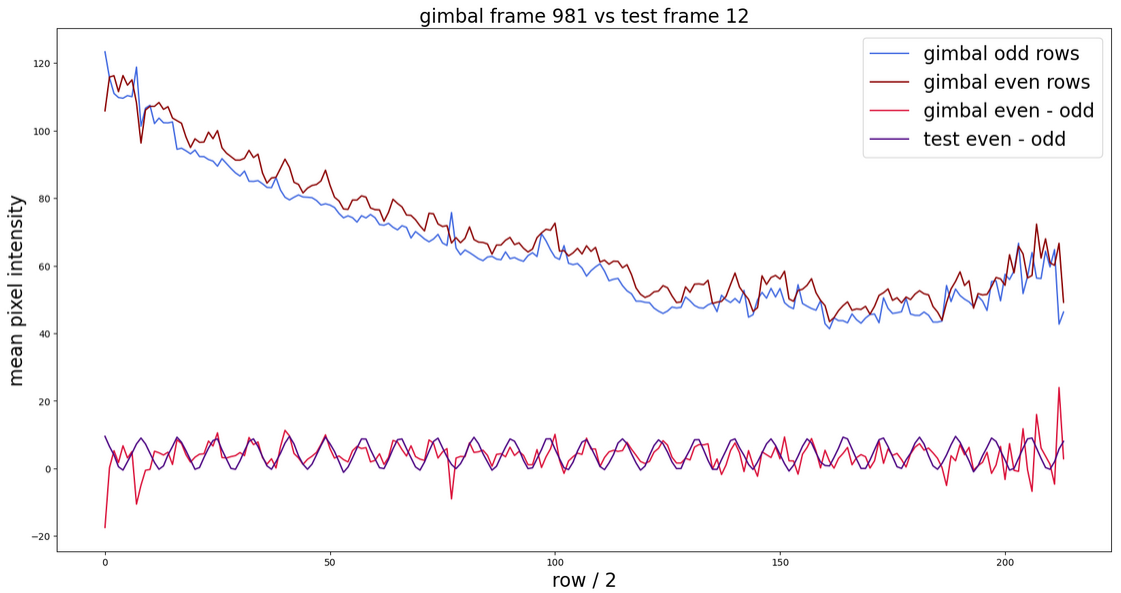

Clearly, the accuracy of the method is heavily degraded on frames like this, where sudden movements of the object lead to more pronounced combing artifacts due to interlacing. The automated motion tracking of the clouds filters and averages errors over a large area, which counteracts this to a degree, but its accuracy is also affected. What follows is a lengthy but still inconclusive investigation into what can be done to improve the accuracy of both methods in the face of such artifacts. This post mainly focuses on describing the problem in detail and figuring out what happened to the video.

1. Attempts to use existing deinterlacing algorithms

Many different deinterlacing algorithms exist that can often do a decent job of alleviating such artifacts. From among the methods supported natively by FFmpeg, for example, I tried BWDIF (Bob Weaver Deinterlacing Filter).

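The frames from the original WMV file were extracted to the current folder, cropped, and stored in a lossless image format with the following FFmpeg command:

```bash
# convert to grayscale and crop the 428x428 image area (crop=w:h:x:y)
FORMAT_CROP="format=gray, crop=428:428:104:27"
# left unquoted below on purpose, so the shell splits it into separate arguments
OUT_IMAGES="-start_number 0 gimbal%04d.png"
ffmpeg -i "../2 - GIMBAL.wmv" -vf "$FORMAT_CROP" $OUT_IMAGES
```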

With BWDIF deinterlacing the command is as follows. Note that it requires the variables defined above.

```bash
# send_frame keeps the same frame rate; bottom field first (parity=bff) looks better on frame 984 than parity=tff
BWDIF="bwdif=mode=send_frame:parity=bff:deint=all"
ffmpeg -i "../2 - GIMBAL.wmv" -vf "$FORMAT_CROP, $BWDIF" $OUT_IMAGES
```

Here's a comparison between the original frame 1002 and the same frame deinterlaced with BWDIF:

And for frame 984:

So BWDIF helps but the results are still significantly wavy.

QTGMC is another widely recommended deinterlacing method that I tried. It has many dependencies, but for Windows someone made a package that bundles all of them, so you can just extract it and start using it. I changed the last four lines of "qtgmc.avs", going for the highest quality possible, although many settings remain to be tweaked:

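```
# load the WMV through the FFMS2 source plugin
FFMpegSource2("2 - GIMBAL.wmv")
QTGMC(preset="Very Slow", ShowSettings=false, NNSize=3, NNeurons=4, EdiQual=2)
# QTGMC outputs one frame per field (double rate); keep every other one to return to 30 fps
SelectOdd()
```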

Then the following command runs the filter:

```bash
../ffmpeg.exe -i ../qtgmc.avs -vf "$FORMAT_CROP" $OUT_IMAGES
```

It does substantially improve the results on some frames. Here's a comparison between BWDIF and QTGMC on frame 1002:

But on other frames like 984 the difference is much smaller.

None of the methods I tried completely removed the artifacts, and they tended to blur the images. Perhaps state-of-the-art deinterlacing methods like this one could do even better, but after digging deeper it quickly became clear that the video has artifacts that these methods were not designed to handle.

2. A closer look at the frames

Normally, interlaced video consists of one snapshot of the scene on the even lines and another snapshot, taken at a different time, on the odd lines of each frame. But that is not the case in Gimbal and GoFast. Mick illustrated this by overlaying a grille of alternating horizontal lines on frame 1002.

Alternatively, we can use the following commands to extract the top/bottom fields of the video:

```bash
# line-double each extracted field back to full height
SCALE_VERT="scale=iw:2*ih:sws_flags=neighbor"
FIELD_TOP="setfield=tff, field=0" # make sure the field dominance is set to 'tff' to get consistent results
FIELD_BOTTOM="setfield=tff, field=1"
ffmpeg -i "../2 - GIMBAL.wmv" -vf "$FORMAT_CROP, $FIELD_TOP, $SCALE_VERT" -start_number 0 gimbal%04d_0.png
ffmpeg -i "../2 - GIMBAL.wmv" -vf "$FORMAT_CROP, $FIELD_BOTTOM, $SCALE_VERT" -start_number 0 gimbal%04d_1.png
```

The object shows some degree of combing artifacts in both the top and bottom fields, but the track bar is solid in the bottom field, while in the top field it fades in and out.
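For readers who want to work with the fields outside ffmpeg, here is a minimal Python sketch of what the extraction above amounts to, assuming a frame is held as a list of pixel rows (the helper names `split_fields` and `line_double` are hypothetical, not from the linked code). The top field is rows 0, 2, 4, ..., the bottom field rows 1, 3, 5, ..., and nearest-neighbour 2x vertical scaling simply repeats each row, which is roughly what `field` followed by `scale=iw:2*ih:sws_flags=neighbor` produces.

```python
# Hypothetical helpers; a frame is a list of pixel rows.

def split_fields(frame):
    """Return (top, bottom): top = rows 0, 2, 4, ...; bottom = rows 1, 3, 5, ..."""
    return frame[0::2], frame[1::2]


def line_double(field):
    """Repeat every row once (nearest-neighbour 2x vertical scaling)."""
    doubled = []
    for row in field:
        doubled.append(list(row))
        doubled.append(list(row))
    return doubled


if __name__ == "__main__":
    # 6x3 test frame whose first column holds 10 * row index
    frame = [[10 * r + c for c in range(3)] for r in range(6)]
    top, bottom = split_fields(frame)
    print([row[0] for row in top])     # [0, 20, 40]
    print([row[0] for row in bottom])  # [10, 30, 50]
    print(len(line_double(top)))       # 6
```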

Arranging the fields to appear in sequence, we can see that the bottom field (B) of a frame always shows events that happened before the top field (T) of the same frame. This appears to match what the Video4Linux documentation says about NTSC:

> The first line of the top field is the first line of an interlaced frame, the first line of the bottom field is the second line of that frame. ... The temporal order of the fields (whether the top or bottom field is first transmitted) depends on the current video standard. M/NTSC transmits the bottom field first, all other standards the top field first.
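The bottom-first ordering can be made explicit with a small helper that lists the fields in the presumed presentation order B0, T0, B1, T1, ... This is a sketch, assuming frames are lists of pixel rows and that the bottom field (odd rows) always comes first, as the quote states for M/NTSC; `field_sequence` is a hypothetical name.

```python
def field_sequence(frames, bottom_first=True):
    """Return (frame_index, label, rows) tuples in presumed temporal order.

    Top field (T) = even rows 0, 2, ...; bottom field (B) = odd rows 1, 3, ...
    With bottom_first=True (assumed M/NTSC order) the result is B0, T0, B1, T1, ...
    """
    sequence = []
    for n, frame in enumerate(frames):
        top, bottom = frame[0::2], frame[1::2]
        pair = [("B", bottom), ("T", top)] if bottom_first else [("T", top), ("B", bottom)]
        for label, rows in pair:
            sequence.append((n, label, rows))
    return sequence


if __name__ == "__main__":
    frames = [[[0], [1], [2], [3]], [[4], [5], [6], [7]]]
    print([(n, label) for n, label, _ in field_sequence(frames)])
    # [(0, 'B'), (0, 'T'), (1, 'B'), (1, 'T')]
```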

Presumably the object is constantly moving to the left during this time, but it remains unclear why the combing distortion affecting it is always much greater in the top fields.

Comparing the top fields of frames 980 and 981, we see alternating dark and bright horizontal bands separated by about 7-8 lines.

But comparing the bottom fields of the same two frames, we don't see the same bands (except perhaps some barely noticeable ones). Instead, we just see a significant change in the overall brightness of the image.

In fact, those clear bands related to illumination changes never appear in the bottom field. This observation already has actionable consequences. Tracking algorithms should work somewhat better without these illumination bands, so they could either be run on the bottom field only, or the illumination changes could be measured more accurately on the bottom field, and that information might then allow the bands to be removed from the top fields as well.
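As a rough illustration of the second option, here is a hypothetical correction that assumes the bands act as an additive brightness offset per row: each top-field row is shifted so that its mean matches the average of the two bottom-field rows adjacent to it in the frame. This is only a sketch under that assumption (`remove_row_bands` is not from the linked code). It only adjusts row means, so detail within a row is preserved, but genuine brightness differences between neighbouring rows of the two fields would be flattened as well.

```python
def _mean(row):
    return sum(row) / len(row)


def remove_row_bands(top, bottom):
    """Hypothetical band removal, assuming an additive brightness offset per row.

    top, bottom: the two fields of one frame, as lists of pixel rows.
    Top row k is frame row 2k; its neighbours in the bottom field are frame
    rows 2k-1 and 2k+1, i.e. bottom rows k-1 and k (clamped at the edges).
    """
    bottom_means = [_mean(r) for r in bottom]
    last = len(bottom_means) - 1
    corrected = []
    for k, row in enumerate(top):
        above = bottom_means[max(k - 1, 0)]
        below = bottom_means[min(k, last)]
        offset = _mean(row) - (above + below) / 2
        # Only the row mean changes; detail within the row is kept.
        corrected.append([v - offset for v in row])
    return corrected


if __name__ == "__main__":
    bottom = [[100.0] * 4 for _ in range(3)]
    top = [[105.0] * 4, [95.0] * 4, [104.0, 106.0, 104.0, 106.0]]
    for row in remove_row_bands(top, bottom):
        print(row)
    # [100.0, 100.0, 100.0, 100.0] twice, then [99.0, 101.0, 99.0, 101.0]
```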

There's an oddity in the top field of frame 373, just as the video changes from white hot to black hot. Alternating bands affect most of the image, but it's unclear why the last 22 rows, the bottom ~10% of the field, are unaffected. It looks like the recording is not synchronized with the cockpit display, so the display already starts showing part of the next frame while the current one is being recorded. Here I show the fields of frames 372-374 in sequence:

This is where the desync is most clearly visible, but it also occurs throughout the rest of the video if we look more closely. For example, on frame 1022, just before a change in brightness, we can see in the following that the lower half is darker than the top half:
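One way to locate the row where such a desync starts is a simple change-point search on the per-row brightness change between two consecutive fields: find the split that best separates rows that changed from rows that did not. A minimal sketch, assuming the affected rows all change by a roughly similar magnitude; absolute values are used so that alternating bands still count as "changed". The function names are hypothetical.

```python
def row_mean_diffs(field_a, field_b):
    """Per-row change in mean brightness from field_a to field_b."""
    return [sum(b) / len(b) - sum(a) / len(a) for a, b in zip(field_a, field_b)]


def find_split_row(diffs):
    """Return the row s that best splits |diffs| into two constant segments.

    Least-squares single change point: rows [0, s) form one segment and
    rows [s, n) the other.
    """
    mags = [abs(d) for d in diffs]
    best_s, best_cost = None, float("inf")
    for s in range(1, len(mags)):
        cost = 0.0
        for seg in (mags[:s], mags[s:]):
            m = sum(seg) / len(seg)
            cost += sum((x - m) ** 2 for x in seg)
        if cost < best_cost:
            best_s, best_cost = s, cost
    return best_s


if __name__ == "__main__":
    # Synthetic 214-row fields: all rows darken except the last 22.
    before = [[100.0] * 8 for _ in range(214)]
    after = [[60.0] * 8 for _ in range(192)] + [[100.0] * 8 for _ in range(22)]
    s = find_split_row(row_mean_diffs(before, after))
    print(s, 214 - s)  # 192 22
```

The rows below the detected split could then be filled from the previous field, as suggested in the future-work notes.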

We see the same interlacing patterns in GoFast as well. Here's frame 38:

In FLIR1 the compression makes it more difficult to see these patterns, but they do appear on some frames:

3. Simulating the artifacts

I came across a thread on another forum where someone had a similar problem. The difference is that in their clip the alternating pattern is more jagged rather than smoothly fading in and out, but there are thick bands showing a combing pattern instead of alternating lines, vaguely similar to Gimbal's top/bottom fields.

In that case they found the cause and were even able to write some code to correct the artifact.

> Artifacts like this are caused by taking an interlaced source and resizing down with a scaler that expects progressive input.

After some investigation, it seems very likely that this is indeed what happened in Gimbal as well, just with a different interpolation method used during resizing. The sequence of events by which the video originated was summarized here, based on an ATFLIR manual. A paper describing the ATFLIR also confirms that its sensor has 640x480 pixels. So the original frames might have been 480x480 in size, recorded onto modified RS-170 video, but in Gimbal the cropped frames are 428x428. The video has 30 progressive frames per second, but it originated from an interlaced video with 60 fields per second. It's unclear why or by whom it was resized, but perhaps the hardware/software used did not properly take into account that the video is interlaced, and downscaled the woven frames instead of scaling the fields separately or deinterlacing first.

The theory is that 60 full frames (480x480) per second in image I were recorded as 60 fields (480x240). They were converted to 60 interlaced frames (480x480) in image I1 by simply weaving the fields together. Each of those 60 interlaced frames was downscaled to 428x428 in image I2 with some interpolation that smoothly blended the alternating lines together. Finally, the video was reinterlaced by weaving alternating lines from the current and next frame into image I3, padded to 640x480, and every other frame was discarded to arrive at the 30 FPS Gimbal video. More precisely, this is described by the following formulas:

Here t is a frame number, with I2, I1 and I having twice as many frames as I3. Going from I2 to I1, the coordinates of the center of each pixel (+0.5 offset) are scaled up. I2 samples pixels (i_n, j_n) from I1 in a neighborhood N around those scaled coordinates. Image scaling is typically implemented as a horizontal scaling followed by a vertical scaling, with lookup tables for the horizontal and vertical scaling coefficients c_h and c_v. The size of the neighborhood depends on the scaling method used. For ffmpeg's "bilinear" scaling mode it varies: typically 2x2, but up to 3x3 blocks of pixels have nonzero coefficients. Whether it's the even or odd rows of I1 that sample the next frame of I alternates between frames.
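The chain described above can be sketched numerically. The following Python sketch is a simplified, hypothetical model, not the code posted on GitHub. It works on a single image column, since horizontal scaling doesn't affect horizontal bands. It uses plain 2-tap linear interpolation with the +0.5 pixel-center convention instead of ffmpeg's "bilinear" swscale filter, and it omits the padding. The parities `phi` (I to I1) and `psi` (I2 to I3) are free parameters; with `psi=1` the odd rows (bottom field) come from the earlier frame, matching the bottom-first order. A brightness drop between two input frames then produces bands in one output field while the other stays flat.

```python
import math

SRC_H, DST_H = 480, 428  # source and destination heights from the theory above


def weave(first, second, parity):
    """Rows whose index has the given parity come from `first`, the others from `second`."""
    return [first[i] if i % 2 == parity else second[i] for i in range(len(first))]


def resize_linear(col, dst_h):
    """2-tap linear interpolation with pixel centres at +0.5 (a simplification of swscale)."""
    src_h = len(col)
    scale = src_h / dst_h
    out = []
    for y in range(dst_h):
        p = min(max((y + 0.5) * scale - 0.5, 0.0), src_h - 1)
        i0 = math.floor(p)
        i1 = min(i0 + 1, src_h - 1)
        f = p - i0
        out.append((1 - f) * col[i0] + f * col[i1])
    return out


def simulate(levels, phi=0, psi=1):
    """Return the I3 frames (single columns) for input frames of uniform brightness `levels`."""
    img_i = [[float(v)] * SRC_H for v in levels]
    # I -> I1: weave frame t with frame t+1; the parity taken from frame t alternates with t.
    img_i1 = [weave(img_i[t], img_i[t + 1], (t + phi) % 2) for t in range(len(img_i) - 1)]
    # I1 -> I2: downscale each woven frame as if it were progressive.
    img_i2 = [resize_linear(frame, DST_H) for frame in img_i1]
    # I2 -> I3: weave consecutive frames (rows of parity psi from the earlier one),
    # keeping only the weaves that start on even frames.
    return [weave(img_i2[2 * tau], img_i2[2 * tau + 1], psi) for tau in range(len(img_i2) // 2)]


def local_maxima(values):
    return [k for k in range(1, len(values) - 1) if values[k - 1] < values[k] >= values[k + 1]]


if __name__ == "__main__":
    frame = simulate([100, 100, 60, 60])[0]
    top, bottom = frame[0::2], frame[1::2]
    peaks = local_maxima(top)
    print("top field range:", round(min(top), 1), round(max(top), 1))
    print("mean peak spacing:", round((peaks[-1] - peaks[0]) / (len(peaks) - 1), 2))
    print("bottom field flat:", all(abs(v - 100) < 1e-9 for v in bottom))
```

With `simulate([100, 100, 60, 60])`, the top field of the first output frame oscillates between roughly 60 and 100, with peaks a little over 8 field rows apart, while the bottom field stays at 100.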

To simulate the effect, I first created the following 480x480, 2 FPS test video. I posted the code for all of this here on GitHub, with links to run it on either Google Colab or Kaggle.

Then I applied the following filters to it, with tests to make sure that their result matches the formula above:

```bash
# using tinterlace filters (https://ffmpeg.org/ffmpeg-filters.html#tinterlace)
# that implement going from I to I1 and going from I2 to I3
INTERLACE_BOTTOM="tinterlace=interleave_bottom, tinterlace=interlacex2"
INTERLACE_TOP="tinterlace=interleave_top, tinterlace=interlacex2"
# Using the 'bilinear' method for the scaling, but it remains to be seen which one matches best
SCALE="scale=428:428:sws_flags=bilinear"
SELECT_EVEN="select='eq(mod(n,2),0)'"
FULL_FILTERS="$INTERLACE_TOP, $SCALE, $INTERLACE_BOTTOM, $SELECT_EVEN"
INPUT_PNG="-start_number 0 -i test_%04d.png"
ENCODE_WMV="-codec wmv1 -b:v 3M" # try to match the original quality
ffmpeg -r 2 $INPUT_PNG -vf "$FULL_FILTERS" $ENCODE_WMV -r 1 test_vid_full.wmv
```

And here's a video of its top/bottom fields in the same temporal order as Gimbal:

This appears to reproduce most of the effects seen in the Gimbal frames above. We can see clear horizontal bands in the top fields when the background brightness changes. The bottom field doesn't show those clear bands because, when generating the input video, I made sure to change the background color only on even frames. The WMV1 codec does add some faint horizontal lines to the bottom fields, though, since the full interlaced image is compressed in blocks and the information from one line bleeds over into the next. When the bar moves, it appears duplicated, with one copy more solid than the other: in the top field the bar fades in and out, while in the bottom field it is always solid. I made the object move only on even input frames, which leads to much greater distortion of the object in the top field, with some slight distortion in the bottom field as well due to the compression; further research is needed to see whether we can get closer to the degree of combing seen in Gimbal's bottom fields. The idea is that the overlay (e.g. the position of the bars) is updated only 30 times per second or less, so you only see it move during the period captured by the top field. Similarly, the pod's auto level gain (ALG) algorithm might update the level/gain settings at most 30 times per second. But it's unclear whether the ATFLIR's sensor can only capture 30 full images of the scene per second.

To quantify whether the simulation is comparable to Gimbal's actual frames, I looked at the horizontal bands seen when, in both cases, the image suddenly becomes darker. At the top of the following plot I show the mean pixel intensity over the even and odd rows of Gimbal's frame 980. We can see that the even-row intensity periodically dips below the odd-row intensity. At the bottom of the plot I show the difference between consecutive even/odd rows in Gimbal and compare it to the same difference calculated for the simulated test frame. In the test video I made the background color change much more than in Gimbal, so I scaled the amplitude and shifted the mean of the test signal for a better match, but it's a remarkable result that the frequency and phase of the test signal match what is observed in Gimbal.
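The comparison in this plot boils down to a few row statistics. Below is a minimal sketch, assuming frames are lists of pixel rows. `row_means` gives the per-row mean intensity, and `even_odd_difference` gives the difference between each even row and the odd row below it. `fit_scale_offset` is one possible way to scale and shift the test signal onto the observed one; the exact normalization used for the plot isn't specified, so this least-squares fit is an assumption. The sign of `correlation` then distinguishes matching phase from reversed phase. All names are hypothetical.

```python
def row_means(frame):
    """Mean intensity of each row (frame = list of pixel rows)."""
    return [sum(row) / len(row) for row in frame]


def even_odd_difference(means):
    """Difference between each even row and the odd row just below it."""
    return [means[2 * k] - means[2 * k + 1] for k in range(len(means) // 2)]


def fit_scale_offset(signal, target):
    """Least-squares a, b minimising sum((a * s + b - t)^2)."""
    n = len(signal)
    ms, mt = sum(signal) / n, sum(target) / n
    cov = sum((s - ms) * (t - mt) for s, t in zip(signal, target))
    var = sum((s - ms) ** 2 for s in signal)
    a = cov / var
    return a, mt - a * ms


def correlation(x, y):
    """Pearson correlation: near +1 for matching phase, near -1 for reversed phase."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


if __name__ == "__main__":
    observed = [1.0, 3.0, 2.0, 5.0, 4.0]
    simulated = [(v - 3) / 5 for v in observed]  # same shape, different amplitude and mean
    a, b = fit_scale_offset(simulated, observed)
    print(round(a, 6), round(b, 6))
    print(round(correlation(observed, simulated), 6))
```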

In Gimbal's frame 981 and test frame 12, the image is about to become brighter again, so the phase of the signals is reversed.

The frequency depends on the ratio between the source and destination heights of the image, 480/428. If either of those dimensions were off by just a few pixels, we'd see the peaks of the test signal drift away from the peaks of the observed signal further down the image. The phase also depends on whether it's the even or odd rows of I1 that sample pixels from the next frame. That's why, in the filter chain of the ffmpeg command above, I chose two opposing filters: first "interleave_top", then "interleave_bottom". If the first one were also "interleave_bottom", the phase of the test signal would be exactly opposite, with its peaks matching the troughs of the observed signal. The reason for this difference in filters remains unclear. One possibility is that the final interlacing was done on the full 640x480 frames, and the Gimbal frames start at an odd offset (27), so the even rows of the cropped Gimbal frames are the odd rows of the full video, whereas the first interlacing of the 480x480 frames would've happened without this padding. But that would lead to a temporal order in which the top fields are transmitted first, which is not how NTSC should work, so it's unclear. Another vague idea is that it might somehow be related to the recording desync that is most prominent when switching from WH to BH, affecting the bottom 10% of the fields as shown above. If the frames had already been (improperly) resized, but a recording of them happened to start on an odd frame rather than an even one, then perhaps that might also cause the phase reversal we see.
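The dependence of the band frequency on 480/428 can be made concrete. Assume the banding depends only on where each output row samples relative to the row parity of the woven frame. That holds for any shift-invariant interpolation kernel, which is itself only an approximation of swscale. Under that assumption, the sampling position advances by (2*480/428) mod 2 source rows per field row, so the pattern repeats every 107/13, or about 8.2, field rows. The sketch below (hypothetical helper names) computes that period exactly with fractions, and also computes how far the predictions for two candidate heights drift apart over the 214 rows of a field.

```python
from fractions import Fraction


def band_period(src_h, dst_h):
    """Predicted band period, in field rows, for a woven frame resized from src_h to dst_h rows.

    Consecutive rows of one field sample 2 * src_h / dst_h source rows apart; only that
    step modulo 2 (the row-parity period) matters, folded to the nearer side.
    """
    step = Fraction(2 * src_h, dst_h) % 2
    d = min(step, 2 - step)
    if d == 0:
        raise ValueError("sampling stays in phase with the row parity: no banding")
    return 2 / d


def drift_cycles(src_h, dst_a, dst_b, field_rows):
    """How many band cycles the predictions for two heights drift apart over field_rows rows."""
    return field_rows * abs(1 / band_period(src_h, dst_a) - 1 / band_period(src_h, dst_b))


if __name__ == "__main__":
    for h in (426, 428, 430):
        print(h, float(band_period(480, h)))
    print(float(drift_cycles(480, 428, 426, 214)))
```

For 428 versus 426 rows, the periods are about 8.23 and 7.89 field rows. The two predictions drift apart by 80/71, or about 1.13 cycles, over a 214-row field, so by the middle of the field the predicted peaks would already sit near the observed troughs.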

4. Future work

The next step should be to turn the information above into something that can correct some of the observed artifacts and improve the accuracy of the methods used to analyze Gimbal. It should be possible to correct the horizontal bands; I found a thread where someone was able to do something like that, in their case for artifacts caused by upscaling interlaced footage. It should also be possible to correct the recording desync by taking information from a previous frame/field for the bottom 10% of the image. Simply running most algorithms on the bottom field might help: while still imperfect, it at least combines information from fewer consecutive frames. A brute-force way of figuring out how things moved might be to generate several motion hypotheses, resize/interlace the generated frames with the method above, and compare the results with the observations until the best match is found. It remains an open question whether it's possible to actually deinterlace the image and fully remove the artifacts from the object itself, whether it's possible to generate temporally consistent frames, or whether the equations of the motion tracking algorithms can be modified so that they produce more accurate results in spite of blending together information from consecutive frames.
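The brute-force idea can be sketched in a few lines. This is a toy, hypothetical example rather than a working tracker. The scene is a bright vertical bar on a flat background, and each hypothesis is a horizontal displacement `dx` between the two instants that get woven together. The forward model is a simplified weave plus a 2-tap linear vertical downscale from 480 to 428 rows. A real version would also need the reinterlacing step, the compression, and far more parameters.

```python
import math

SRC_H, DST_H, W = 480, 428, 32


def render(x, width=4, bar=200.0, background=50.0):
    """Toy scene (hypothetical): a bright vertical bar starting at column x."""
    row = [bar if x <= c < x + width else background for c in range(W)]
    return [list(row) for _ in range(SRC_H)]


def weave(first, second, parity):
    """Rows whose index has the given parity come from `first`, the others from `second`."""
    return [first[i] if i % 2 == parity else second[i] for i in range(len(first))]


def resize_rows(frame, dst_h):
    """Vertical-only 2-tap linear downscale with +0.5 pixel centres."""
    src_h = len(frame)
    scale = src_h / dst_h
    out = []
    for y in range(dst_h):
        p = min(max((y + 0.5) * scale - 0.5, 0.0), src_h - 1)
        i0 = math.floor(p)
        i1 = min(i0 + 1, src_h - 1)
        f = p - i0
        out.append([(1 - f) * a + f * b for a, b in zip(frame[i0], frame[i1])])
    return out


def forward(x0, dx):
    """Weave the scene at two instants (bar moved by dx), then downscale as if progressive."""
    return resize_rows(weave(render(x0), render(x0 + dx), 0), DST_H)


def sse(a, b):
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def best_hypothesis(observed, x0, candidates):
    """Pick the displacement whose simulated frame best matches the observation."""
    return min(candidates, key=lambda dx: sse(forward(x0, dx), observed))


if __name__ == "__main__":
    observed = forward(10, -3)  # stand-in for a real frame: bar moved 3 columns left
    print(best_hypothesis(observed, 10, range(-6, 7)))  # -3
```

Here `forward(10, -3)` stands in for an observed frame, and the search over `dx` from -6 to 6 recovers -3.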
