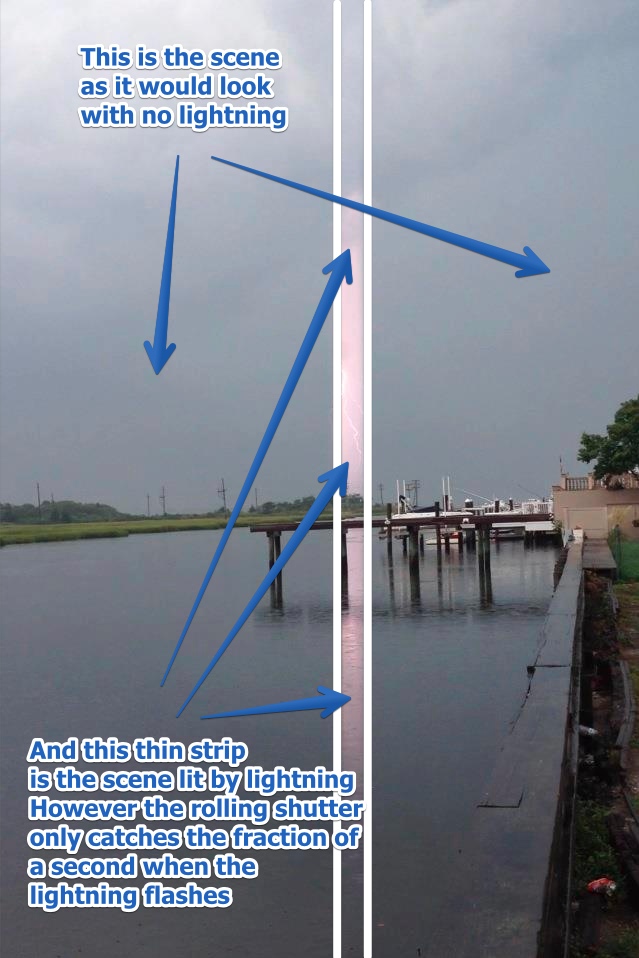

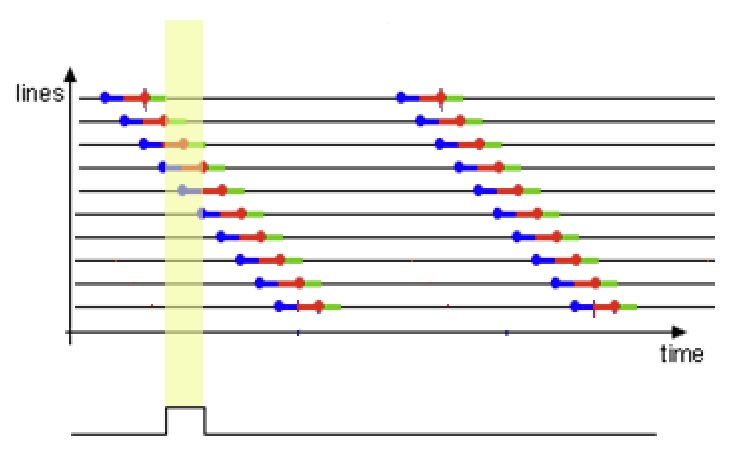

The above images are examples of a rolling shutter artifact. A "rolling shutter" means that the image is recorded one row (or column in portrait orientation) at a time, and not simultaneously. This means if the exposure and the flash are of very short duration, then the flash will only illuminate some pixel rows (or columns) of the image. Slowed down it looks a bit like this:

The green rectangle is the rolling shutter window: what the camera sees of the scene at any one time. The camera builds up the actual photo by reading from this rectangle. The flash of lightning is very short, but it still lights up all the pixels in the rolling shutter window, causing the "beam".

Here's what's going on in the first image. Notice the beam also shows up in the reflection.

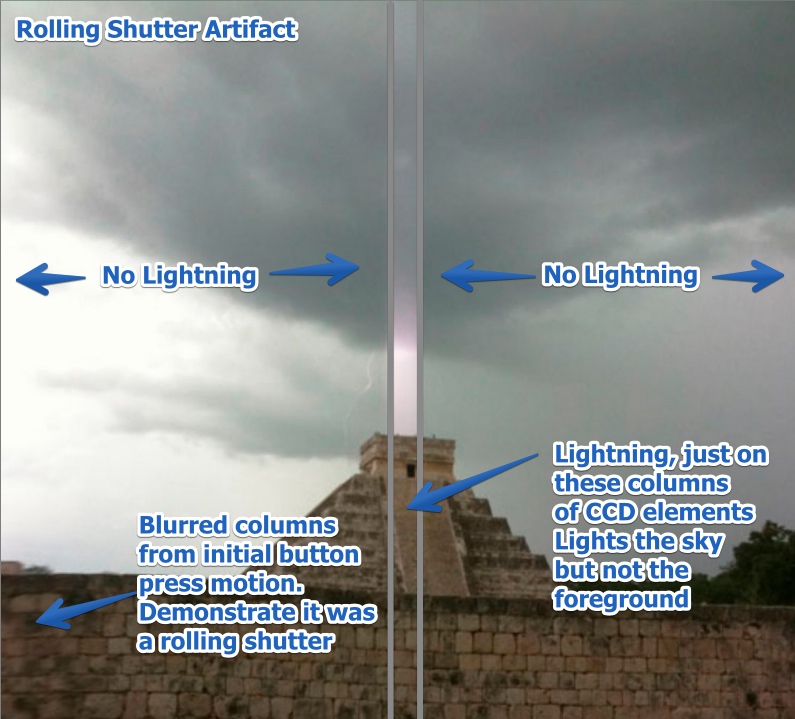

And with the Mayan temple, the lightning is behind the temple, so it only lights up the sky, and not the temple itself. So it looks like the "beam" goes behind the temple.

This can be replicated with a flash set to strobe, and taking lots of photos or video with an iPhone:

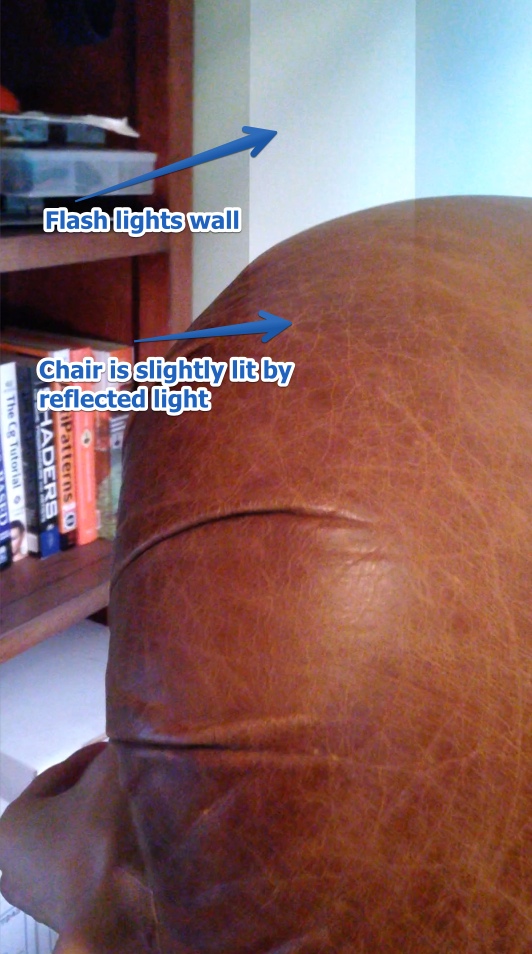

The fact that the "beam" can go "behind" the clouds can seem confusing in a still image. But it's really just that the lightning is behind the clouds, and they are thick enough to block the light. I simulated a similar effect using the flash and a foam pool toy. If the flash is in front of the toy then it illuminates it, and you get the stripe across the toy. If the flash is moved behind the toy, then the toy is not illuminated, so the "beam" (the stripe of the image) looks like it's going behind the toy.

It looks like the flash is not actually working, but you have to remember that for most of the frame it's actually off. The only time the flash is on is during the white bar.

In my image the flash gives a white light (as a camera flash is obviously intended to), but lightning often shows up with a purple or pink tinge:

Typical lightning photography with a rolling shutter looks more like half the image being lit by lightning, rather than the column we see above. Here's a more typical lightning shot at night with this problem:

http://www.productionapprentice.com/tutorials/ccd-vs-cmos/

Notice it's horizontal. The image is scanned one row at a time, but when you take a photo in portrait mode, then those rows are the columns.

Here I've repeated the flash experiment in a darker setting, and we get the half-illuminated image:

Update Aug 15 2015, a new example:

source, location

Here the illusion of it being a beam behind the clouds is particularly striking, as a band of dark clouds is partially obscuring the illuminated clouds behind. You can see part of the lightning bolt just below the power lines. Remember it's not actually a beam of light, it's just a slice of a scene as it would look illuminated by lightning.

This can be especially confusing when you notice the reflection of the "beam" on the hood of the car. Your brain naturally interprets this as a reflection of the scene, and so you think there's actually a beam in the sky. But if you look closely, you'll see the reflection in the hood is actually of a slightly different area of the sky. The "reflection" lines up perfectly vertically, whereas the nearby traffic signals are sloped. If this actually was a reflection of a beam in the sky, then it would also be sloped.

So while it's a reflection of sky illuminated by lightning, it's not the same area of the sky. It's a little unintuitive.

So again, what we are seeing here is essentially one normal image overlaid with a slice of another image that's illuminated by lightning. (And note that what looks like the reflection of a building in the lower right is actually the reflection of the air conditioning vent inside the car.)
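The "one image overlaid with a slice of another" idea can be sketched in a few lines of Python. This is only an illustration: `composite_with_band`, the tiny images, and the pixel values are all made up for the example, and it uses rows where a portrait photo would have columns.

```python
def composite_with_band(ambient, flash_lit, band_start, band_end):
    """Build a frame from two same-sized images (lists of rows).

    Rows in [band_start, band_end) come from the flash-lit version of the
    scene; every other row comes from the ambient-light version.
    """
    return [
        flash_lit[r] if band_start <= r < band_end else ambient[r]
        for r in range(len(ambient))
    ]


# Tiny hypothetical 6x4 grayscale "images": dark ambient scene, bright lit scene.
ambient = [[10] * 4 for _ in range(6)]
flash_lit = [[200] * 4 for _ in range(6)]

frame = composite_with_band(ambient, flash_lit, band_start=2, band_end=4)
for row in frame:
    print(row)
```

Anything the flash does not reach has the same pixel values in both images, which is why the band seems to pass "behind" it.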

Warning: Complicated Explanation Ahead!

A very unintuitive topic here is the width of the band. Why does a long exposure (in a dark setting) give a wide band, but the short exposure (in sunlight) give a narrow band? You would think that if the exposure is short, then more of that exposure would be happening during the flash, so the band would be wider.

The first thing here is that the flash I use only has a duration of 1/5000th of a second (0.2 ms), so if things work the way the previous paragraph suggested, then the most we could illuminate would be a band 1/166th the width of the image (in portrait orientation), and yet we see that we can get half or more of the image illuminated. So what's going on? How can a 1/166th of a frame exposure illuminate half the lines in a frame? And why does it get narrower for shorter exposures?
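The 1/166th figure comes from dividing the flash duration by the time taken for one frame. A quick check, assuming a frame time of 1/30 of a second (the frame rate is not stated here, so treat that value as an assumption):

```python
from fractions import Fraction

flash_duration = Fraction(1, 5000)   # seconds, as stated above (0.2 ms)
frame_time = Fraction(1, 30)         # seconds; ASSUMED ~30 fps, not stated in the post

# Fraction of one frame during which the flash is actually lit.
flash_fraction = flash_duration / frame_time

print(flash_fraction)                # the exact fraction of a frame
print(float(1 / flash_fraction))     # how many flash durations fit in one frame
```

With these numbers, roughly 166 flash durations fit into one frame.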

The answer is complicated. CMOS sensors can only be read one line at a time, and yet the exposures can happen simultaneously (or at least overlap). So the reset of each line (the start of when it begins to record) is staggered to match the read-out speed, and the exposure length is actually on an individual line basis.

The diagram below is showing the "flash window" - i.e. the portion of a frame in which you can fire the flash and have it affect all the rows. Normally when you use the flash, it's quite dark and so you have a long exposure, and hence quite a big window in which you can fire the flash. If you were to go outside of this window, you'd get only the top or the bottom of the image illuminated by the flash.

Source

In brighter conditions, the per-line exposures are shorter, so it's more like:

Notice the read-out of the lines (green) is the same angle, as that can't get any faster. This also dictates when the reset (blue) happens. But now that the exposure time (red) is much shorter, only a few lines can get exposed during the flash. Hence the "beam" is narrower for a shorter exposure.

So the width (or height in landscape) of the beam is actually dependent on three factors:

- The exposure duration - the red lines above.

- The rolling speed - how quickly does the "rolling shutter" move across the sensor. Determined here by read-out speed - the green line.

- The flash duration (the yellow bar)
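How the three factors combine can be sketched with a simplified per-line model: each line is reset one line-time after the previous one and read out one exposure later, and a line is part of the band if its exposure overlaps the flash at all. The function names are hypothetical, and the 1/30 s read-out, the 1/60 s long exposure, and the flash timing are assumptions chosen for illustration, not measurements.

```python
def flash_overlap_per_row(n_rows, line_time, exposure, flash_start, flash_duration):
    """Return, for each row, how long (in seconds) the flash overlaps its exposure.

    Row i is reset at i * line_time and read out `exposure` seconds later,
    i.e. the staggered reset and read-out described above.
    """
    flash_end = flash_start + flash_duration
    overlaps = []
    for i in range(n_rows):
        reset = i * line_time
        readout = reset + exposure
        overlap = min(readout, flash_end) - max(reset, flash_start)
        overlaps.append(max(0.0, overlap))
    return overlaps


def band_rows(overlaps):
    """Count the rows that received any flash light at all."""
    return sum(1 for o in overlaps if o > 0)


# Illustrative values only (assumptions, not measurements).
N_ROWS = 2488                     # number of lines on the sensor
LINE_TIME = 1 / 30 / N_ROWS       # full read-out ASSUMED to take 1/30 s
FLASH = 1 / 5000                  # flash duration: 0.2 ms
FLASH_START = 0.02                # flash fires 20 ms after the first line is reset

long_band = band_rows(flash_overlap_per_row(N_ROWS, LINE_TIME, 1 / 60, FLASH_START, FLASH))
short_band = band_rows(flash_overlap_per_row(N_ROWS, LINE_TIME, 1 / 3300, FLASH_START, FLASH))

print("long exposure band:", long_band, "rows")
print("short exposure band:", short_band, "rows")
```

In this model the band covers roughly (exposure + flash duration) / line time rows, so shortening the exposure narrows the band even though the flash itself is unchanged.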

Why sharp edges? The start and end of the flash might also occur during the reset or read-out phase of the lines, meaning that line only gets partially exposed to the flash. This means the edges of the "beam" are not always sharp.

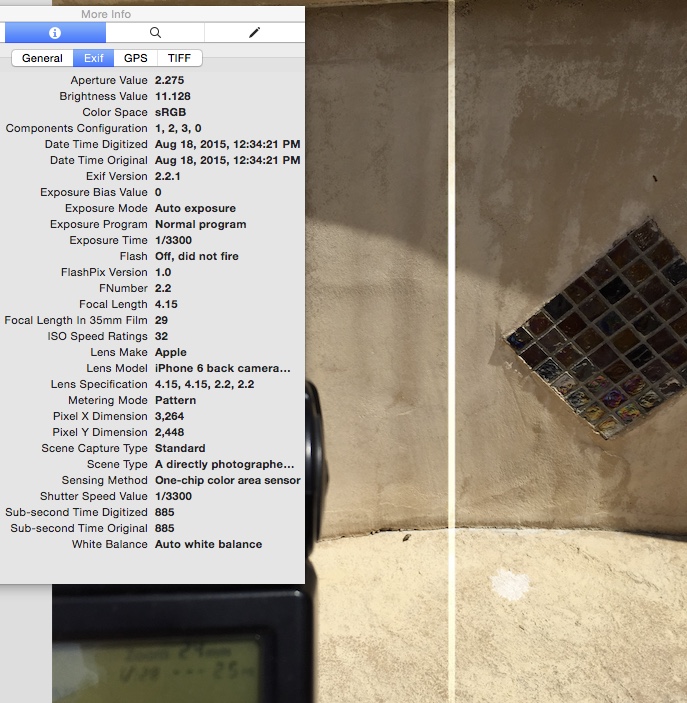

The narrowest possible beam comes from a combination of short exposure and short flash. Here I took individual photos in bright sunlight, which gave an Exposure Time of 1/3300. The flash is 0.2ms, or 1/5000 of a second, about 2/3 the duration of a single Exposure time. Here's what we got:

Zoomed in:

So we have a 36 pixel wide beam on a 2488 wide image. These are really lines (as the camera is just rotated 90 degrees). Only half the pixels in the middle are a solid bright color - that would be where the flash fell entirely within the exposure time. The colored pixels on either side indicate where the flash was only partially within the actual exposure time, with the different colors indicating different contributions from the red, green and blue sensors as they are read out at different times.
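As a rough consistency check, the measured band width can be turned into an implied read-out speed. This is a back-of-envelope sketch, assuming a simplified model in which a line catches some flash light whenever its exposure overlaps the flash at all, so the band spans (exposure + flash duration) of rolling time. It ignores the partial-exposure subtleties at the edges.

```python
exposure = 1 / 3300        # seconds, exposure time of the photo above
flash = 1 / 5000           # seconds, flash duration stated above
band_lines = 36            # measured width of the beam
total_lines = 2488         # image width quoted above

# Simplified model: the band covers (exposure + flash) worth of rolling time.
line_time = (exposure + flash) / band_lines
full_readout = line_time * total_lines

print(f"time per line: {line_time * 1e6:.1f} microseconds")
print(f"implied full read-out: {full_readout * 1e3:.1f} ms")
```

Under those assumptions the implied read-out time for the whole sensor comes out on the order of 1/30 of a second.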

For a discussion of Rolling Shutters in SLR cameras, see:

https://www.metabunk.org/will-an-sl...ts-like-a-beam-of-light-with-lightning.t6718/

Note: The above post is a summary post from material in the discussion thread below, hence the subsequent discussion might seem somewhat redundant. Updated with the Florida photo on 8/15/2015
