For the laymen, would you describe Himawari-8 full-disk images as "composites"?

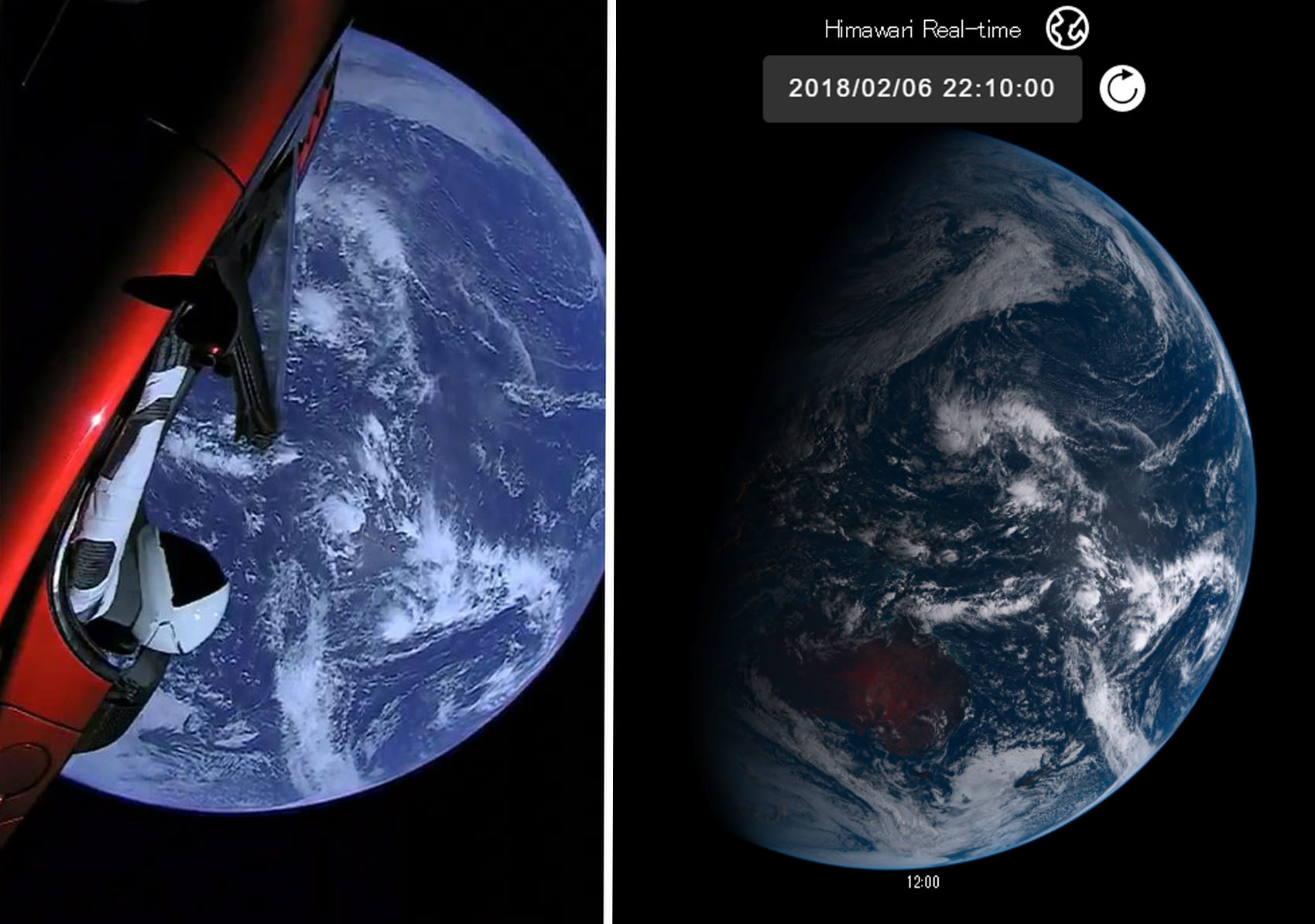

I think there's a danger of confusion in asking if something fits the meaning of a word without actually specifying the meaning of the word. This is especially true when you know that the Flat Earth and Fake Space folk want to claim that all space images are fake. If you say an image is a "composite" then that could be interpreted as saying it's fake.

All the satellites mentioned in this thread use a monochrome camera (or cameras) and filters (and/or beam splitters). For example, here's the GOES-16/17 setup:

Source:

https://www.goes-r.gov/downloads/resources/documents/GOES-RSeriesDataBook.pdf

The visible light images come from the VNIR (Visible & Near Infrared) sensor on the right, which is actually responsible for six bands.

So if you are seeing a color image then it's a "composite" of two or more channels (bands, wavelengths, colors).
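To make that sense of "composite" concrete, here is a minimal sketch of stacking three single-band images into one color image. The function name `stack_bands` and the tiny 2x2 sample values are made up for illustration, and it assumes the bands have already been resampled onto the same grid (on the real instruments the bands have different resolutions).

```python
def stack_bands(red, green, blue):
    """Combine three same-sized single-band images into one RGB image.

    Each band is a list of rows of brightness values (0.0 to 1.0).
    The result is a list of rows of (r, g, b) tuples.
    """
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("bands must have the same number of rows")
    rgb = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("bands must have the same number of columns")
        # Each output pixel simply takes one value from each band.
        rgb.append(list(zip(r_row, g_row, b_row)))
    return rgb


# Hypothetical 2x2 single-band images, one per channel.
red = [[0.8, 0.1], [0.2, 0.9]]
green = [[0.7, 0.2], [0.3, 0.9]]
blue = [[0.6, 0.4], [0.1, 0.9]]

color = stack_bands(red, green, blue)
print(color[0][0])  # (0.8, 0.7, 0.6)
```

Nothing here invents any pixel data. It is the same thing a phone camera does with its red, green, and blue photosites, just done after the fact.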

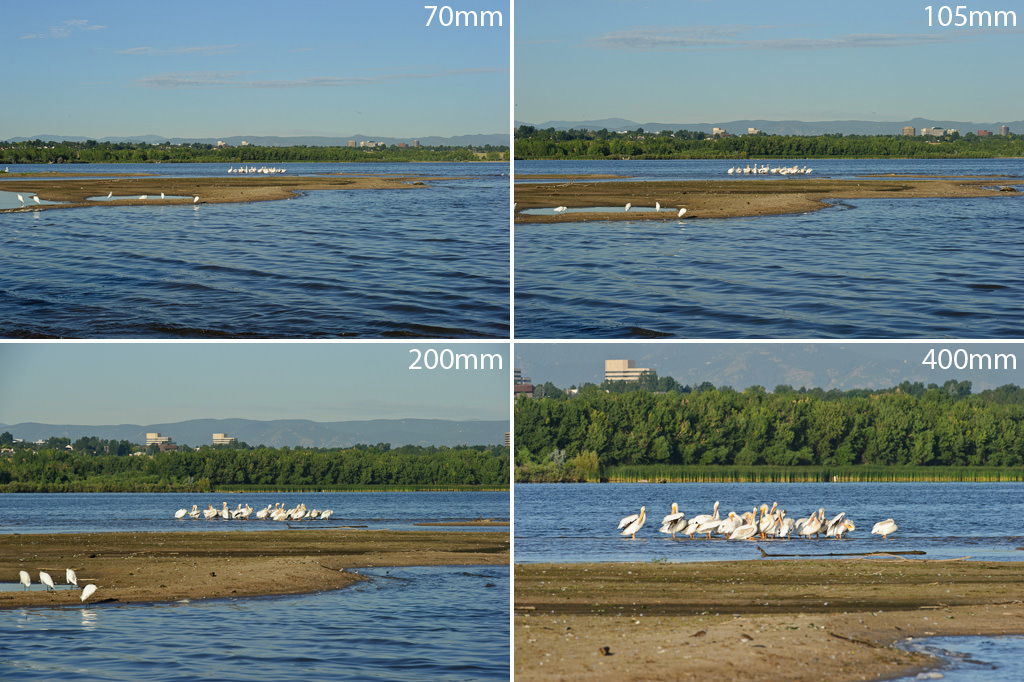

Most of them "scan" the image in a couple of ways. Firstly, they divide a region into "swathes" which they image individually and then stitch those swathes into a set of single-band images (which can then be combined into color images, or processed to highlight or measure certain things, like cirrus clouds). This is somewhat analogous to the "panorama" mode on a phone camera (but with less overlap). This large-scale scanning is done by aiming with two mirrors, which can be moved much more easily than the camera itself.

Then, many of the satellites "scan" the image in a way more like a simple scanner. Instead of the full image being registered on a single CCD, like on a conventional camera, the satellite imagers have "Focal Plane Modules" which have multiple columns of pixels, with different columns for different bands. As the east/west mirror moves across the image, this registers one column at a time.
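The two kinds of scanning can be sketched as a toy simulation. This is only an illustration under simplifying assumptions: the "scene" is a small grid of numbers, swathes do not overlap, and there is no geometric correction. The names `scan_swath` and `scan_full_image` are hypothetical.

```python
def scan_swath(scene, top, height):
    """Build one swath column by column, as the east/west mirror sweeps."""
    width = len(scene[0])
    swath = [[] for _ in range(height)]
    for col in range(width):       # one mirror step per image column
        for row in range(height):  # the detector column covers every row of the swath
            swath[row].append(scene[top + row][col])
    return swath


def scan_full_image(scene, swath_height):
    """Scan successive swathes from north to south and stitch them together."""
    image = []
    for top in range(0, len(scene), swath_height):
        # The last swath may be shorter if the heights do not divide evenly.
        height = min(swath_height, len(scene) - top)
        image.extend(scan_swath(scene, top, height))
    return image


# A hypothetical 6-row by 4-column scene, scanned in swathes 2 rows high.
scene = [[10 * r + c for c in range(4)] for r in range(6)]
image = scan_full_image(scene, swath_height=2)
print(image == scene)  # True
```

The point of the toy is that the stitched result is the same picture you would get from a single exposure. Scanning changes how the pixels are collected, not what they show.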

Here's the resolution of the

While they have multiple columns, only one pixel per row is used (it picks the best one). The first two are visible wavelengths. The highest resolution one is the 0.64µm (640nm, red) channel at 1460 pixels high. The lower resolution 0.47µm channel is blue. Notably absent is green (which would be around 0.51µm). So you can't get a natural color image by simply combining red, green, and blue (which is what most cameras do). Green has to be calculated from the other channels - maybe including the 0.86µm near-IR band, which detects vegetation, as a partial green band. (Himawari-8 does have a green band.)
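As a rough sketch of how a green value could be calculated from the other channels, the snippet below takes a weighted mix of red, blue, and the 0.86µm "veggie" band. The 0.45 / 0.45 / 0.10 weights follow a commonly cited approximation for GOES-R imagery. Treat them as an assumption here rather than the official recipe, and `synthetic_green` is a hypothetical name.

```python
def synthetic_green(red, blue, veggie, w_red=0.45, w_blue=0.45, w_veggie=0.10):
    """Estimate a green reflectance from red, blue, and near-IR reflectances.

    The default weights are an assumed approximation, not an official formula.
    """
    return w_red * red + w_blue * blue + w_veggie * veggie


def synthetic_green_band(red_band, blue_band, veggie_band):
    """Apply synthetic_green to every pixel of three same-sized bands."""
    return [
        [synthetic_green(r, b, v) for r, b, v in zip(r_row, b_row, v_row)]
        for r_row, b_row, v_row in zip(red_band, blue_band, veggie_band)
    ]


# Hypothetical reflectances for one vegetated pixel: dark in red and blue,
# bright in the near-infrared.
g = synthetic_green(red=0.2, blue=0.1, veggie=0.5)
print(round(g, 3))  # 0.185
```

The near-IR term is what stops forests coming out brown: vegetation is dark in red and blue but bright at 0.86µm, so it nudges the estimated green upward over land.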

Are the GOES or Himawari images "composite?" They produce full disk single band images that are scanned and stitched, but I would not describe those as composite unless you are being particularly pedantic. Here's the red image:

I'd be quite happy in describing that as a full-disk image of the Earth from space.

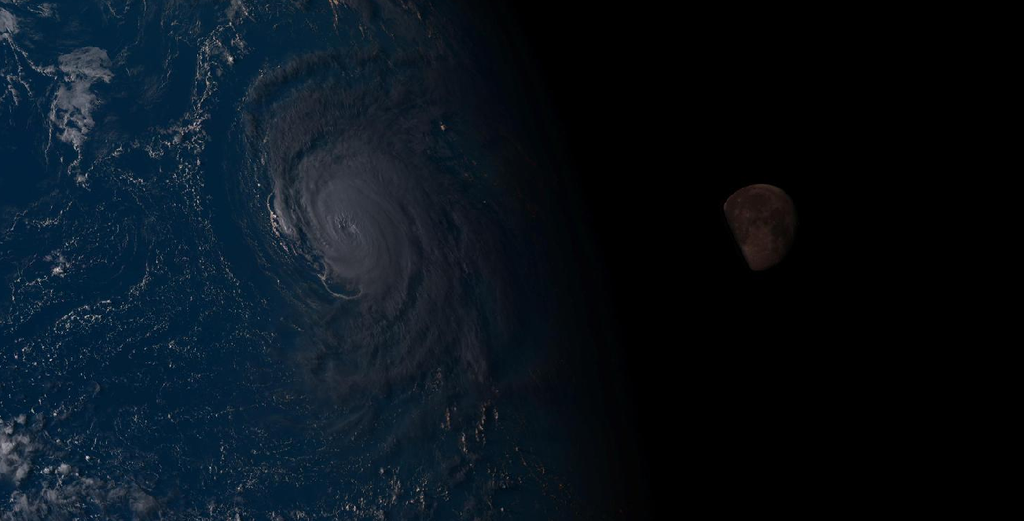

Once the individual band data is on the ground, it is used to make composite images referred to as "products". For example, the "GeoColor" product:

Notice half the planet is in night, and you can see clouds and city lights in the dark half - here the day and night parts of the image come from different bands. The night clouds probably come from the longwave radiation (outgoing heat). I think the city lights come from a single static image, possibly the 2012 Night Lights image from the Blue Marble project. So that's a composite image. Other products composite images in different ways, including compositing the longwave clouds with the Blue Marble land image to produce an image with no night time.
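A day/night composite of this kind can be sketched as a per-pixel blend driven by how high the sun is. This is not the actual GeoColor algorithm, which is considerably more involved. The function name `blend_day_night` and the 6 degree twilight width are assumptions made for illustration.

```python
def blend_day_night(day_rgb, night_rgb, sun_elevation_deg, twilight_deg=6.0):
    """Blend a daytime pixel and a night-time pixel by solar elevation.

    Well above the horizon the day pixel is used, well below it the night
    pixel is used, and within +/- twilight_deg the two are mixed linearly.
    """
    weight = (sun_elevation_deg + twilight_deg) / (2.0 * twilight_deg)
    weight = max(0.0, min(1.0, weight))  # clamp to the range 0..1
    return tuple(weight * d + (1.0 - weight) * n
                 for d, n in zip(day_rgb, night_rgb))


# Hypothetical pixel values: visible-band color by day, and an
# infrared-cloud plus city-lights rendering by night.
day_pixel = (0.4, 0.6, 0.8)
night_pixel = (0.0, 0.2, 0.0)

print(blend_day_night(day_pixel, night_pixel, sun_elevation_deg=30.0))
print(blend_day_night(day_pixel, night_pixel, sun_elevation_deg=-30.0))
print(blend_day_night(day_pixel, night_pixel, sun_elevation_deg=0.0))
```

The first call returns the day pixel, the second the night pixel, and the third a half-and-half mix at the terminator. That mixing of different sources is what makes the product a composite in the full sense.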

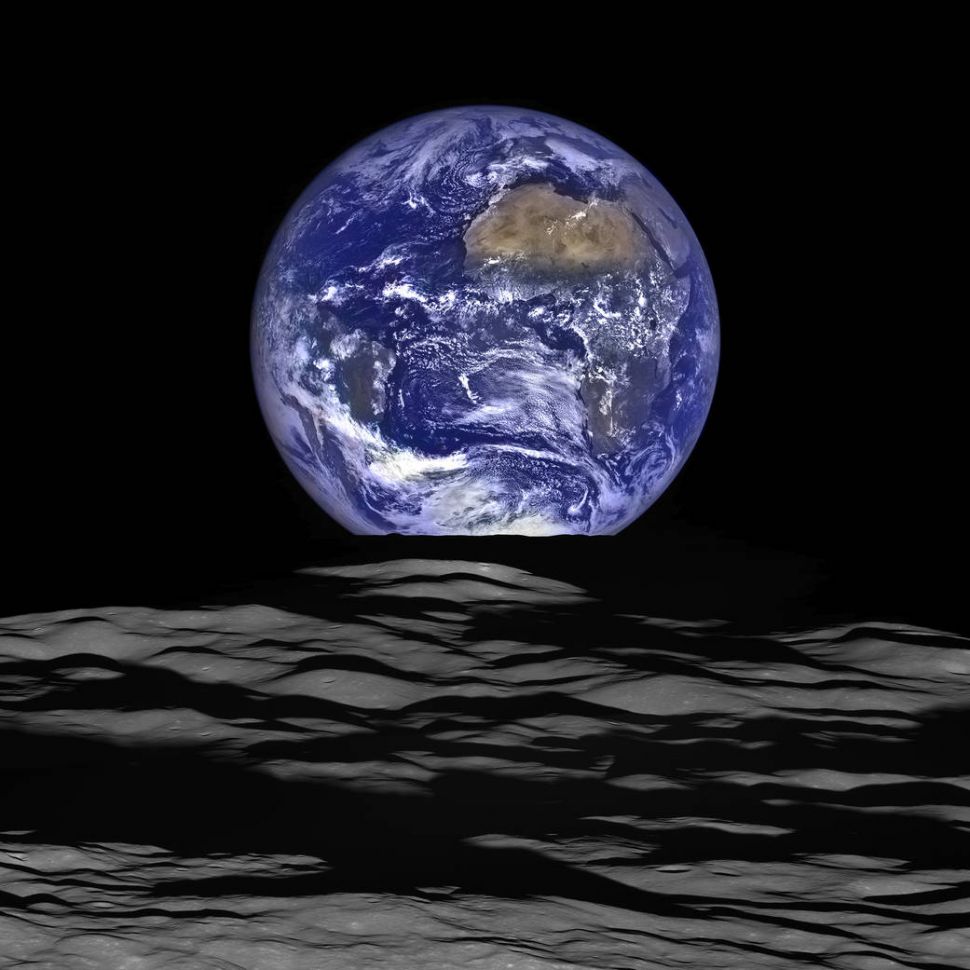

And if you really want a non-scanned, non-stitched, and certainly non-composite image, the best place is DSCOVR, with its EPIC (Earth Polychromatic Imaging Camera), which has a more conventional 2048x2048 pixel CCD, although it uses a filter wheel to get red, green, and blue bands. But a single band from EPIC is, in fact, a photo of the Earth from space by even the strictest definition (unless you only accept actual chemical film photos).