TheCholla

Senior Member

Your observations are based on assumptions that may or may not be true and that you definitely haven't proven to be true. Zaine should scrap this model and rebuild it from the ground up using the same process; I want to see if he gets the same results. You can align these images in hundreds of physically plausible ways, because both the camera and the platform are moving while recording.

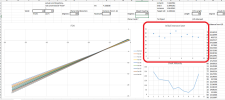

I would suggest scrapping it, running the numbers again using whatever process you used, and seeing if you get the same results. Right now your argument hinges on how you stitch these frames together to create a 2-D panorama of a three-dimensional space. I'm not sure why you think it matches better other than "I looked at it and I thought it matched better." Is that enough proof to overturn the old hypothesis? I'm not so sure.

The problem I point out in the Mick/LBF model is independent of the stitching; these are two different things.

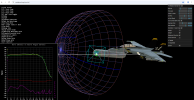

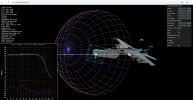

Their model makes a prediction that doesn't happen. The stitching problem is something else entirely. Their model is not a stitched image.

But stitching reveals why the "refinement to the Gimbal sim" made in their model does not seem justified, especially given the contradiction it leads to.

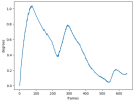

Going back to stitching: following a simple method (leveling the frames, then recreating the scene, which is distorted by design) points to a change in elevation angle. What would be a good formula for stitching? All the stitchings so far have been done by trying to get a continuous cloud line, but they all end up with some sort of warping, because it's a moving camera in a 3D scene. Here there is a clear method and justification behind what's been done (level the frames, then follow the clouds).
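To make the "level the frames, then follow the clouds" idea concrete, here is a minimal sketch, not Zaine's actual code. It assumes each frame's horizon tilt (in degrees) is already known, and that the offset for each frame is chosen by hand so a cloud feature lines up with the previous frame. The names `level_point` and `place_frame` are hypothetical. It only rotates and shifts points in 2-D, so it deliberately ignores perspective, which is where the warping mentioned above comes from.

```python
import math


def level_point(x, y, tilt_deg, cx=0.0, cy=0.0):
    """Rotate point (x, y) about the frame centre (cx, cy) by -tilt_deg,
    so a horizon tilted by tilt_deg ends up horizontal.
    Assumes math axes (y up); with image rows (y down) the sign flips."""
    t = math.radians(-tilt_deg)
    dx, dy = x - cx, y - cy
    return (cx + dx * math.cos(t) - dy * math.sin(t),
            cy + dx * math.sin(t) + dy * math.cos(t))


def place_frame(points, tilt_deg, offset):
    """Level a frame's points, then shift them by (ox, oy).
    The offset is assumed to be picked so the cloud line continues
    from the previous frame (the 'follow the clouds' step)."""
    ox, oy = offset
    leveled = (level_point(x, y, tilt_deg) for x, y in points)
    return [(lx + ox, ly + oy) for lx, ly in leveled]


# A horizon tilted by 10 degrees: after leveling, both ends sit at y = 0.
tilt = 10.0
left = (-1.0, -math.tan(math.radians(tilt)))
right = (1.0, math.tan(math.radians(tilt)))
print(level_point(*left, tilt), level_point(*right, tilt))
```

Any residual vertical drift of the cloud line between leveled frames then shows up in the offsets themselves, rather than being absorbed by rotating or warping the frames.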

I have a hard time seeing how you get horizontal clouds after leveling the frames like Zaine did, given the slanted clouds in there. Can you show an example?
