This discussion has been very helpful (at least for me) in understanding the artificial/real horizon mismatch. My take on all this as of now:

1) the whole image is expected to rotate CW, not only during banking episodes, but also from a gradual realignment of the image with the horizon indicator as the Az gets closer to 0 (the cos(Az) term)

2) the close flight path predicts a slow CCW rotation, with perspective changes as the object tilts upwards (assuming it rotates along the path). The glare hypothesis predicts no rotation other than pod roll, because the glare must be fixed in the camera frame.

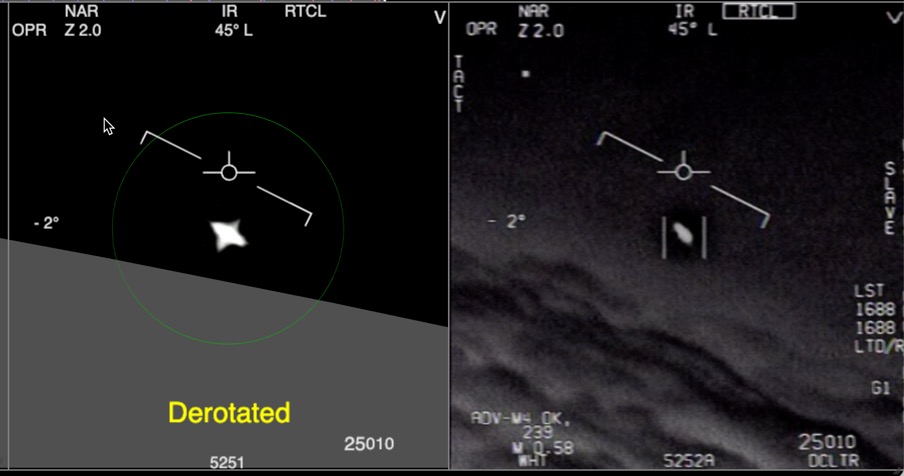

If we loop frames 11 and 372 (beginning/end of the white hot segment), we get this:

Defining the object's axis is ambiguous, but I think there is a discernible CW rotation here (though less than that of the clouds). Two options:

- it's glare, and this means the pod has rolled a bit CW

- it's a physical object that rotated CCW along the flight path, but a bit less than the CW rotation of the image. In other words, the two rotations counteracted each other, but the realignment of the image "won" by a bit.

Now, let's compare the black hot segment, before any "step" rotation (frames 401-720):

Again, the shape changes, so it's not clear whether the object's axis is exactly fixed or not. As before, there may be a bit of CW rotation here (look at the axis of the little spikes on each side). That could be due to a slight CW pod roll if it's glare, or to the object's CCW rotation along the flight path partly cancelling the CW realignment of the image.

My take on this:

- The 1st observable is ambiguous, because what we see is compatible with how a real physical object would look throughout the vid, once you account for the cancellation between the predicted CCW rotation of the object and the CW realignment of the image.

- Glare fits what we see, at least for the "fixed in camera frame" part, provided that there is a bit of CW pod roll over the first 24s.

Now, I agree it would be a strange coincidence if the object rotated CCW while the image realigned CW, making it appear fixed in the image.

But the close flight path predicts a tilt of ~10-15° CCW over the first 24s (see below, it starts aligned with the blue line), which is not far from the difference in rotation between the clouds and the object (clouds tilt by ~18°).
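To make the cancellation argument concrete, here is a rough back-of-the-envelope sketch in Python. It only uses the approximate numbers quoted above (clouds ~18° CW, object ~10-15° CCW over the first 24s). The function name and the sign convention (CW positive) are my own assumptions for illustration; nothing here is measured from the frames.

```python
# Rough sketch of the "two rotations cancel" argument.
# Assumed sign convention for this example: clockwise (CW) is positive, in degrees.

def apparent_rotation(image_cw_deg: float, object_ccw_deg: float) -> float:
    """Net on-screen rotation of the object (CW positive).

    image_cw_deg:  CW rotation of the whole image (tracked via the clouds).
    object_ccw_deg: CCW rotation of the object itself within the scene.
    """
    return image_cw_deg - object_ccw_deg

CLOUD_TILT_CW = 18.0  # approximate cloud tilt over the first 24 s (from the post)

# Predicted CCW tilt along the close flight path, low and high estimates.
for object_ccw in (10.0, 15.0):
    net = apparent_rotation(CLOUD_TILT_CW, object_ccw)
    print(f"object {object_ccw:.0f} deg CCW -> net {net:.0f} deg CW on screen")
```

With these inputs the object would show only a few degrees of net CW rotation on screen, noticeably less than the clouds, which is the kind of small residual described above. It says nothing about which hypothesis is right, since a slight CW pod roll with glare would give a similar residual.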

As you know, I favor the close flight path over the distant plane scenario, because it explains what the aviators report. I'm not discussing whether it is likely or not, I simply want to verify whether what we see in the vid may fit this scenario. Like I said before, it does for the flight path and the SA stop/reverse, and once you account for CCW rotation of the object along the flight path, it kinda does too. This can be another coincidence and another "trick of the glare". Or the object is in fact rotating in the scene. I keep an open mind.

To go further on this, I have asked Mick to add a 3D flying saucer model in Sitrec, as he did with an F-18 for FLIR1. Why not, after all? This way we can check whether the shape we see at the end (saucer-like) would match the shape we see earlier, based on the change in perspective along the close flight path that the 3D recreation predicts. If it's not a physical object but glare, there should be no match, or this would be another weird coincidence.

A loose estimate of how a physical object would rotate along the close flight path, if it tilts by 10-20°:

It'd be cool to check this in Sitrec, with precise changes in distance/angle of view included.

Thanks Mick for agreeing to do it, I think it's a fun thing to look at.