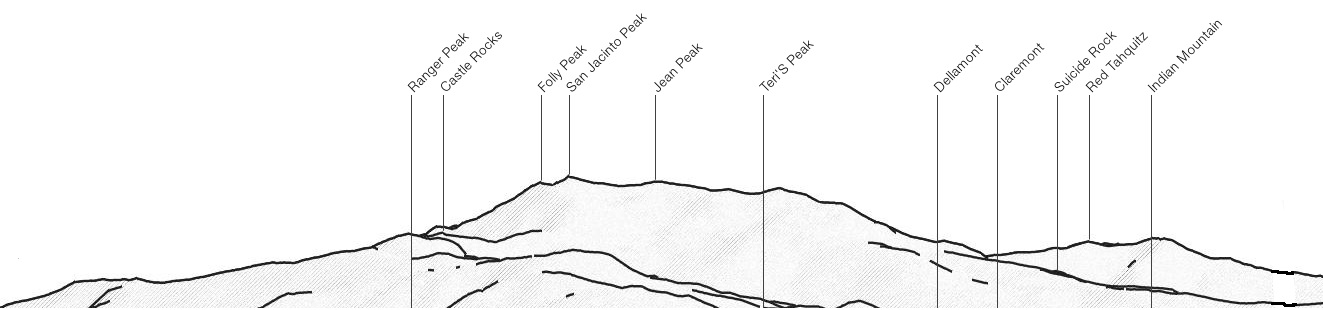

Rory


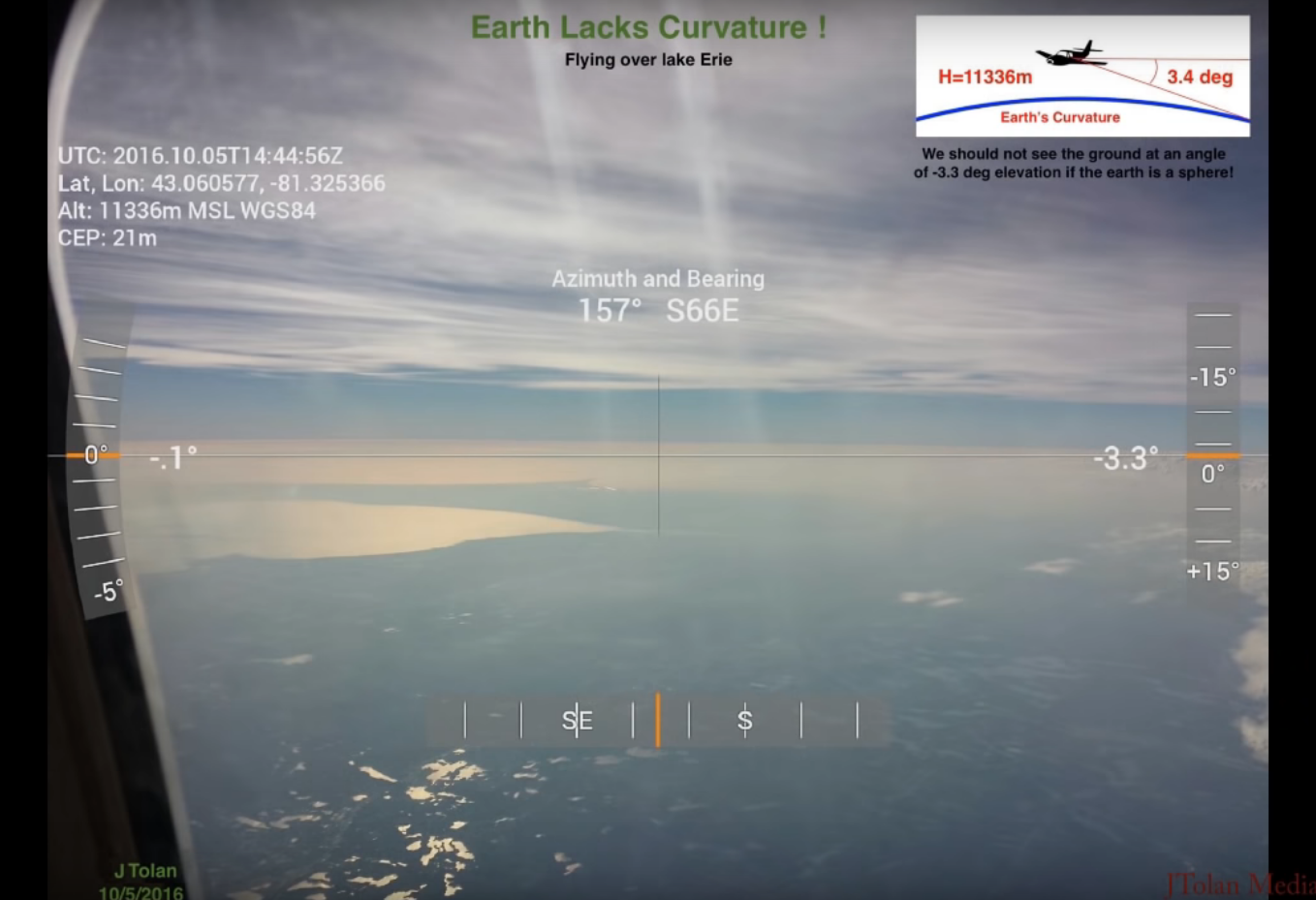

YouTube user JTolan Media 1 has uploaded a video which shows images of San Jacinto Peak in California taken from Malibu, 120 miles away. He believes this is proof that the Earth is flat:

Source: www.youtube.com/watch?v=7-pXWRn_wfk (start at around 9:48; before that is preamble)

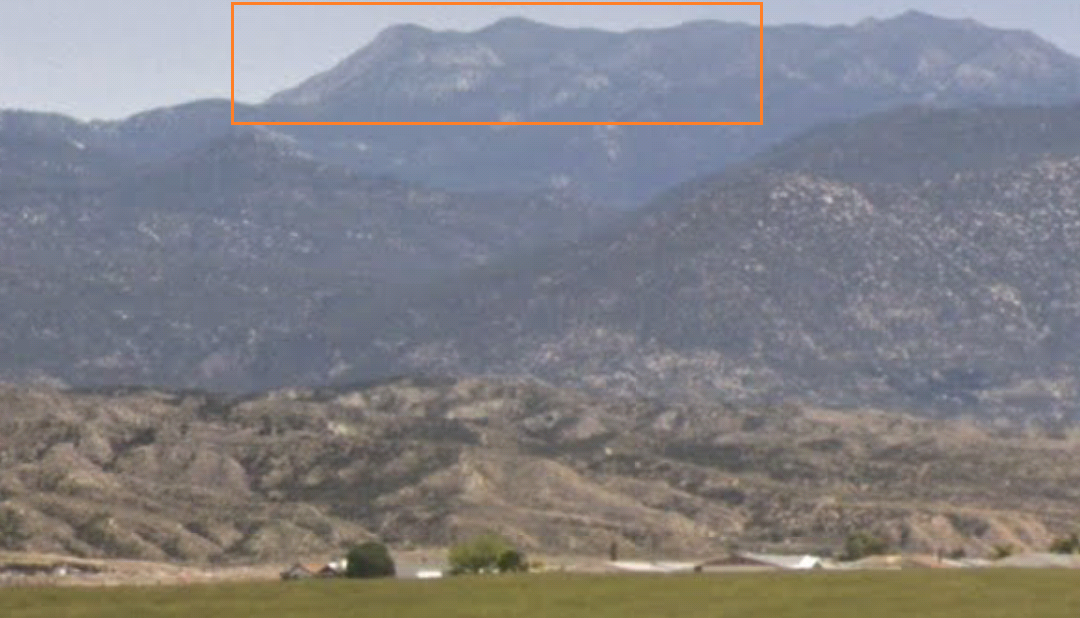

His main piece of evidence is this image here (contrast adjusted):

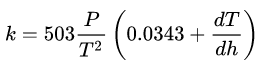

He gives his observation location as 34.032204, -118.702984 and his altitude as 150 feet. This seems accurate enough. So where has he gone wrong in believing he's seeing something that shouldn't be seen on a spherical Earth?

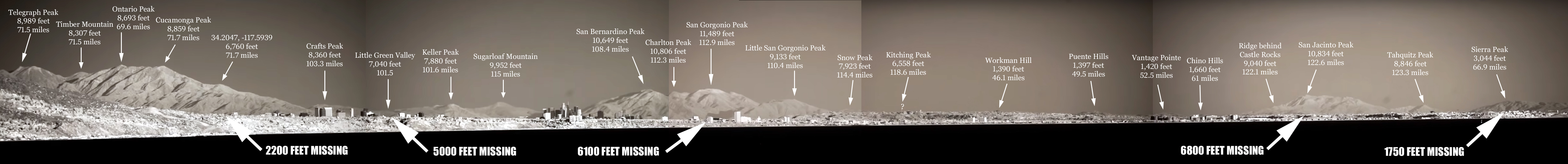
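As a rough check on the "120 miles" figure, here is a minimal great-circle (haversine) sketch. The observer coordinates are the ones JTolan gives; the summit coordinates for San Jacinto Peak (about 33.8147, -116.6794) and the mean Earth radius of 3,958.8 miles are assumptions added here, not taken from the video.

```python
import math

EARTH_RADIUS_MI = 3958.8  # assumed mean Earth radius, in miles


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))


# Observer location as given in the video
observer = (34.032204, -118.702984)
# Assumed approximate summit coordinates for San Jacinto Peak (not from the video)
san_jacinto = (33.8147, -116.6794)

distance = haversine_miles(*observer, *san_jacinto)
print(f"{distance:.1f} miles")
```

This comes out at roughly 117 miles. Since the hidden amount grows with distance, using a round 120 miles slightly overstates how much should be hidden.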

For those figures, the curve calculator predicts a hidden amount of 6,158 feet. San Jacinto Peak is 10,834 feet above sea level, which leaves a predicted visible amount of 4,676 feet.
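The 6,158-foot figure can be reproduced with the usual horizon-distance approximation, assuming standard atmospheric refraction modelled as an effective Earth radius of 7/6 of the real one. That refraction setting, and the 3,959-mile radius, are assumptions about what the calculator used; with no refraction the hidden amount comes out larger. This is a sketch, not the calculator's actual code.

```python
import math

EARTH_RADIUS_FT = 3959 * 5280  # assumed mean Earth radius (3,959 miles), in feet
REFRACTION_FACTOR = 7 / 6      # assumed "standard refraction" effective-radius multiplier


def hidden_height_ft(observer_height_ft, distance_mi, refraction=REFRACTION_FACTOR):
    """Approximate height hidden behind the curve at a given distance.

    Uses the small-angle approximations d0 = sqrt(2*R*h) for the distance to the
    horizon and hidden = (d - d0)**2 / (2*R) beyond it, with R scaled for refraction.
    """
    r_eff = EARTH_RADIUS_FT * refraction
    horizon_ft = math.sqrt(2 * r_eff * observer_height_ft)
    beyond_ft = max(distance_mi * 5280 - horizon_ft, 0.0)
    return beyond_ft ** 2 / (2 * r_eff)


PEAK_ELEVATION_FT = 10_834  # San Jacinto Peak, from the post

hidden = hidden_height_ft(150, 120)
visible = PEAK_ELEVATION_FT - hidden
print(f"hidden ≈ {hidden:,.0f} ft, visible ≈ {visible:,.0f} ft")
```

With 150 feet of eye height and 120 miles, this should land on about 6,158 feet hidden and 4,676 feet visible, matching the calculator.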

Likewise, PeakFinder has no problem with San Jacinto being visible from that spot:

Source: peakfinder.org

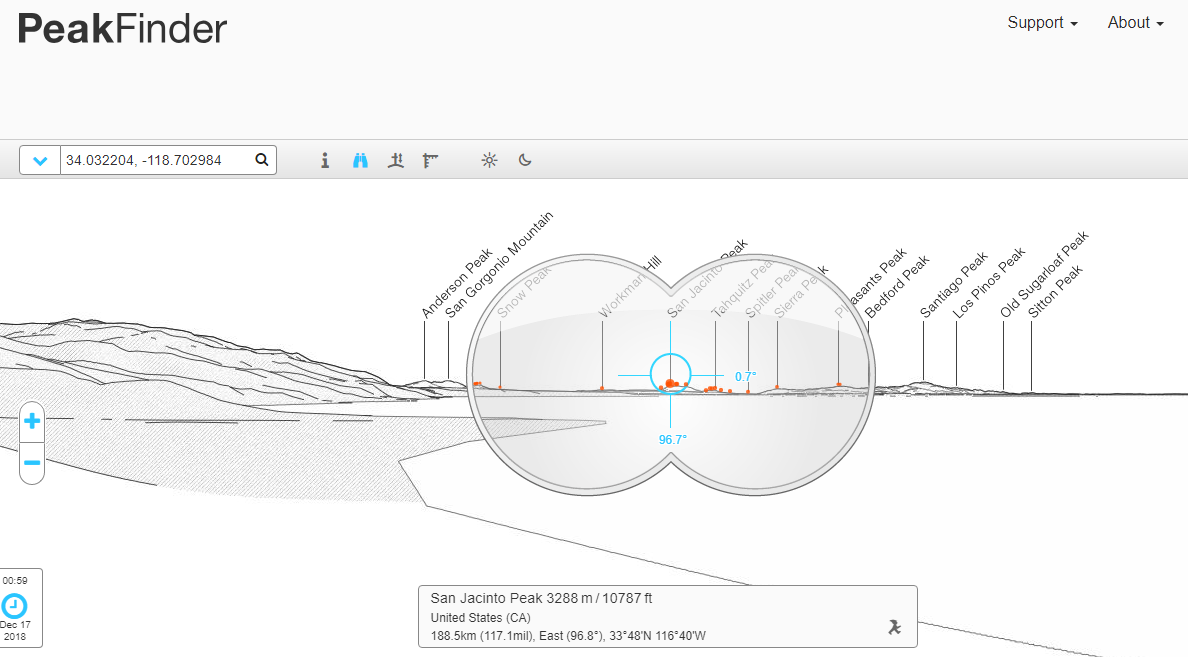

And this is a fuller image of that mountain range, taken from an elevated location much closer to San Jacinto, to give a picture of what the mountain actually looks like, free from obscuration:

Source: peakfinder.org

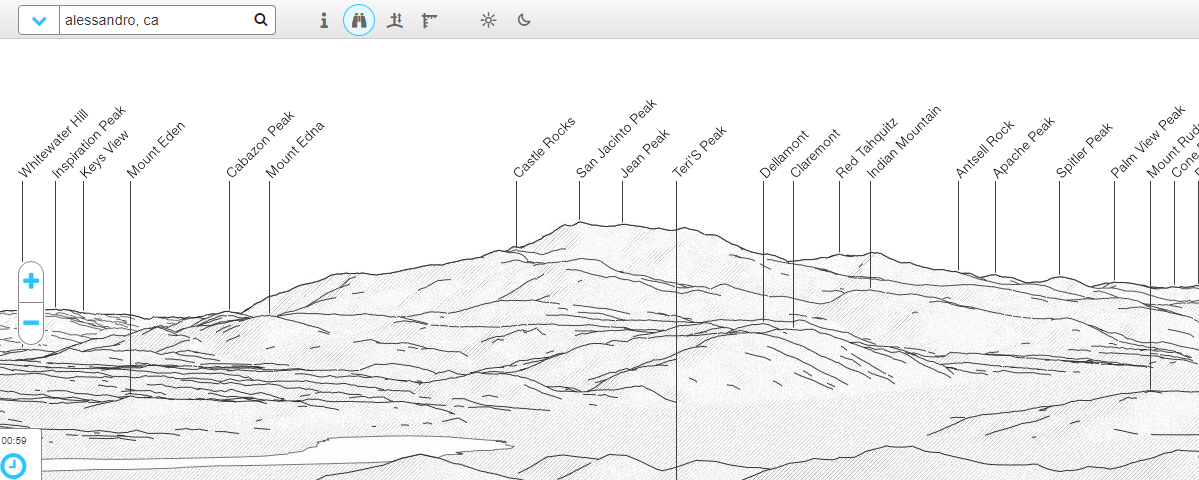

Indian Mountain, to the right of San Jacinto, summits at 5,790 feet. With around 6,158 feet predicted to be hidden, what we're seeing in JTolan's video should be the portion of the mountain range from a few hundred feet above that upwards. Basically, this:

As there are hills in the way, though, what we're actually seeing is merely the very top portion of the range, from around Castle Rocks, at 8,600 feet, upwards:

Working out which hills are obscuring the range and calculating the predicted visible amount would be the final step here. It's probably about 2,200 feet for the spherical Earth (10,834 − 8,600 = 2,234), but substantially more for the hypothetical flat one.
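The final step described above, finding where the sightline over an intervening hill meets the range, could be sketched as follows. It uses the same parabolic approximation (drop = d²/2R, with R assumed to be 3,959 miles scaled by 7/6 for refraction) and can switch the curvature off to model the flat case. The function names are hypothetical; only the 10,834-foot and 8,600-foot elevations come from the post, and no real hill heights or distances are assumed.

```python
import math

# Assumed effective Earth radius in feet: 3,959 miles scaled by 7/6 for standard refraction
EFFECTIVE_RADIUS_FT = 3959 * 5280 * 7 / 6
MI = 5280


def drop_ft(distance_ft, curved=True):
    """How far the surface at this distance sits below the observer's level plane."""
    return distance_ft ** 2 / (2 * EFFECTIVE_RADIUS_FT) if curved else 0.0


def lowest_visible_ft(eye_ft, hill_dist_ft, hill_elev_ft, target_dist_ft, curved=True):
    """Lowest elevation visible at the target when one hilltop grazes the sightline.

    The sightline is a straight line from the eye over the hilltop. On the sphere,
    both the hilltop and the target are lowered by drop_ft(); on the flat plane
    (curved=False) nothing is lowered.
    """
    hill_top = hill_elev_ft - drop_ft(hill_dist_ft, curved)
    slope = (hill_top - eye_ft) / hill_dist_ft
    sightline_at_target = eye_ft + slope * target_dist_ft
    return sightline_at_target + drop_ft(target_dist_ft, curved)


PEAK_FT = 10_834         # San Jacinto Peak, from the post
CASTLE_ROCKS_FT = 8_600  # Castle Rocks, from the post
print(PEAK_FT - CASTLE_ROCKS_FT)  # 2234: the "about 2,200 feet" estimate

# Consistency check: a sea-level "hill" exactly at the horizon should reproduce
# the plain hidden-height formula (d - d0)**2 / (2R).
eye = 150
horizon = math.sqrt(2 * EFFECTIVE_RADIUS_FT * eye)
target = 120 * MI
print(lowest_visible_ft(eye, horizon, 0, target))
print((target - horizon) ** 2 / (2 * EFFECTIVE_RADIUS_FT))
```

To apply it to the actual view, one would plug in the elevation of, and distance to, the ridge that really cuts off the range, then compare the `curved=True` and `curved=False` results against what the photo shows.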
