Giddierone

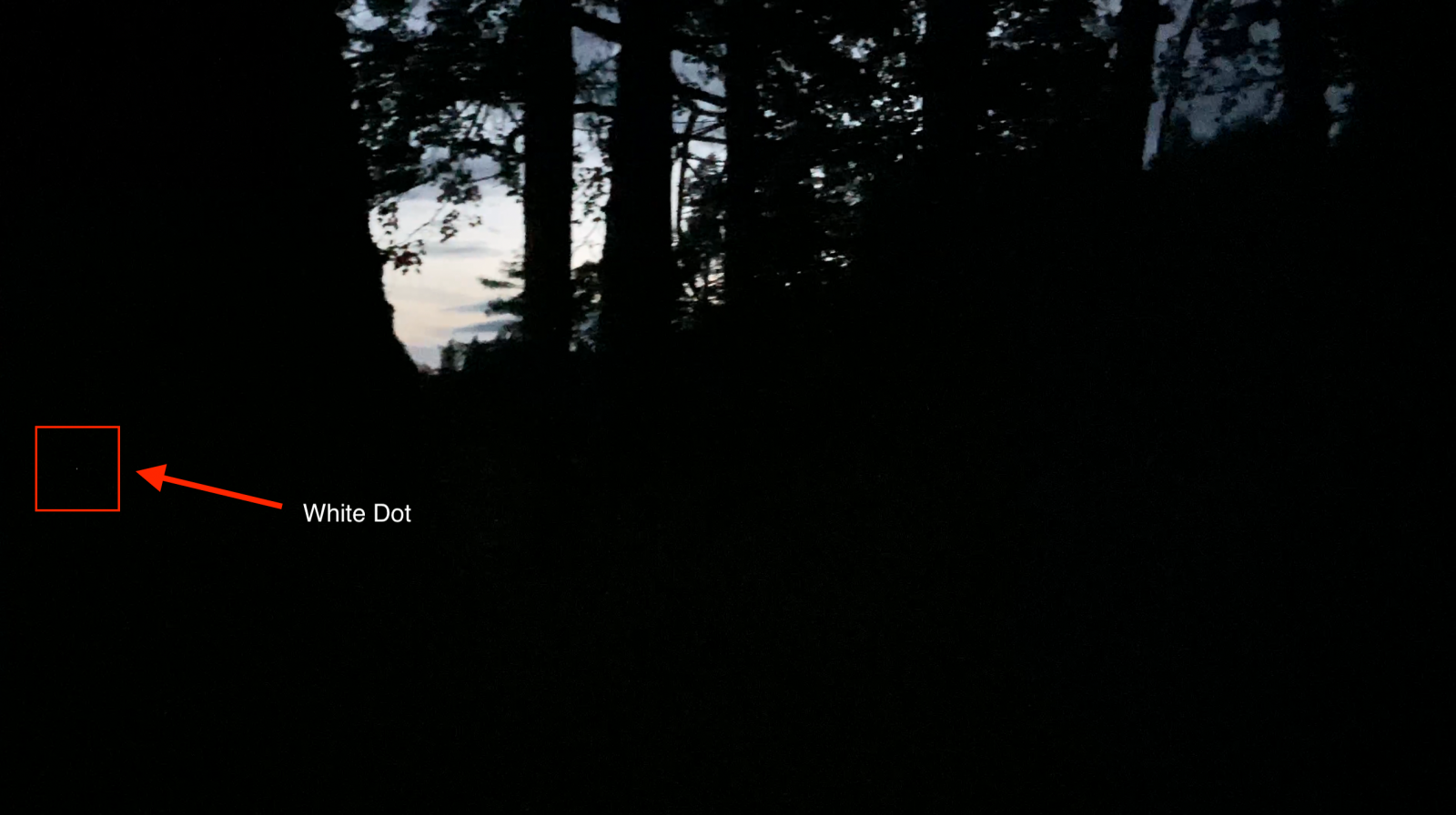

I’ve noticed a small white dot in the frame on several videos taken in very low light. I thought it was a stuck pixel, but it doesn’t stay in exactly the same place. It’s always roughly on the left side of the image, but it hops around the frame as I move the camera. At one point I walk past a tree that is only a few inches from the camera, yet the dot remains visible. There are lots of other flecks of white, but they are momentary; this one is visible for nearly the whole video.

Is there some moving part in the camera that creates this effect? (Or maybe something is broken; it's been dropped quite a few times.)

Could the lens be seeing part of its own mechanism, something related to low-light image capture or autofocus?

Could it be a reflection from the part of my phone case nearest the camera lens? (See photo.)

Again, this doesn't just appear in this video but in several taken with the same camera in similar lighting conditions.

Note: if you want to analyse the video, don’t get freaked out if you see a figure; my daughter was there wandering around amongst the trees.

The camera used is an iPhone SE (second generation).

This seems relevant because, if I were filming out of an aeroplane at night, I might mistake this for a Tic Tac UFO.

The unaltered video is here; the dot is only visible at the highest resolution:

Source: https://youtu.be/XZxC2-nttPI