When you take a photo or video of a bright light source (or even just look at it), the light often appears much bigger than it actually is. The phenomenon is well known, but not something I'd really thought about. Why does it happen? What determines the shape of this glare, and how big it is?

Glare comes up in several topics here on Metabunk, two in particular. First, there's the glare around the Sun, which has led some Flat Earth proponents to claim that the Sun gets visibly bigger during the day. In fact, it stays the same size and is just brighter.

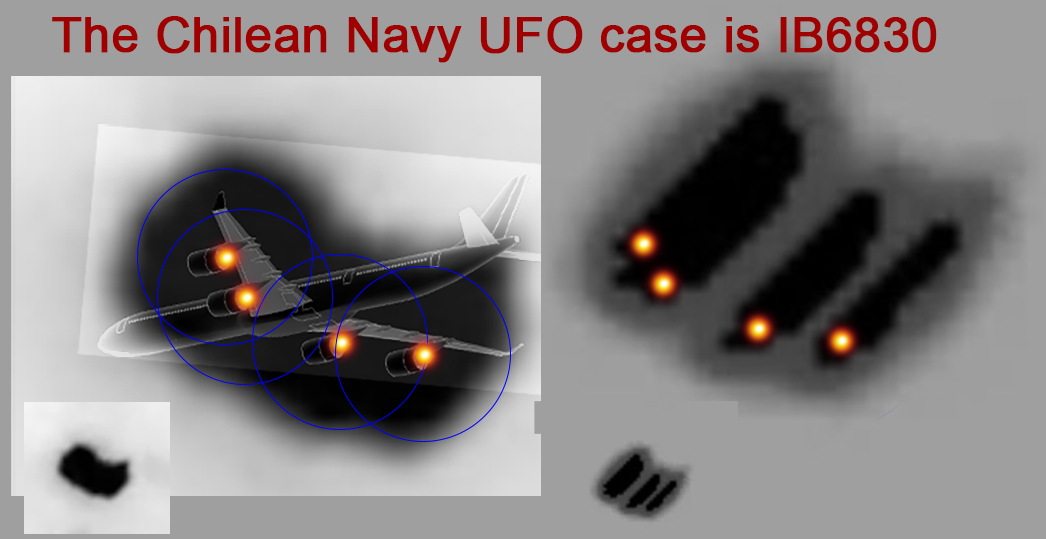

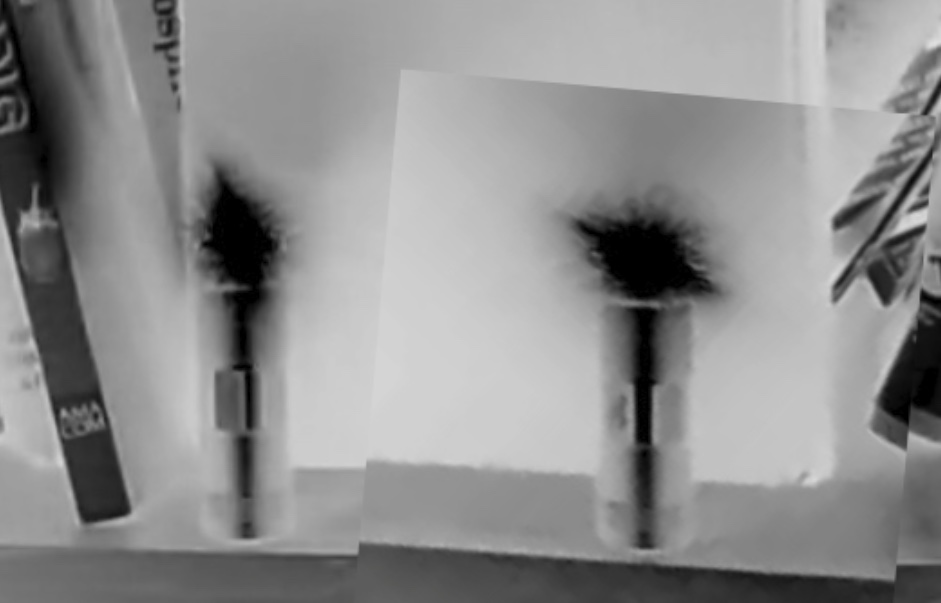
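A rough way to see why a brighter source looks bigger: the optics spread light from a point into a point spread function (PSF) with faint, wide tails, and every pixel that reaches the sensor's saturation level is drawn as full white. The sketch below assumes a Moffat profile for the PSF (a common stand-in, not a measured curve for any particular camera; `alpha` and `beta` are hypothetical values) and computes the radius of the blown-out disc for a source of fixed size at increasing brightness. The Sun is not a point, but roughly the same clipping of its faint outer light is what makes it look bigger as it gets brighter.

```python
import math

def apparent_radius(brightness: float, alpha: float = 1.0, beta: float = 2.0) -> float:
    """Radius of the blown-out disc around a point source, in PSF units.

    Assumes a Moffat PSF, I(r) = (1 + (r/alpha)**2) ** -beta, scaled so its peak
    equals `brightness` (in multiples of the sensor's saturation level). Pixels
    where brightness * I(r) >= 1 are clipped to full white, so the visible edge
    is where (1 + (r/alpha)**2) ** beta == brightness.
    """
    if brightness <= 1.0:
        return 0.0  # the peak never reaches saturation, so nothing is blown out
    return alpha * math.sqrt(brightness ** (1.0 / beta) - 1.0)

# Same point source, increasingly bright: only the saturated disc grows.
for b in (1, 4, 100, 10_000):
    print(f"brightness x{b}: saturated radius {apparent_radius(b):.2f}")
```

With these assumed parameters the radius goes from 0 (not saturated) to 1, 3, and about 10 PSF units as the brightness rises to 4x, 100x, and 10,000x the saturation level, while the source itself never changes size.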

Second, there are UFOs on thermal cameras. Jet engines are very hot, and the glare around them can be bigger than the plane itself, resulting in an odd shape. The classic example is the Chilean Navy UFO:

Here the actual "light" (the heat source) is quite small, but the glare is very large. The glares from multiple engines overlap and combine, obscuring the actual shape of the plane.
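The same clipping explains how separate engine glares can fuse into one shape. The sketch below uses an assumed Moffat PSF with hypothetical brightness and spacing values, samples a line through two equal sources, and reports the saturated stretches: at modest brightness there are two blobs, but once each glare's radius exceeds half the spacing they merge into one.

```python
def moffat(r: float, alpha: float = 1.0, beta: float = 2.0) -> float:
    """Assumed PSF profile, normalised to 1 at the centre."""
    return (1.0 + (r / alpha) ** 2) ** -beta

def saturated_segments(brightness, separation, step=0.01, extent=20.0):
    """Saturated intervals along the line joining two equal point sources.

    Sources sit at x = -separation/2 and x = +separation/2; `brightness` is each
    source's peak in multiples of the sensor's saturation level.
    """
    segments, start = [], None
    n = int(round(2 * extent / step)) + 1
    for i in range(n):
        x = -extent + i * step
        # The two glares add linearly before the sensor clips them.
        total = brightness * (moffat(x - separation / 2) + moffat(x + separation / 2))
        if total >= 1.0 and start is None:
            start = x
        elif total < 1.0 and start is not None:
            segments.append((start, x))
            start = None
    if start is not None:
        segments.append((start, extent))
    return segments

for b in (4, 1000):
    segs = saturated_segments(b, separation=6.0)
    print(f"brightness x{b}: {len(segs)} blob(s)", [(round(a, 2), round(c, 2)) for a, c in segs])
```

Nothing about the sources changes between the two runs except brightness; the merged blob is purely a product of glare plus clipping.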

That example was proven by radar tracks to be that particular plane. A hypothesized example is the "Gimbal" UFO video:

This has been discussed at some length in that thread and continues to be discussed on Facebook. Three things about the Gimbal video stand out: the rotation, the shape of the glare, and the "aura" around the object.

The rotation and the aura are discussed at length in the other thread. Both can be seen in this animation by @igoddard:

The aura is simply something you get around any bright glare in an IR image. It does not indicate anything of interest.

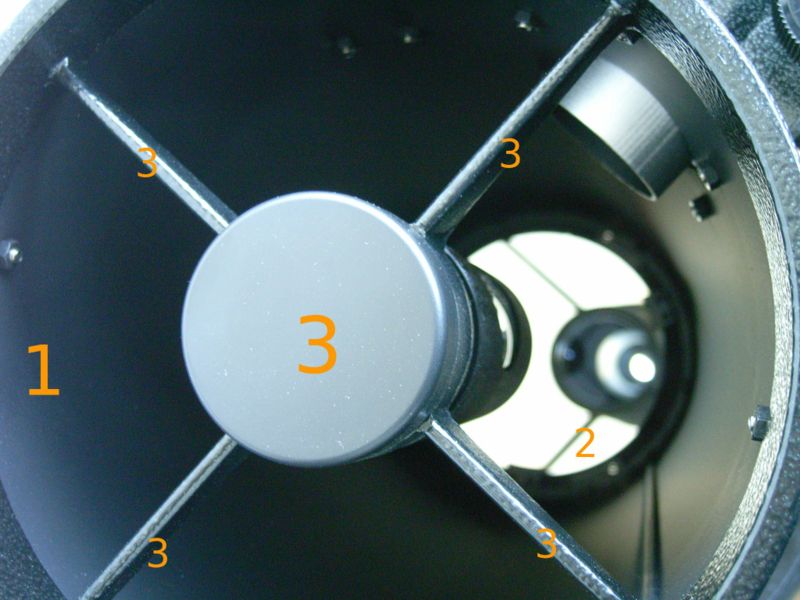

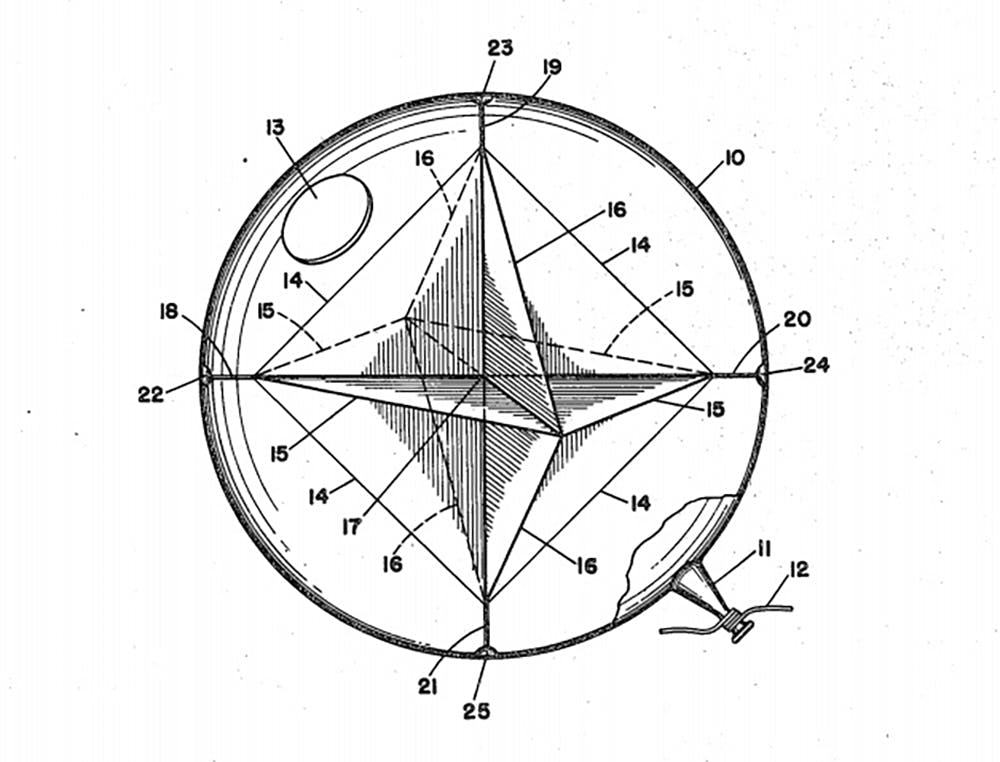

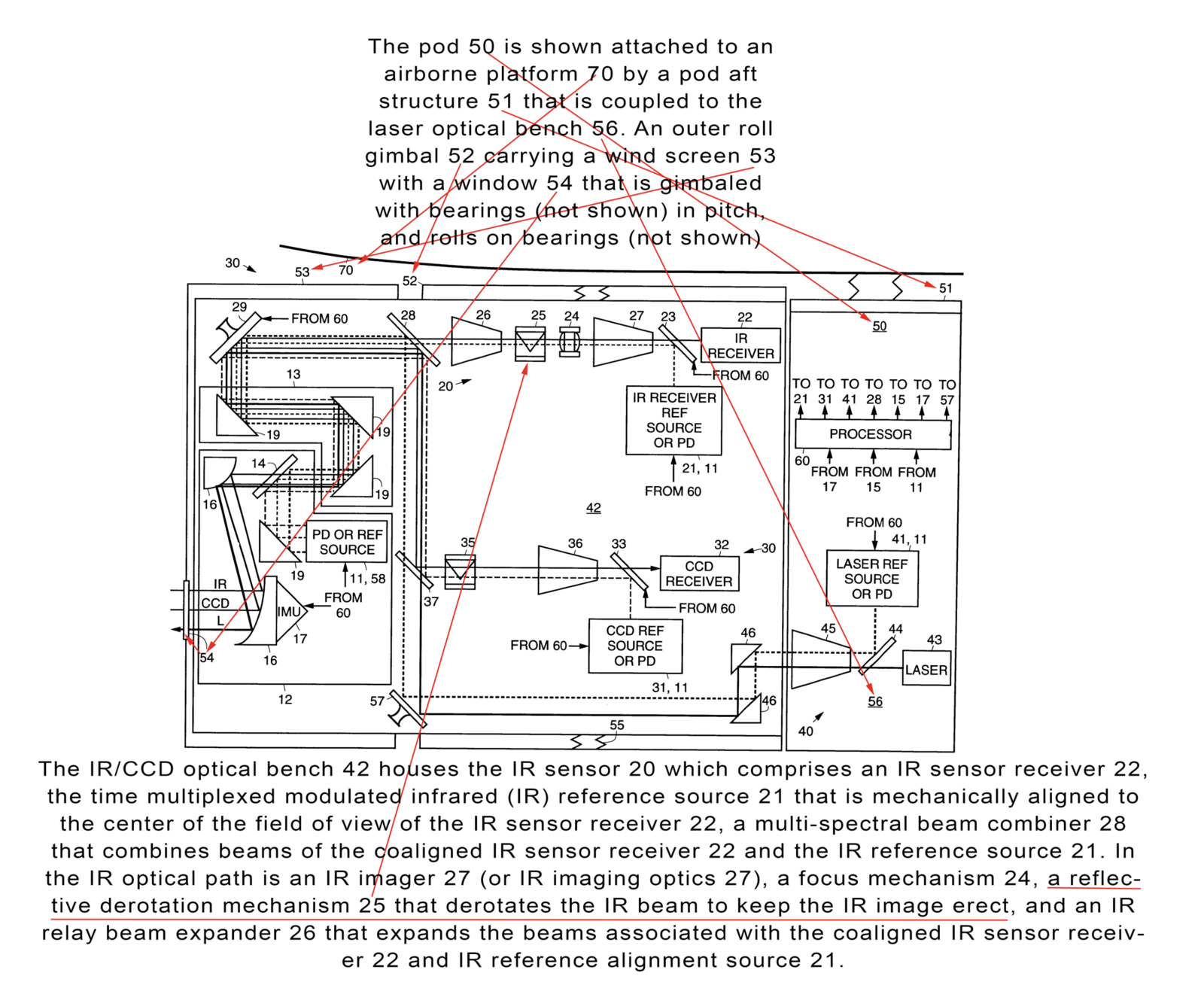

The rotation of the glare is due to the camera rotating relative to the horizon. The image is then derotated to keep the horizon level with the plane. Since the glare is an artifact of the optical system, it rotates relative to the horizon even when nothing else is rotating. This derotation is described in the patent for the ATFLIR system used to record the Gimbal video:

This derotation, and the potential for a rotating glare/flare, is discussed here. It's not really disputed, but it can be difficult to explain. However, it's clear that rotating glares can happen.
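The geometry of that argument can be sketched in 2-D. This is a simplified model, assuming the glare's long axis is fixed relative to the sensor and that derotation is a pure image rotation by the camera's roll angle; the real ATFLIR does this optically, as the patent describes, and the function names here are only illustrative.

```python
import math

def rotate(v, angle_deg):
    """Rotate a 2-D vector counter-clockwise by angle_deg."""
    a = math.radians(angle_deg)
    x, y = v
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a))

def angle_of(v):
    """Direction of a 2-D vector in degrees, rounded to hide floating-point noise."""
    return round(math.degrees(math.atan2(v[1], v[0])), 9) + 0.0  # + 0.0 turns -0.0 into 0.0

def displayed_angles(camera_roll_deg, glare_axis_in_sensor_deg=0.0):
    """Return (horizon_angle, glare_angle) as they appear after derotation."""
    # Rolling the camera by +roll makes the world horizon appear at -roll on the sensor.
    horizon_on_sensor = rotate((1.0, 0.0), -camera_roll_deg)
    # The glare is produced inside the optics, so its axis stays put on the sensor.
    glare_on_sensor = rotate((1.0, 0.0), glare_axis_in_sensor_deg)
    # Derotation turns the whole image back by +roll to level the horizon.
    return (angle_of(rotate(horizon_on_sensor, camera_roll_deg)),
            angle_of(rotate(glare_on_sensor, camera_roll_deg)))

for roll in (0, 20, 45):
    h, g = displayed_angles(roll)
    print(f"roll {roll} deg -> horizon {h:.1f} deg, glare axis {g:.1f} deg")
```

In this model the horizon comes out level for any roll, while the glare's axis turns by exactly the roll angle, so a rotating glare needs nothing in the scene to rotate.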

Glare or Flare

With the rotation and aura explained, the questions remaining about the Gimbal video are "why is it shaped like that?" and "what is the underlying object?"

The first question to ask is: is it even a glare? Perhaps this is instead the actual shape of the object?

I think not, largely because of the way the shape changes. Is the above the true shape, or is it this earlier shape?

Or the more dramatically different "white-hot" image at the start?

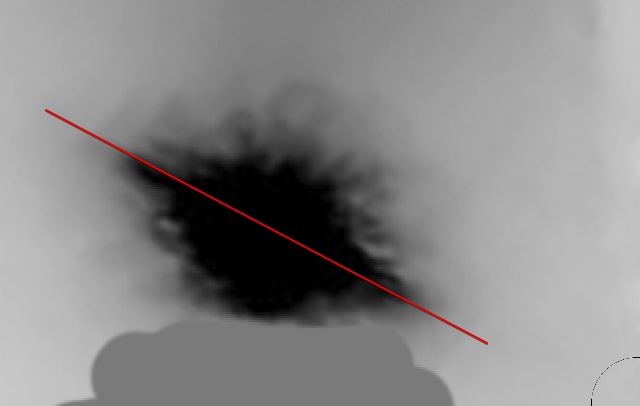

But perhaps the biggest problem with the glare hypothesis is: how do we get a glare with this shape? In the Chilean Navy case, the shape of the glare came from the configuration of the engines. But here the shape rotates, which suggests it is an artifact of the optical system. Can we get saucer-shaped glares that rotate? Yes, we can:

This was also discussed in the other thread. A flashlight with the reflector removed was used as a bright light source. Images were taken with an infrared camera at different angles, and the result was a saucer-shaped glare with a distinct long axis that rotated with the camera.
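To put a number on "a distinct long axis", one option is the standard image-moment estimate of a blob's orientation: threshold the frame, take the second-order central moments of the bright pixels, and read off the principal axis. The sketch below works on plain Python lists; `synthetic_glare` is a hypothetical stand-in for a real frame, and the threshold is an assumption you would tune per video.

```python
import math

def blob_orientation(image, threshold):
    """Orientation and elongation of the pixels at or above `threshold`.

    `image` is a list of rows; x runs along a row and y down the rows, so
    positive angles are clockwise on screen. Returns (angle_deg, elongation),
    where elongation is the ratio of long-axis to short-axis spread.
    """
    pts = [(x, y) for y, row in enumerate(image) for x, v in enumerate(row) if v >= threshold]
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two bright pixels")
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second-order central moments (the covariance of the bright pixels).
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    # Eigenvalues of the covariance matrix give the spread along each axis.
    half_diff = math.hypot((mu20 - mu02) / 2, mu11)
    mean = (mu20 + mu02) / 2
    lam_long, lam_short = mean + half_diff, mean - half_diff
    elongation = math.sqrt(lam_long / lam_short) if lam_short > 0 else math.inf
    return math.degrees(angle), elongation

def synthetic_glare(size, a, b, angle_deg):
    """Hypothetical test frame: a filled ellipse with semi-axes a, b, rotated by angle_deg."""
    t = math.radians(angle_deg)
    c = size // 2
    img = []
    for y in range(size):
        row = []
        for x in range(size):
            dx, dy = x - c, y - c
            u = dx * math.cos(t) + dy * math.sin(t)   # coordinate along the long axis
            v = -dx * math.sin(t) + dy * math.cos(t)  # coordinate along the short axis
            row.append(1.0 if (u / a) ** 2 + (v / b) ** 2 <= 1.0 else 0.0)
        img.append(row)
    return img

angle, elongation = blob_orientation(synthetic_glare(101, 40, 10, 30), threshold=0.5)
print(f"long axis at {angle:.1f} deg, elongation {elongation:.2f}")
```

Run on each frame of a rotating-camera test, this would give the glare angle over time, which could then be compared with the camera's roll.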

This is nothing new, but with the renewed discussion on Facebook I set out to investigate further what actually produces these shapes, and whether the Gimbal shape is plausible.

For one experiment, I set up a bright light and filmed it with a camera that I rotated by hand, then derotated the footage in software (the equivalent of the ATFLIR's mirror derotator).

The result again was a saucer-shaped glare that rotated.
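The software derotation step can be sketched as rotating each frame back by the horizon tilt measured in that frame. This is a minimal nearest-neighbour version on plain lists, not the tool used for the experiment; the per-frame tilt values are assumed to come from elsewhere (for example, tracking the horizon by hand), and a real workflow would more likely use a video editor or an image library.

```python
import math

def rotate_image(image, angle_deg, fill=0.0):
    """Rotate a 2-D list about its centre by angle_deg (nearest-neighbour sampling)."""
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: find the source pixel that lands on (x, y).
            dx, dy = x - cx, y - cy
            sx = round(cos_t * dx + sin_t * dy + cx)
            sy = round(-sin_t * dx + cos_t * dy + cy)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out

def derotate(frames, horizon_tilt_deg):
    """Undo each frame's measured horizon tilt so the horizon stays level."""
    return [rotate_image(frame, -tilt) for frame, tilt in zip(frames, horizon_tilt_deg)]
```

In the raw frames the glare stays fixed relative to the sensor while the background tilts; after derotation the background is level and the glare turns instead.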

Now, this is still some way from replicating the Gimbal glare shape, but it's a start. Given the complexity of the gimbal's optical path, it seems plausible that a more complex shape would emerge.

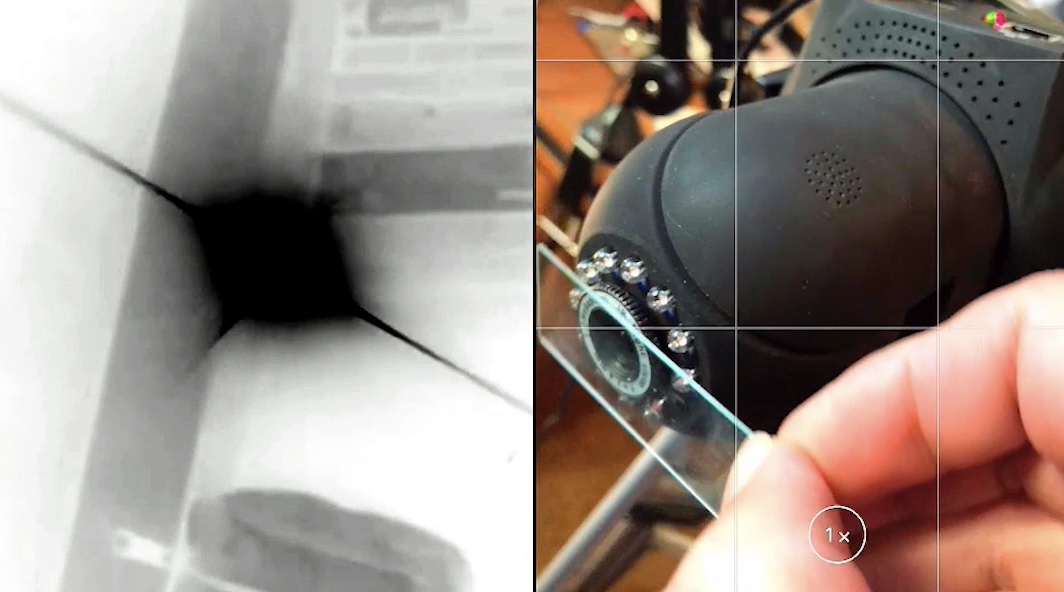

One thing that struck me was that I consistently got this saucer shape with different cameras. My initial idea was that the glare was due to streaks on the glass at the front of the ATFLIR. Streaks like that are probably what lead to rotating glare like this:

http://www.military.com/video/opera...trikes/f-18-takes-out-insurgents/658386321001

Streaky glass tends to give long streaks of flare like these, though, which is not what we see in Gimbal. While I did replicate a rotating saucer-shaped glare by rotating streaked glass, it still had some long streaks.

Note, though, that it also had some short streaks, which roughly match the short axis of the Gimbal shape:

So it's possible that the long streaks were just too thin to be recorded in the video.
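One way to picture the "too thin to be recorded" idea: if the recording has coarser pixels than the optics resolve, a one-pixel-wide streak gets averaged with its dark surroundings, and its brightness drops roughly by the ratio of widths. The sketch below models the coarser sensor as simple block averaging, with a hypothetical streak brightness and a hypothetical noise floor; it illustrates the idea and does not model the ATFLIR's actual sensor or video compression.

```python
def block_average(image, k):
    """Downsample a 2-D list by averaging non-overlapping k x k blocks."""
    h, w = len(image) // k, len(image[0]) // k
    return [[sum(image[y * k + j][x * k + i] for j in range(k) for i in range(k)) / (k * k)
             for x in range(w)] for y in range(h)]

def make_streak_image(size=32, column=5, streak_level=0.2):
    """High-res frame with a 1-pixel-wide vertical streak (values relative to saturation)."""
    return [[streak_level if x == column else 0.0 for x in range(size)] for _ in range(size)]

NOISE_FLOOR = 0.05  # hypothetical: anything dimmer is lost in sensor noise / compression

hi_res = make_streak_image()
lo_res = block_average(hi_res, 8)
hi_peak, lo_peak = max(map(max, hi_res)), max(map(max, lo_res))
print("full resolution peak:", hi_peak, "visible:", hi_peak > NOISE_FLOOR)
print("after 8x8 averaging:", round(lo_peak, 4), "visible:", lo_peak > NOISE_FLOOR)
```

Here the streak's peak falls from 0.2 to 0.025 of saturation, dropping below the assumed noise floor even though the light is still there.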

This whole issue kind of got lost in the other thread, so I'd like to revive (and hopefully resolve) it here. What could cause this rotating glare shape? Can it be replicated?

For reference, here are the three notable things about the Gimbal video in more detail:

- The rotation, which seems very unlike a normal aircraft.

- The shape of the glare: a flattened oval with protuberances.

- The "aura" around the object (which is actually a darkening, as the video is black-hot).

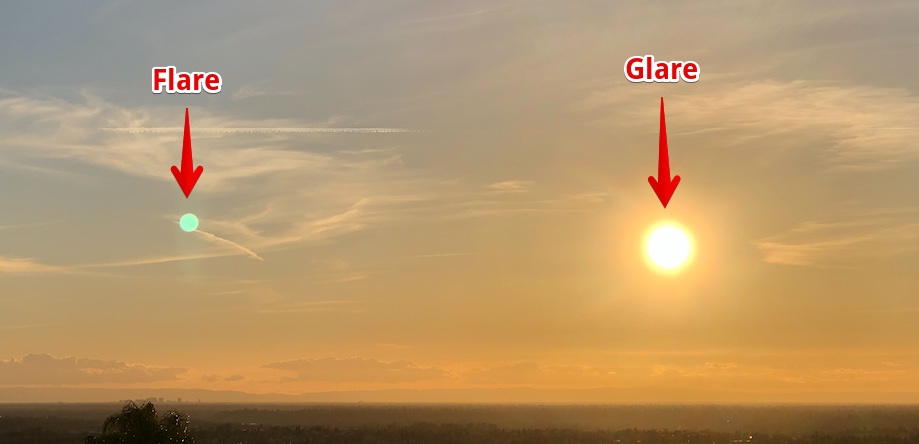

A note on terminology: I'd previously used "flare" and "glare" interchangeably. However, "flare" is more commonly associated with "lens flare", a reflection inside the lens that produces multiple images of the light, often as a line of circles, or sometimes as a single reflection. To avoid confusion, I'm going to try to stick to "glare", meaning an enlarged and distorted shape of the light viewed directly.
