I think we've been too naïve and old-fashioned. I've been looking at info on the Internet specific to modern low altitude digital aerial photography.

I think this is an artifact that was produced by the radiometric correction of images.

Radiometric correction is also used for satellite images and so on.

If I'm getting this right, this is a method for correcting the brightness of objects in the entire image simultaneously.

But radiometric correction of images is an evolving technology, and it can be haywired by reflectance effects from objects at the anti-solar point. (And maybe by Rayleigh scattering from atmospheric haze at the camera's anti-solar point in each individual frame? I'm not sure.)

This can produce an artifact known as a hotspot due to bidirectional reflectance effects.

Now bear with me, because this spot was not at the camera's anti-solar point. Still, I think this is all apropos, because it demonstrates that radiometric correction can be haywired by unexpected reflectance effects, especially since this is an evolving method and this image may be the result of a particular method used only in the 2016 timeframe.

What's bidirectional reflectance? It's not just about what things look like at the anti-solar point of an observer.

https://snr.unl.edu/agmet/brdf/brdf-definition.asp

The reflectance from a surface depends upon the direction of incident radiation (and its characteristics), the surface radiative properties and the direction from which the surface is being viewed.

In vegetative canopies, the distribution of leaves, the amount of leaf material and viewed fraction of leaf material in the direction of the sun and view affect the reflectance.

https://www.umb.edu/spectralmass/terra_aqua_modis/modis

The BRDF is the "Bidirectional Reflectance Distribution Function." It gives the reflectance of a target as a function of illumination geometry and viewing geometry. The BRDF depends on wavelength and is determined by the structural and optical properties of the surface, such as shadow-casting, multiple scattering, mutual shadowing, transmission, reflection, absorption, and emission by surface elements, facet orientation distribution, and facet density.
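To make "illumination geometry and viewing geometry" a bit more concrete: the hotspot is usually described in terms of the phase angle, the angle between the direction to the sun and the direction to the sensor as seen from the target. Here's a minimal Python sketch using ordinary spherical geometry. The function name and the angle conventions (zenith angles and azimuths in degrees, azimuth of the sun and of the sensor as seen from the target) are my own assumptions, not something from the sources quoted here. A phase angle near 0° is the hotspot geometry.

```python
import math

def phase_angle_deg(sun_zenith, sun_azimuth, view_zenith, view_azimuth):
    """Angle in degrees between the direction to the sun and the direction to
    the sensor, both as seen from the target. Inputs are in degrees; the
    azimuths are where the sun and the sensor sit as seen from the target."""
    sz, sa = math.radians(sun_zenith), math.radians(sun_azimuth)
    vz, va = math.radians(view_zenith), math.radians(view_azimuth)
    # Dot product of the two unit direction vectors.
    cos_g = (math.cos(sz) * math.cos(vz)
             + math.sin(sz) * math.sin(vz) * math.cos(sa - va))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_g = max(-1.0, min(1.0, cos_g))
    return math.degrees(math.acos(cos_g))

# Sensor on the same side as the sun, looking along the sun's rays:
print(phase_angle_deg(30, 180, 30, 180))  # ~0, the hotspot geometry
# Sensor on the opposite side, looking back toward the sun:
print(phase_angle_deg(30, 180, 30, 0))    # ~60
```

The reflectance a BRDF gives depends on all four of these angles, not just on the phase angle, but the phase angle is the quickest way to see whether a pixel is anywhere near the hotspot.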

What's the anti-solar point?

https://personal.math.ubc.ca/~cass/courses/m309-03a/m309-projects/endersby/Antisolarpoint.html

If we look at the ground on a sunny day, the shadow of our head marks the point called the antisolar point, 180° away from the sun. If the sun is in the sky, the antisolar point is below the horizon. If the sun has set, the antisolar point is above the horizon.

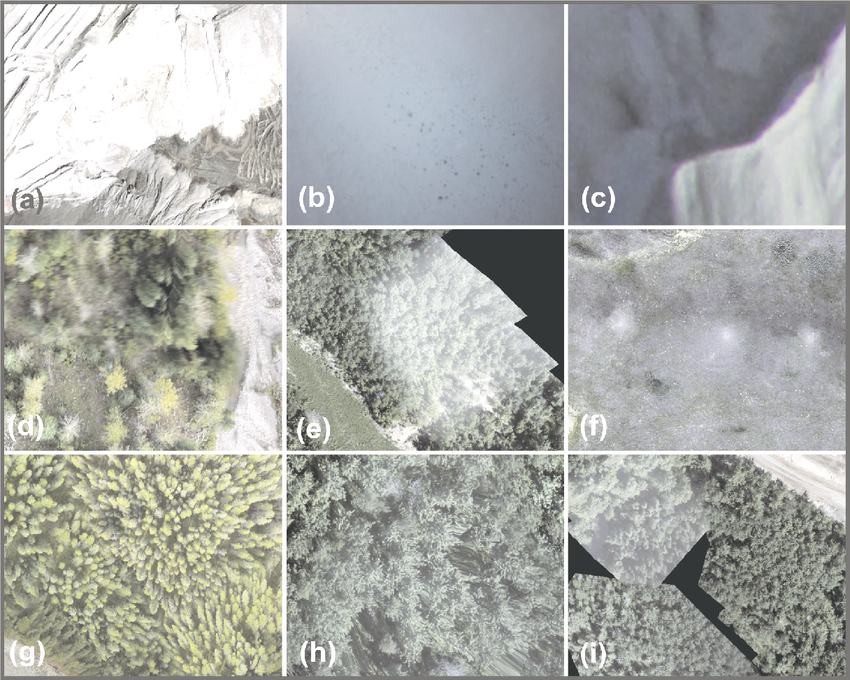

https://www.researchgate.net/figure...te-sensing-a-saturated-image-b_fig5_270726348

The recent development and proliferation of unmanned aircraft systems (UASs) has made it possible to examine environmental processes and changes occurring at spatial and temporal scales that would be difficult or impossible to detect using conventional remote sensing platforms. This review article highlights new developments in UAS-based remote sensing, focusing mainly on small UASs (<25 kg). Because this class is generally less expensive and more versatile than larger systems the use of small UASs for civil, commercial, and scientific applications is expected to expand considerably in the future. To highlight different environmental applications, we provide an overview of recent progress in remote sensing with small UASs, including photogrammetry, multispectral and hyperspectral imaging, thermal, and synthetic aperture radar and LiDAR. We also draw on the literature and our own research experience to identify some key research challenges, including limitations of the current generation of platforms and sensors, and the development of optimal methodologies for processing and analysis.

(f) hotspots on mosaic due to bidirectional reflectance effects

Another commonly seen illumination effect is the presence of image hotspots, where a bright spot appears in the image (Fig. 6). These are due to the effects of bidirectional reflectance, which is dependent on the relative position of the image sensor and the sun (Hakala et al. 2010; Grenzdörffer and Niemeyer 2011; Laliberte et al. 2011). Hotspots occur at the antisolar point, which is the point where the line defined by the sensor position and the sun intersects with the ground.
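That last sentence can be turned into simple geometry. For a sensor at height h above flat ground and a sun at elevation e, the sun-sensor line keeps going down at the same angle and hits the ground h / tan(e) away from the point directly below the sensor, on the side opposite the sun. A minimal Python sketch, assuming flat ground, a nadir-pointing camera and a circular field of view; the function names are made up, and the 100 m height and 35° half field of view in the usage lines are invented numbers, not the parameters of whatever camera took this image:

```python
import math

def antisolar_ground_point(height_m, sun_elevation_deg, sun_azimuth_deg):
    """Return (east, north) offset in metres from the point directly below the
    sensor to where the sun-sensor line hits flat ground.
    Azimuth is measured clockwise from north."""
    if sun_elevation_deg <= 0:
        raise ValueError("sun must be above the horizon")
    distance = height_m / math.tan(math.radians(sun_elevation_deg))
    # The antisolar direction is 180 degrees away from the sun's azimuth.
    anti_azimuth = math.radians((sun_azimuth_deg + 180.0) % 360.0)
    return distance * math.sin(anti_azimuth), distance * math.cos(anti_azimuth)

def antisolar_in_nadir_frame(sun_elevation_deg, half_fov_deg):
    """True if the antisolar point falls inside the (assumed circular)
    half field of view of a camera pointing straight down."""
    off_nadir = 90.0 - sun_elevation_deg
    return off_nadir < half_fov_deg

# Hypothetical flight: 100 m up, sun due south at 45 degrees elevation.
print(antisolar_ground_point(100, 45, 180))  # roughly (0, 100): 100 m north of nadir
print(antisolar_in_nadir_frame(45, 35))      # False: hotspot falls outside the frame
print(antisolar_in_nadir_frame(60, 35))      # True
```

For a nadir camera the antisolar point sits 90° − e off nadir, so under these assumptions it only enters the frame when the sun is high enough for that angle to be smaller than the half field of view.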

Quality Assessment of the Bidirectional Reflectance Distribution Function for NIR Imagery Sequences from UAV

Received: 30 July 2018 / Revised: 19 August 2018 / Accepted: 21 August 2018 / Published: 24 August 2018

https://www.mdpi.com/2072-4292/10/9/1348

Imaging from low altitudes is nowadays commonly used in remote sensing and photogrammetry. More and more often, in addition to acquiring images in the visible range, images in other spectral ranges, e.g., near infrared (NIR), are also recorded. During low-altitude photogrammetric studies, small-format images of large coverage along and across the flight route are acquired that provide information about the imaged objects. The novelty presented in this research is the use of the modified method of the dark-object subtraction technique correction with a modified Walthall’s model for correction of images obtained from a low altitude. The basic versions of these models have often been used to radiometric correction of satellite imagery and classic aerial images. However, with the increasing popularity of imaging from low altitude (in particular in the NIR range), it has also become necessary to perform radiometric correction for this type of images. The radiometric correction of images acquired from low altitudes is important from the point of view of eliminating disturbances which might reduce the capabilities of image interpretation. The radiometric correction of images acquired from low altitudes should take into account the influence of the atmosphere but also the geometry of illumination, which is described by the bidirectional reflectance distribution function (BRDF). This paper presents a method of radiometric correction for unmanned aerial vehicle (UAV) NIR images.
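For anyone wondering what "Walthall's model" means in practice: it's an empirical BRDF model where reflectance is a simple polynomial in the view zenith angle θv and the relative azimuth φ between the sun and view directions. Below is a rough Python sketch of the basic form as I understand it, ρ = a·θv² + b·θv·cos φ + c, used to rescale a pixel toward what it would look like viewed straight down. This is my own simplification for illustration, not the paper's modified model or its dark-object step, and the coefficients a, b, c are invented placeholders (in real processing they would be fitted to the imagery).

```python
import math

def walthall_reflectance(view_zenith_rad, rel_azimuth_rad, a, b, c):
    """Basic Walthall-type empirical BRDF: a*thv**2 + b*thv*cos(phi) + c."""
    return (a * view_zenith_rad ** 2
            + b * view_zenith_rad * math.cos(rel_azimuth_rad)
            + c)

def normalize_to_nadir(dn, view_zenith_rad, rel_azimuth_rad, a, b, c):
    """Scale a pixel value toward its nadir-view value (thv = 0),
    where the model reduces to c."""
    return dn * c / walthall_reflectance(view_zenith_rad, rel_azimuth_rad, a, b, c)

# Hypothetical coefficients, for illustration only.
a, b, c = 0.10, 0.05, 0.30
print(normalize_to_nadir(120, 0.0, 0.0, a, b, c))              # unchanged at nadir (120)
print(normalize_to_nadir(120, math.radians(30), 0.0, a, b, c))  # off-nadir pixel scaled down
```

The correction is only as good as the fitted coefficients: a surface that doesn't behave the way the model expects would get scaled by the same factor as everything else at that angle.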

BTW, is this a NIR image? Don't know.

Was this taken from a UAV? Don't know.

I'm just trying to convey the message that processing methods have been evolving.

Now then: could this be an artifact produced by radiometric correction of images due to a specular reflection of the Sun from an object on the ground?

As pointed out previously in this thread, this could not be a specular reflection of the Sun from the surface of ponding water. The angles aren't right for that.
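Why "the angles aren't right": for a flat, horizontal water surface the angle of reflection equals the angle of incidence, so a camera can only see the sun's glint at one spot, on the sun's side of the point directly below it. A minimal Python sketch, assuming flat level water and a known camera height and sun position; the function name and the numbers in the example are made up:

```python
import math

def glint_ground_point(height_m, sun_elevation_deg, sun_azimuth_deg):
    """(east, north) offset in metres from the point below the camera to where
    flat, horizontal water would mirror the sun into the camera.
    Azimuth is measured clockwise from north."""
    if sun_elevation_deg <= 0:
        raise ValueError("sun must be above the horizon")
    # Mirror reflection: the reflected ray leaves the water at the same
    # elevation as the sun, so the glint lies toward the sun, h / tan(e) out.
    distance = height_m / math.tan(math.radians(sun_elevation_deg))
    az = math.radians(sun_azimuth_deg)
    return distance * math.sin(az), distance * math.cos(az)

# Hypothetical: camera 100 m up, sun due east at 45 degrees elevation.
print(glint_ground_point(100, 45, 90))  # roughly (100, 0): 100 m east, toward the sun
```

If the bright spot is nowhere near where this puts the glint for the sun position at the time, a mirror-like reflection off level water doesn't fit. A tilted or irregular surface breaks the "level" assumption, though.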

But it could be a reflection from an irregular object. It doesn't have to be a car or something highly reflective. It might not even be a specular reflection, just a bright diffuse one. Just something producing an unexpected reflectance effect.

Or even, perhaps, a dried water spot on a glass camera shield?

I think all of this is evidence that this is simply a processing artifact that is unfamiliar to most of us, because it was caused by a new processing method.

Or perhaps by a method that was only briefly used in the 2016 timeframe.

Same source as above, from 2018

Simple Dark Pixel Subtraction: the model takes into account the shift of histograms in the specific channels depending on the angle of image acquisition [22]. It is caused by reflected light from outside the field of view of the sensor, which reaches the field of view of the sensor even if the ground reflection coefficient is equal to zero.
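The basic idea behind dark pixel (dark-object) subtraction: the darkest pixels in a band should be close to zero reflectance, so whatever value they actually have is treated as haze or stray light added to every pixel, and it gets subtracted from the whole band. A minimal Python sketch of that simple, angle-independent version, for one band given as a flat list of pixel values. The function and its percentile option are my own illustration, not the paper's method, which also accounts for the acquisition angle:

```python
def dark_object_subtract(band, percentile=0.0):
    """Subtract a per-band 'dark object' value from every pixel, clipping at zero.
    percentile=0.0 uses the band minimum; a small percentile (e.g. 0.01)
    is less sensitive to a few noisy pixels."""
    ordered = sorted(band)
    index = int(percentile * (len(ordered) - 1))
    dark = ordered[index]
    return [max(0, value - dark) for value in band]

# Hypothetical 8-bit values for one band:
print(dark_object_subtract([12, 10, 15, 40]))  # [2, 0, 5, 30]
```

Each band is treated separately, which is why the shift can differ between channels.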

Modified Chavez Method: In some cases when the image content was not homogeneous but it contained urban areas, forests or flat areas, excessive correction was observed in the RED and NIR channels [23]. In such cases, the λ^(−κ) rule was proposed for the atmospherically scattered radiance. The value of the κ coefficient was in the range from 0.5 for haze in the atmosphere to 4.0 for a clear Rayleigh-type atmosphere. Due to the fact that the blue band shift represents the greatest impact of the atmosphere, it may be expected that the values determined for the channel will be the most accurate ones. The determined value of the shift (calibrated) enables the determination of the value of κ. The greater the shift in the DN values of the pixels, the thicker the haze of the atmosphere. Moreover, the κ-coefficient rule depends on the altitude of flight. In the case of images acquired from low altitudes, the useful range of altitudes will usually be within 100 to 300 m. The values of the shift for other channels including NIR.
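The λ^(−κ) rule is a relative scattering model: the scattered (haze) radiance is assumed to be proportional to wavelength raised to the power −κ, so once the haze shift has been measured in the blue band it can be scaled to the other bands. A minimal Python sketch of just that scaling step; the function name, the band-centre wavelengths and the 32 DN blue-band shift are made-up numbers for illustration, and the method in the paper involves more than this:

```python
def predicted_haze(blue_haze_dn, blue_wavelength_um, band_wavelength_um, kappa):
    """Scale the haze shift measured in the blue band to another band,
    assuming scattered radiance proportional to wavelength**(-kappa)."""
    return blue_haze_dn * (band_wavelength_um / blue_wavelength_um) ** (-kappa)

# Hypothetical: 32 DN shift in a 0.48 um blue band, NIR band at 0.96 um.
print(predicted_haze(32, 0.48, 0.96, 4.0))  # clear, Rayleigh-type air: 2.0
print(predicted_haze(32, 0.48, 0.96, 0.5))  # hazy: about 22.6
```

Note how strongly the NIR shift depends on which κ is chosen.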

We can't identify this unique kind of artifact, so it's mysterious.

The typical UFOlogist leap of logic is that if it's mysterious it must be otherworldly, or supernatural, or extradimensional, or whatever you want to say.