A new analysis has been published by 3AF. It also includes brief descriptions of the Chilean and US Navy cases.

https://www.3af.fr/global/gene/link.php?doc_id=4234&fg

The section starts on page 48. The PDF is in French, so I attach an auto-translated version.

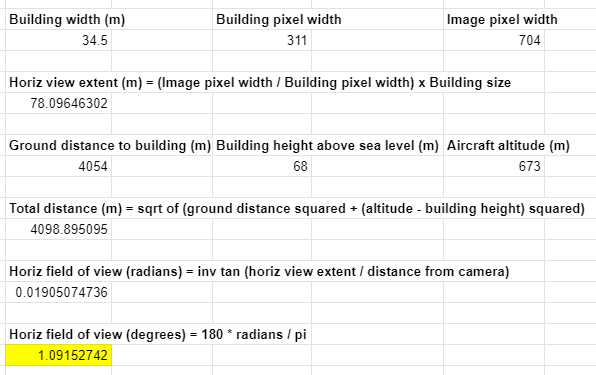

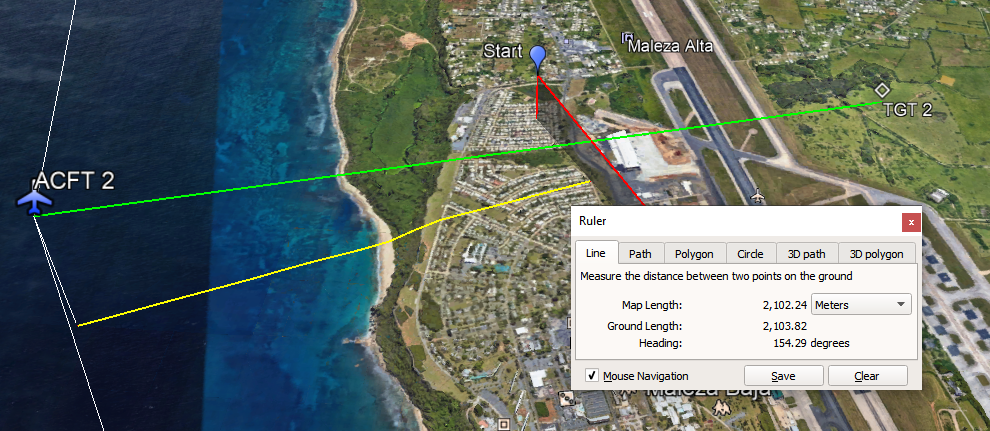

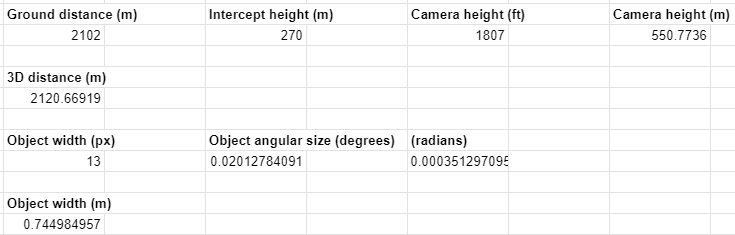

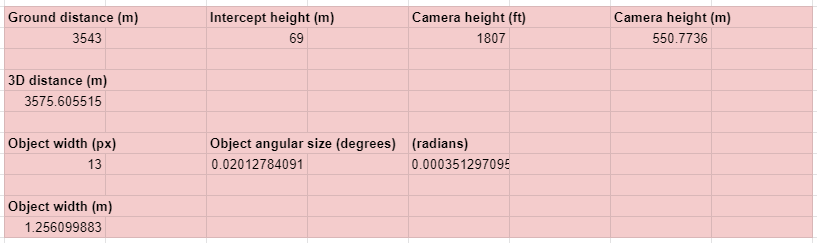

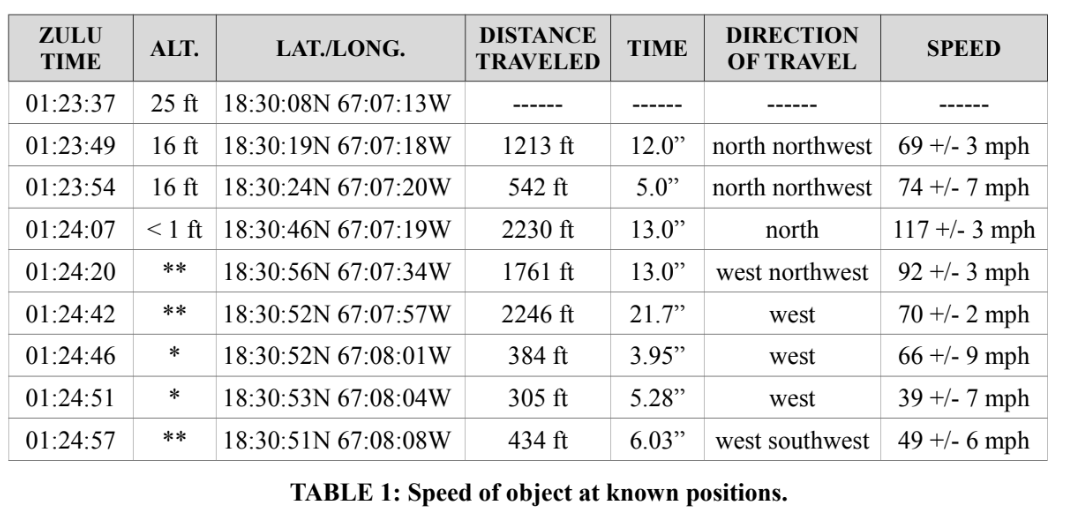

They consider three hypotheses that match the data - an object close to the ground/ocean (the SCU hypothesis), a slow-moving object close to the airport at around 1,000 feet (the mundane hypothesis), and an object following the plane (the "just for completeness" hypothesis). They narrow it down to the first two.

External Quote:

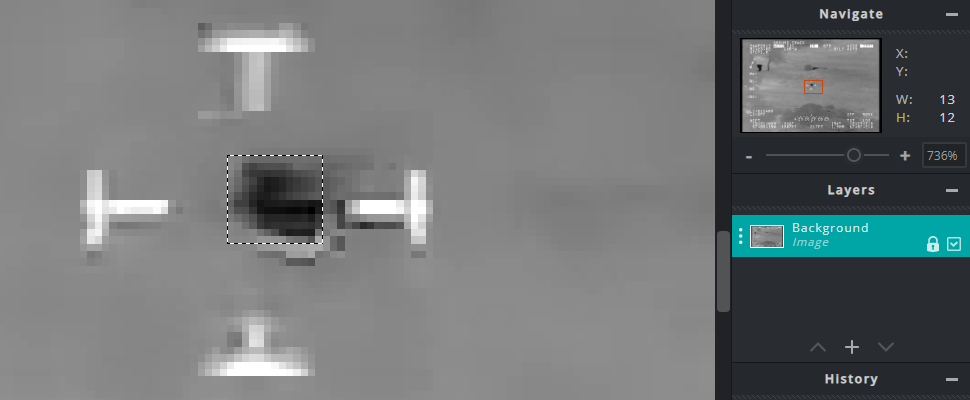

From a kinematic point of view two hypotheses emerge. One corresponds to a local path in the vicinity of the airport in slow descent (2 ft/s) between 1000 and 800 ft, compatible with that of a balloon or a Chinese lantern, or even a micro-drone, drifting at low speed while being carried by the wind. But this hypothesis, which would have the merit of corresponding to a simple scenario and a classical kinematics, is not consistent with radiometric data (hot spot, occultation). The other hypothesis could be 100 ft field monitoring, at least in the second part of the trajectory; which could explain some observed phenomena (hot spot, temporary occultation of the signature in flight grazing over the sea). It could be a micro-drone with extreme high-speed capabilities (nearly 300 km/h) at the beginning of the trajectory, as there are some prototypes. However, the use scenario of such a drone seems very atypical. A flight level change hypothesis with rapid descent could potentially change the initial speed peak but does not resolve issues such as duplication.

There is nothing to confirm a case of extraordinary PAN (Phénomènes Aérospatiaux Non-identifiés, UAP), even if we are faced with indeterminations on the restitution of trajectories and therefore of the type of flying object, or even with questions on certain IR phenomena (occultations, duplication).

Both assumptions have advantages and disadvantages.
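To put the quoted figures on a common scale, here is a rough back-of-the-envelope sketch. It only uses numbers from the quote (2 ft/s descent between 1000 and 800 ft, and "nearly 300 km/h"). The constant descent rate and the function names are my own assumptions for illustration, not something taken from the 3AF report.

```python
# Rough arithmetic on the figures quoted from the 3AF report.
# Assumption (mine, not the report's): the descent rate is constant.

KMH_PER_KNOT = 1.852   # 1 knot = 1.852 km/h (exact by definition)
M_PER_FT = 0.3048      # 1 ft = 0.3048 m (exact by definition)


def descent_time_s(start_ft: float, end_ft: float, rate_ft_s: float) -> float:
    """Seconds needed to descend from start_ft to end_ft at a constant rate."""
    return (start_ft - end_ft) / rate_ft_s


def kmh_to_knots(kmh: float) -> float:
    """Convert km/h to knots."""
    return kmh / KMH_PER_KNOT


def kmh_to_ft_s(kmh: float) -> float:
    """Convert km/h to feet per second."""
    return kmh / 3.6 / M_PER_FT


# Hypothesis 1: slow drift near the airport, 1000 ft down to 800 ft at 2 ft/s.
slow_descent_s = descent_time_s(1000, 800, 2)

# Hypothesis 2: low-level object with an initial speed of nearly 300 km/h.
fast_knots = kmh_to_knots(300)
fast_ft_s = kmh_to_ft_s(300)

print(f"Slow descent takes about {slow_descent_s:.0f} s")
print(f"300 km/h is about {fast_knots:.0f} knots, or {fast_ft_s:.0f} ft/s")
```

Under those assumptions, the first hypothesis needs about 100 seconds to cover the 200 ft of descent, while the second needs an object moving at roughly 162 knots at the start of the track.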