Ann K

Senior Member.

Another point from Szydagis' lengthy discussion supporting the possibility of alien visitors:

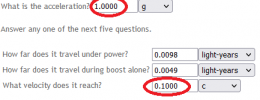

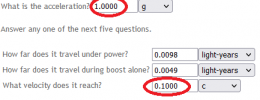

https://uapx-media.medium.com/addre...-criticisms-against-studying-uap-5663335fe8c8

> Consider a craft with 1g of acceleration (9.8 m/s²) as well achievable with our current tech, just not sustainable long term due to fuel requirements. Putting the fuel issue aside, we could do this today without killing the occupant. This 1g acceleration is sufficient to achieve near-light speed in 1 year

The phrase "Putting the fuel issue aside" struck me as hand-waving away a problem that is generally considered insurmountable. But I admit my expertise in physics is insufficient to articulate it clearly, so I'd like to open the question to more knowledgeable members. Is it possible to ignore fuel as a consideration for his postulated near-light-speed travel? He blithely assumes engineering can do it.

I question his sunny optimism.

> The next naysayer argument is about how hard this would be and the fuel it would take. I am sorry, but that is a question of very clever engineering, not of new physics, and engineers are renowned for finding clever loopholes within the “known laws” of physics.
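For anyone who wants rough numbers on the fuel question, here is a minimal sketch based on the standard relativistic rocket equation. It rests on several assumptions that are not in Szydagis' article: the "1 year" is taken as ship (proper) time, the craft starts from rest and never decelerates, and the three exhaust speeds are illustrative values chosen for comparison. The function and variable names are made up for this example.

```python
import math

C = 299_792_458.0          # speed of light, m/s
G = 9.8                    # 1g in m/s^2, as in the quoted passage
YEAR = 365.25 * 24 * 3600  # one Julian year in seconds (assumed ship time)


def rapidity(accel, proper_time):
    """Rapidity gained under constant proper acceleration (dimensionless)."""
    return accel * proper_time / C


def speed_fraction(accel, proper_time):
    """Final speed as a fraction of c, starting from rest."""
    return math.tanh(rapidity(accel, proper_time))


def log10_mass_ratio(accel, proper_time, exhaust_speed):
    """log10 of (initial mass / final mass) from the relativistic rocket equation.

    Returned as a logarithm because the ratio itself overflows a float
    for chemical exhaust speeds.
    """
    return rapidity(accel, proper_time) * (C / exhaust_speed) / math.log(10)


# Illustrative exhaust speeds (assumptions, not taken from the article).
EXHAUSTS = {
    "chemical (~4.5 km/s)": 4.5e3,
    "hypothetical 0.1c drive": 0.1 * C,
    "ideal photon rocket (c)": C,
}

if __name__ == "__main__":
    print(f"speed after 1 ship-year at 1g: {speed_fraction(G, YEAR):.3f} c")
    for name, v_e in EXHAUSTS.items():
        exponent = log10_mass_ratio(G, YEAR, v_e)
        print(f"{name}: initial/final mass ~ 10^{exponent:.1f}")
```

Under these assumptions the craft reaches roughly 0.77c after one ship-year. An ideal photon rocket would need an initial-to-final mass ratio of a little under 3, while chemical exhaust gives a ratio with nearly thirty thousand digits. Slowing down at the destination would square each ratio. The sketch says nothing about how an ideal drive could be built; it only shows how strongly the fuel requirement depends on exhaust speed.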