Article: The Main Astronomical Observatory of NAS of Ukraine conducts an independent study of UAP also. For UAP observations, we used two meteor stations installed in Kyiv and in the Vinarivka village in the south of the Kyiv region. Observations were performed with colour video cameras in the daytime sky. We have developed a special observation technique, for detecting and evaluating UAP characteristics. According to our data, there are two types of UAP, which we conventionally call: (1) Cosmics, and (2) Phantoms. We note that Cosmics are luminous objects, brighter than the background of the sky. We call these ships names of birds (swift, falcon, eagle). Phantoms are dark objects, with contrast from several to about 50 per cent. We present a broad range of UAPs. We see them everywhere. We observe a significant number of objects whose nature is not clear. Flights of single, group and squadrons of the ships were detected, moving at speeds from 3 to 15 degrees per second.

There are a variety of observations. Basically, it seems they pointed cameras at the sky and waited for things to fly across the field of view. They estimated the distances to be quite large, but I'm a bit suspicious, especially since the "phantom" objects look very much like flies.

What do they say about "phantoms"?

Article: Phantom shows the colur [sic] characteristics inherent in an object with zero albedos. It is a completely black body that does not emit and absorbs all the radiation falling on it. We see an object because it shields radiation due to Rayleigh scattering. An object contrast makes it possible to estimate the distance using colorimetric methods. Phantoms are observed in the troposphere at distances up to 10 - 12 km. We estimate their size from 3 to 12 meters and speeds up to 15 km/s.

Phantoms are dark objects, with a contrast, according to our data, from 50% to several per cent.

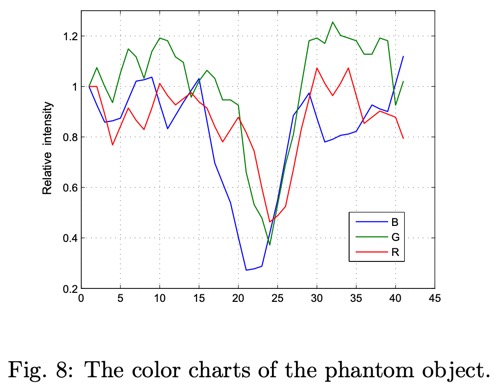

Figures 7 and 8 show the image and color charts of the phantom object. The object is present in only one frame, which allows us to determine its speed of at least 52 degrees per second, taking into account the angular dimensions of the frame.

Fig. 8 shows the color characteristics inherent in an object with zero albedo. This means that the object is a completely black body that does not emit and absorbs all the radiation falling on it. We see an object only because it shields radiation in the atmosphere due to Rayleigh scattering. An object contrast of about 0.4 makes it possible to estimate the distance to the object as about 5 km. The estimate of the angular velocity given above makes it possible to estimate the linear velocity not less than 7.2 km/s.
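The speed figure depends entirely on the assumed distance, so it's worth seeing how the same angular rate converts at different ranges. Below is a minimal sketch using the transverse relation v = ω·d (motion perpendicular to the line of sight). The function name `transverse_speed` and the 1 m and 2 m "insect" ranges are my own assumptions for illustration; only the 52 deg/s and 5 km values come from the quoted passage. The quoted text doesn't give the paper's derivation, so this simple formula shouldn't be expected to reproduce their 7.2 km/s exactly.

```python
import math

def transverse_speed(angular_rate_deg_s: float, distance_m: float) -> float:
    """Speed (m/s) across the line of sight: v = omega * d, with omega in rad/s."""
    return math.radians(angular_rate_deg_s) * distance_m

ANGULAR_RATE = 52.0  # deg/s, lower bound from the quoted passage

# 5000 m is the paper's distance estimate; 1 m and 2 m are hypothetical
# close ranges chosen to represent an insect near the camera.
for distance_m in (1.0, 2.0, 5000.0):
    speed = transverse_speed(ANGULAR_RATE, distance_m)
    print(f"{distance_m:8.1f} m -> {speed:10.2f} m/s")
```

The same angular rate gives several km/s at 5 km but only about 1 to 2 m/s at a metre or two from the lens, so the extraordinary speed stands or falls with the distance estimate.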

The claim that Fig. 7 shows an object with zero albedo seems specious. The image is entirely consistent with a normal dark object like a fly, and the paper offers no comparison with such an object to rule it out.

The spacing of the object in Fig. 13 is also suspect. If it were a high, fast object, its positions in successive frames would be equally spaced. That they are not suggests a curved trajectory, consistent with a fly.
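A rough way to put numbers on the spacing argument is to measure the step length between successive frame positions and the change in heading at each step. This is only a sketch: the pixel coordinates below are made up for illustration (they are not measured from Fig. 13), and the helper names `step_stats` and `spacing_variation` are hypothetical.

```python
import math

def step_stats(points):
    """Return (step lengths, turning angles in degrees) for a track of (x, y) points."""
    pairs = list(zip(points, points[1:]))
    steps = [math.dist(a, b) for a, b in pairs]
    headings = [math.atan2(b[1] - a[1], b[0] - a[0]) for a, b in pairs]
    turns = []
    for h0, h1 in zip(headings, headings[1:]):
        delta = (h1 - h0 + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
        turns.append(math.degrees(delta))
    return steps, turns

def spacing_variation(points):
    """Coefficient of variation of step lengths (0 means perfectly even spacing)."""
    steps, _ = step_stats(points)
    mean = sum(steps) / len(steps)
    variance = sum((s - mean) ** 2 for s in steps) / len(steps)
    return math.sqrt(variance) / mean

# Hypothetical pixel positions in four consecutive frames.
straight_track = [(0, 0), (10, 0), (20, 0), (30, 0)]  # distant object, constant velocity
curved_track = [(0, 0), (10, 0), (16, 4), (19, 10)]   # nearby object, turning and slowing

for name, track in (("straight", straight_track), ("curved", curved_track)):
    _, turns = step_stats(track)
    print(name, round(spacing_variation(track), 3), [round(t, 1) for t in turns])
```

An evenly spaced straight track gives zero variation and zero turning. Uneven steps with a consistent turn direction are what you'd expect from something close to the camera flying a curve, though perspective effects would need to be ruled out on real data.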
