When I finished reading the MH17 report, I was disgusted with the method used to search for the launch position. My main complaint is the “matching”. In my opinion, the work needs to be redone in the right way.

However, to show the essence of the objection I had to do a calculation of my own to find the launch position. I did not strive for accuracy; on the contrary, I used very rough approximations to keep the calculation short. But the results should be qualitatively similar to the true ones (if I have not made a dumb mistake in my calculation, such as a wrong sign).

The calculation is a Visual Studio C++ project, so if even the simplest programming scares you, don't read further. Also, there is no need to tell me that the code is bad; the simplest code is a deliberate choice here.

If anyone wants to do better, the first step is to drop the brute-force minimization. As for accuracy, I don't see much sense in refining some parameters while others are still rough. Making all of it correct is a big job.

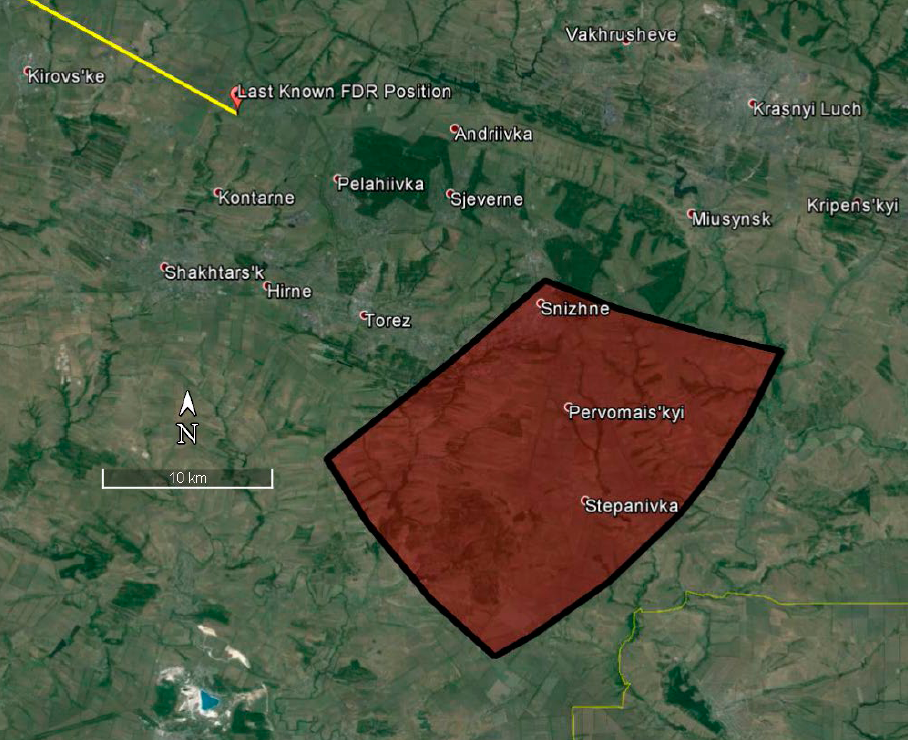

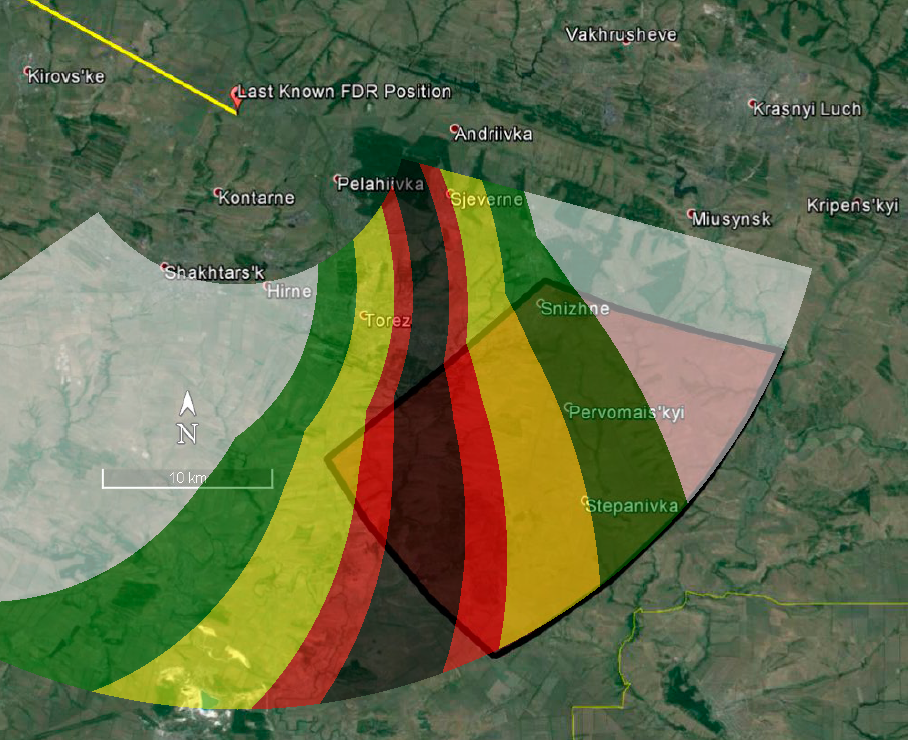

First, the map. I took a screenshot of the launch position picture from the MH17 report.

This is our map, 908 × 740 pixels. I found the last FDR position in Paint as [237; 114].

int X_FDR=237;

int Y_FDR=114;

The scale is 10 km per (272-102) pixels. Forget about the map projection; the ground is treated as a plane.

double x_scale=10./(272-102);

double y_scale=x_scale;

We will find the launch area by trying each pixel to the south of the FDR position.

for(int X_Launch=0; X_Launch<=907; X_Launch++)

{

for(int Y_Launch=Y_FDR+1; Y_Launch<=739; Y_Launch++)

{

}

}

For each pixel, the distance to the FDR point along each axis is:

double dx= x_scale*(X_FDR-X_Launch);

double dy=-y_scale*(Y_FDR-Y_Launch);

The Y term has a “-” because the pixel Y axis runs opposite to latitude.

The straight-line distance is:

double l=sqrt(dx*dx+dy*dy);

The azimuth is (in degrees, as are all other angles below):

double az=asin(dx/l)*180/M_PI;

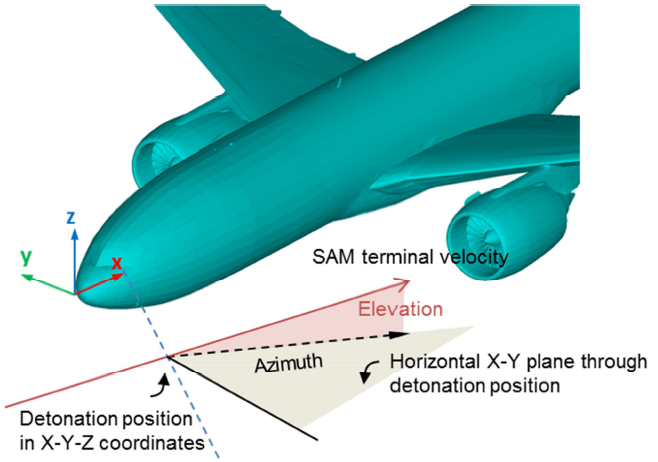

Beta is the angle at which the Boeing's axis is crossed, like the 72-78 degrees from the AA presentation.

double beta_nominal=(az+(180-115));

These conditions limit the candidate area on the map by distance and by angle:

if(l>35||l<20) continue;

if(beta_nominal>50||beta_nominal<0) continue;
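The per-pixel geometry above can be collected into one small function. This is only a minimal sketch in standard C++, not the project's C++/CLI code. The names `LaunchGeometry`, `launch_geometry`, `in_search_area`, `KM_PER_PIXEL` and `kPi` are my own assumptions, and `atan2(dx, dy)` is swapped in for `asin(dx/l)`; the two agree for pixels south of the FDR point, which is the only region scanned.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical constant; the post relies on M_PI from <math.h>.
const double kPi = 3.14159265358979323846;

// Values taken from the post: last FDR position in pixels and the map scale.
const int X_FDR = 237;
const int Y_FDR = 114;
const double KM_PER_PIXEL = 10.0 / (272 - 102);

struct LaunchGeometry {
    double dx;    // km, positive when the pixel is left of the FDR point
    double dy;    // km, positive when the pixel is below (south of) the FDR point
    double l;     // straight-line ground distance, km
    double az;    // azimuth, degrees
    double beta;  // beta_nominal from the post, degrees
};

LaunchGeometry launch_geometry(int x_launch, int y_launch) {
    assert(y_launch > Y_FDR);  // only pixels south of the FDR point are scanned
    LaunchGeometry g;
    g.dx = KM_PER_PIXEL * (X_FDR - x_launch);
    g.dy = -KM_PER_PIXEL * (Y_FDR - y_launch);
    g.l = std::sqrt(g.dx * g.dx + g.dy * g.dy);
    g.az = std::atan2(g.dx, g.dy) * 180.0 / kPi;
    g.beta = g.az + (180 - 115);
    return g;
}

// Same limits as the two "continue" conditions: 20-35 km and 0-50 degrees.
bool in_search_area(const LaunchGeometry& g) {
    return g.l >= 20 && g.l <= 35 && g.beta >= 0 && g.beta <= 50;
}
```

A pixel 340 rows straight below the FDR point comes out at 20 km with beta = 65 degrees, so it is rejected by the angle limit.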

This code blends the pixel color with white, making the selected area whiter:

Color additionalColour;

additionalColour=additionalColour.White;

Color pixelColour=Map->GetPixel(X_Launch, Y_Launch);

pixelColour=pixelColour.FromArgb(JointColours(pixelColour, additionalColour));

Map->SetPixel(X_Launch, Y_Launch, pixelColour);
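`JointColours` itself is not shown in the post. One plausible way to “join” two colors is to average each channel; the sketch below does that on packed 32-bit ARGB values in plain C++. The name `joint_colours_argb` and the 50/50 weighting are assumptions, not the project's actual implementation.

```cpp
#include <cstdint>

// Average two packed ARGB colors channel by channel (assumed 50/50 blend).
std::uint32_t joint_colours_argb(std::uint32_t a, std::uint32_t b) {
    std::uint32_t result = 0;
    for (int shift = 0; shift < 32; shift += 8) {
        std::uint32_t ca = (a >> shift) & 0xFFu;
        std::uint32_t cb = (b >> shift) & 0xFFu;
        result |= ((ca + cb) / 2u) << shift;
    }
    return result;
}
```

With this weighting, opaque black blended with opaque white gives mid grey, and repeated blending with white moves a pixel towards white without fully hiding the map underneath.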

And now, all of it together:

Bitmap^ Map = gcnew Bitmap(pictureBox1->Image);

int X_FDR=237;

int Y_FDR=114;

double x_scale=10./(272-102);

double y_scale=x_scale;

for(int X_Launch=0; X_Launch<=907; X_Launch++)

{

for(int Y_Launch=Y_FDR+1; Y_Launch<=739; Y_Launch++)

{

double dx= x_scale*(X_FDR-X_Launch);

double dy=-y_scale*(Y_FDR-Y_Launch);

double l=sqrt(dx*dx+dy*dy);

double az=asin(dx/l)*180/M_PI;

double beta_nominal=(az+(180-115));

if(l>35||l<20) continue;

if(beta_nominal>50||beta_nominal<0) continue;

Color additionalColour;

additionalColour=additionalColour.White;

Color pixelColour=Map->GetPixel(X_Launch, Y_Launch);

pixelColour=pixelColour.FromArgb(JointColours(pixelColour, additionalColour));

Map->SetPixel(X_Launch, Y_Launch, pixelColour);

}

}

pictureBox1->Image=Map;

Map->Save("MH17_map_test.bmp");

The result is that map:

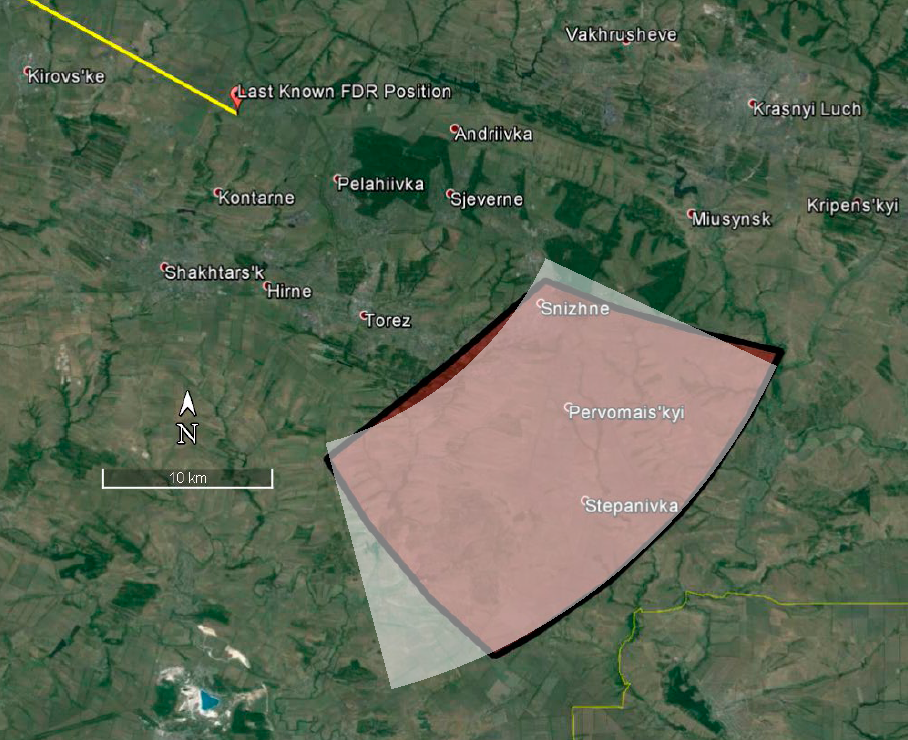

It is look like our selected area 20-35 km and 0-50 degree close to MH17 report launch area.

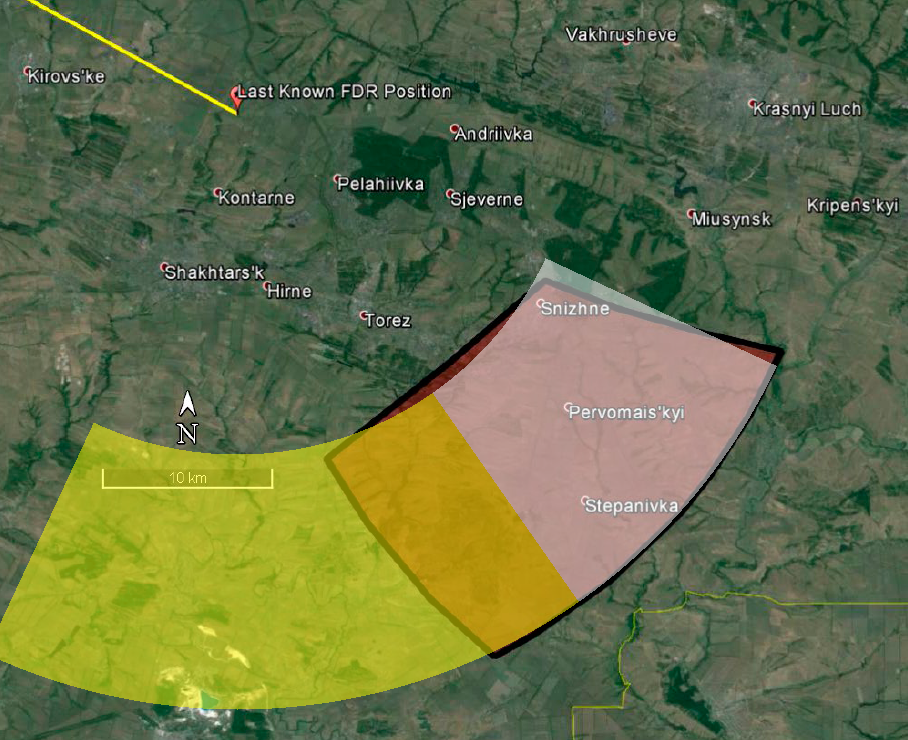

This map has the angle range increased to 90 degrees, and the area with beta > 30 degrees is colored yellow.

The 30-degree beta direction (towards Saur-Mohila) is the border between the DNR forces and the Ukrainian army. Left (yellow) means Ukrainian guilt, right (white) means DNR guilt.

To understand this better, look at the fighting map again.

To close the map topic: we get the right distance and angle relations. But the original map is not planar, so our locations may be offset by up to a few kilometers.

As the next step, we must calculate the interception parameters. That is not a simple job, so I skip it. Ballistics and aerodynamics go the same way as the map projection. A linear approximation is used instead.

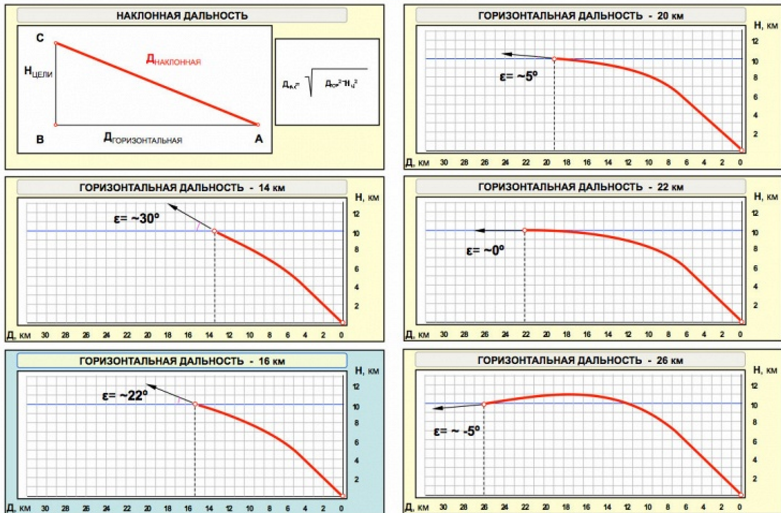

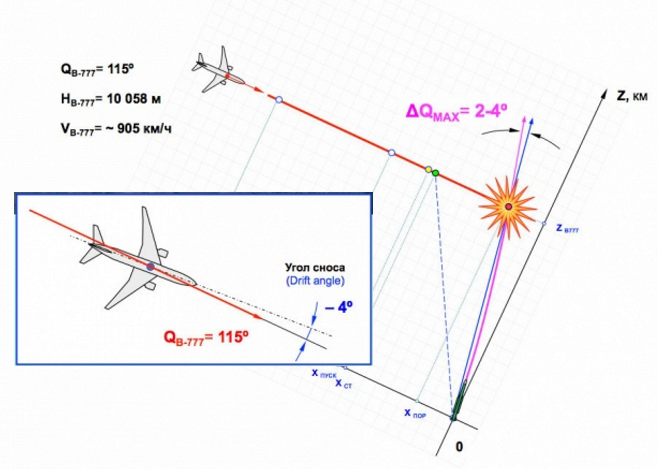

This is a picture from the AA presentation.

This function, based on the picture above, calculates the epsilon angle (elevation) from the distance by linear approximation:

double epsilon(double l)

{

double lp[5]={13, 15, 19, 22, 26};

double ep[5]={30, 22, 5, 0, -5};

int i;

for(i=0; i<=2; i++) if(lp[i+1]>l) break;

return ep[i] + (l-lp[i])*(ep[i+1]-ep[i])/(lp[i+1]-lp[i]);

};
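The same table lookup can be written as a generic piecewise-linear helper. This is a sketch, not the project's code: `interpolate_table` and `epsilon_from_table` are hypothetical names. Outside the table it extends the first or last segment, which is how distances below 13 km and above 26 km get their values.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Piecewise-linear interpolation over sorted knots xs with values ys.
// Outside the table the nearest end segment is extended (extrapolation).
double interpolate_table(const std::vector<double>& xs,
                         const std::vector<double>& ys, double x) {
    assert(xs.size() == ys.size() && xs.size() >= 2);
    std::size_t i = 0;
    // Skip every segment whose right knot is <= x, stopping at the last one.
    while (i + 2 < xs.size() && xs[i + 1] <= x) ++i;
    return ys[i] + (x - xs[i]) * (ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i]);
}

// Elevation angle in degrees for a ground distance l in km, using the
// knots read off the AA picture in the post.
double epsilon_from_table(double l) {
    static const std::vector<double> lp = {13, 15, 19, 22, 26};
    static const std::vector<double> ep = {30, 22, 5, 0, -5};
    return interpolate_table(lp, ep, l);
}
```

For example, `epsilon_from_table(10)` extends the 13-15 km segment backwards and gives 42 degrees, and `epsilon_from_table(35)` extends the 22-26 km segment forwards and gives -16.25 degrees.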

For the velocity it is much worse. I took only 2 points, and I am not even sure about the second one:

double velocity(double epsilon)

{

double vp[2]={730, 600};

double ep[2]={22, 5};

return vp[0] + (epsilon-ep[0])*(vp[1]-vp[0])/(ep[1]-ep[0]);

};
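In closed form, the two points give a straight line with a slope of 130/17 ≈ 7.65 m/s per degree, and the function extends that line well outside the 5 to 22 degree range it was fitted on. The symbol $v(\varepsilon)$ is only my notation for the function above:

```latex
v(\varepsilon) = 730 + (\varepsilon - 22)\,\frac{600 - 730}{5 - 22}
              = 730 + \frac{130}{17}\,(\varepsilon - 22),
\qquad v(42^\circ) \approx 882.9\ \text{m/s},
\quad v(0^\circ) \approx 561.8\ \text{m/s}.
```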

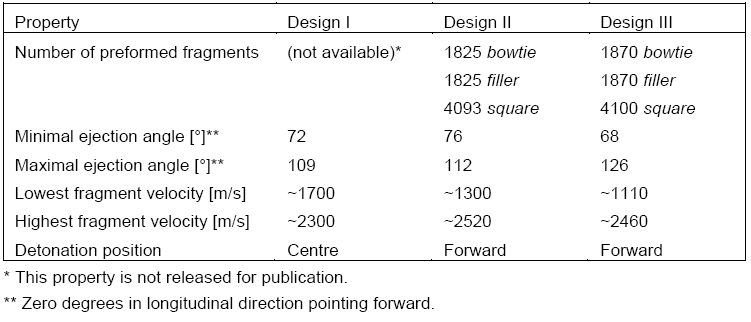

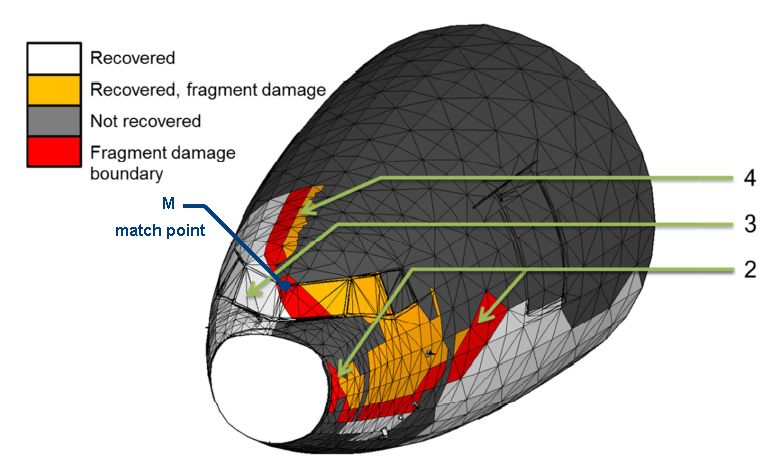

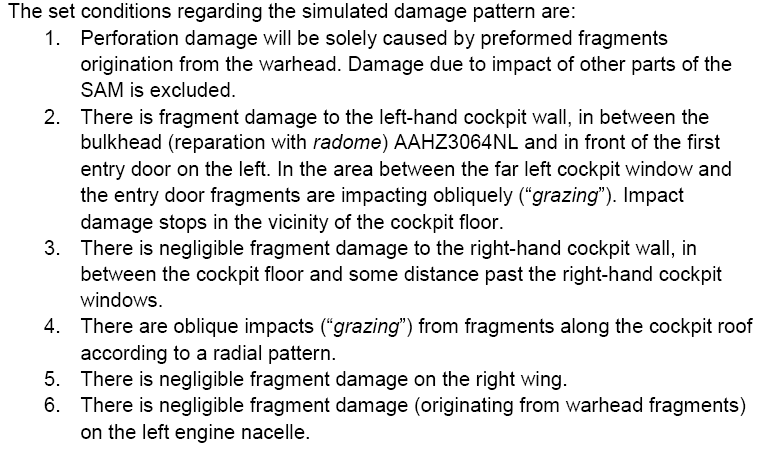

Next we need to find the cone angle of the rear fragment direction. I take warhead II from the TNO report as the basis.

double cone_angle(double velocity)

{

double cone_zero_velocity=112;

double highest_frag_velocity=2520;

double vn=highest_frag_velocity*sin(cone_zero_velocity*M_PI/180);

double vt=highest_frag_velocity*cos(cone_zero_velocity*M_PI/180);

double vt_new=vt+velocity;

return 90-atan(vt_new/vn)*180/M_PI;

};
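Written as a formula, the function adds the missile speed to the axial component of the static fragment velocity and takes the direction of the sum. The notation is mine: $\alpha_0 = 112^\circ$ is the static cone angle, which the code appears to measure from the missile's direction of flight, $v_f = 2520$ m/s is the fragment speed and $v$ is the missile speed. The last line is a check for $v = 730$ m/s:

```latex
\begin{aligned}
v_n &= v_f \sin\alpha_0 = 2520 \sin 112^\circ \approx 2336.5\ \text{m/s},\\
v_t &= v_f \cos\alpha_0 = 2520 \cos 112^\circ \approx -944.0\ \text{m/s},\\
\alpha(v) &= 90^\circ - \arctan\frac{v_t + v}{v_n},\\
\alpha(730) &\approx 90^\circ - \arctan\frac{-214.0}{2336.5} \approx 95.23^\circ .
\end{aligned}
```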

This code prints epsilon, velocity and cone angle.

ofstream f;

f.open("res.txt");

for(double l=10; l<=35; l=l+1)

{

f<<l<<" "

<<epsilon(l)<<" "

<<velocity(epsilon(l))<<" "

<<cone_angle(velocity(epsilon(l)))

<<endl;

}

f.close();

The result is (distance [km], epsilon [degrees], velocity [m/s], cone_angle [degrees]):

10 42 882.941 91.4972

11 38 852.353 92.2464

12 34 821.765 92.9949

13 30 791.176 93.7424

14 26 760.588 94.4886

15 22 730 95.2333

16 17.75 697.5 96.0226

17 13.5 665 96.8096

18 9.25 632.5 97.594

19 5 600 98.3756

20 3.33333 587.255 98.6813

21 1.66667 574.51 98.9864

22 0 561.765 99.2911

23 -1.25 552.206 99.5192

24 -2.5 542.647 99.7471

25 -3.75 533.088 99.9746

26 -5 523.529 100.202

27 -6.25 513.971 100.429

28 -7.5 504.412 100.655

29 -8.75 494.853 100.881

30 -10 485.294 101.107

31 -11.25 475.735 101.333

32 -12.5 466.176 101.558

33 -13.75 456.618 101.783

34 -15 447.059 102.007

35 -16.25 437.5 102.231

So the values vary with distance the way they should, but the values themselves may contain large errors.

These are the values of each of them for the current pixel taken as the launch location.

double epsilon_blast=epsilon(l);

double v_blast=velocity(epsilon_blast);

double cone_blast=cone_angle(v_blast);

Additionally, take the drift and Q corrections for beta (see the AA presentation).

Q should vary with distance and azimuth, but who cares about that in such a rough calculation.

double beta_drift=-4;

double beta_q=2;

double beta_blast=beta_nominal-beta_drift-beta_q;

And now, let's talk about the “matching”. First, I will give my own example.

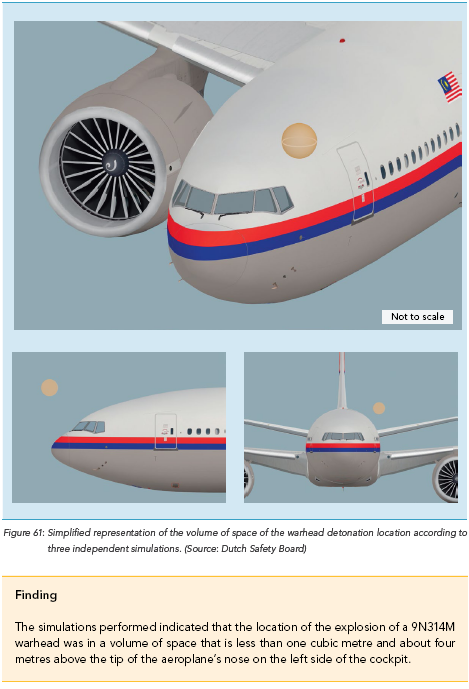

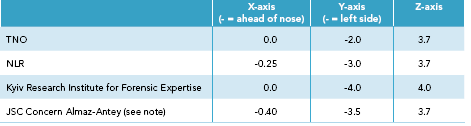

I take the blast coordinates as the basis because I like strings much more than any computer modeling.

And because the MH17 report has a conclusion about it.

If I were in the DSB's shoes, I would not accept results that contradict the string model. I think the three independent simulations are the strings, the microphones and one of the blast computer simulations. And note the “volume less than one cubic metre”.

And as I see it, the blast position from the DSB report looks like the NLR coordinates.

So, the code is:

Point3D blast_point;

blast_point.X=-0.25;

blast_point.Y=-3.0;

blast_point.Z=3.7;

Point3D is my struct, which has an addition operator.

struct Point3D

{

double X, Y, Z;

};

Point3D operator+(Point3D a, Point3D b)

{

Point3D c;

c.X=a.X+b.X;

c.Y=a.Y+b.Y;

c.Z=a.Z+b.Z;

return c;

};

And now, my match condition is that the rear fragment cone coincides with the top of the center post (septum) between the left and right cockpit windows. This is my “match point”.

I selected this point because it looks plausible, and because the top of a window is a good mark when you read coordinates off pixels in a B777 picture. The result is:

Point3D match_point;

match_point.X=1.59;

match_point.Y=0;

match_point.Z=1.56;

The axes are as follows:

And note: the match is the expert's arbitrary choice, and that is a topic for discussion.

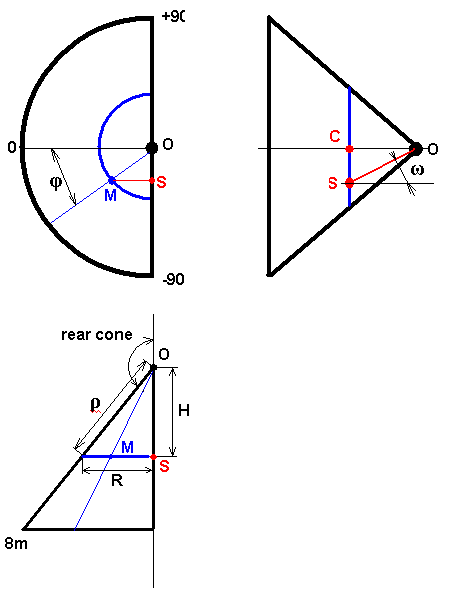

Further, let's assume that the match point lies anywhere on the rear cone, at some distance (rho) from the vertex and at some angle (phi) measured from bottom to top. The range is 0-8 meters for rho and ±90 degrees for phi (the left side of the cone).

These are the loops for the exhaustive search:

for(rho=0.1; rho<=8.0; rho=rho+0.1)

{

for(phi=-90; phi<=90; phi=phi+1)

{

}

}

The step is 0.1 m for rho and 1 degree for phi.

For each pair of rho and phi we must calculate h and r:

double r=rho*sin(cone_blast*M_PI/180);

double h=-rho*cos(cone_blast*M_PI/180);

And now we can calculate the lengths of the segments MS, CS, CO and SO.

double MS=r*cos(phi*M_PI/180);

double CS=r*sin(phi*M_PI/180);

double CO=h;

double SO=sqrt(CO*CO+CS*CS);

From these we find the angle omega.

double omega=-atan(CS/CO)*180/M_PI;

Correcting for epsilon (the elevation of the cone axis), we get theta, the elevation from point S to the vertex of the cone (point O).

double theta=omega+epsilon_blast;

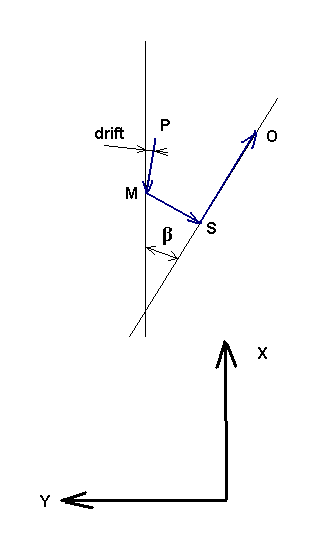

And now on to the vectors. Note: point S lies in the same XY plane as point M, and MS is perpendicular to OS.

Point3D Vector_MS;

Vector_MS.Z=0;

Vector_MS.X=-MS*sin(beta_blast*M_PI/180);

Vector_MS.Y=-MS*cos(beta_blast*M_PI/180);

Point3D Vector_SO;

Vector_SO.Z=SO*sin(theta*M_PI/180);

Vector_SO.X=SO*cos(theta*M_PI/180)*cos(beta_blast*M_PI/180);

Vector_SO.Y=-SO*cos(theta*M_PI/180)*sin(beta_blast*M_PI/180);

The signs must match the vector directions.

Correction for the Boeing's motion (PM): t is the time the fragments need to fly from O to M at 2520 m/s, and 252 is the Boeing's speed in m/s.

double t=rho/2520;

double PM=t*252;

Point3D Vector_PM;

Vector_PM.Z=0;

Vector_PM.X=-PM*cos(beta_drift*M_PI/180);

Vector_PM.Y=PM*sin(beta_drift*M_PI/180);

I am not sure about this: if our blast position is the “strings position”, we don't need to take PM into account.

All the vectors must be added to the match point coordinates, which gives a possible position of the cone vertex for the given phi and rho. This is our warhead point.

Point3D warhead_point;

warhead_point=match_point+Vector_PM+Vector_MS+Vector_SO;

That is nearly all. As the “match function”, let's take the distance from the warhead point to the blast position from the report.

double miss=sqrt((warhead_point.X-blast_point.X)*(warhead_point.X-blast_point.X)

+(warhead_point.Y-blast_point.Y)*(warhead_point.Y-blast_point.Y)

+(warhead_point.Z-blast_point.Z)*(warhead_point.Z-blast_point.Z)

);

For each pixel we must find the minimal value.

if(miss<miss_min) miss_min=miss;
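Putting the steps together, the inner search for one pixel could look like the sketch below. It is written in standard C++ under a few assumptions: the names `rad`, `point_distance`, `warhead_point` and `min_miss` are mine, `miss_min` is assumed to be reset to a large value for every pixel (the post does not show its initialization), and integer loop counters replace the repeated `rho=rho+0.1` so that rounding does not drift. The formulas and signs are copied from the post as they are, including the assumption that the cone angle is above 90 degrees, so that CO is never zero.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

const double kPi = 3.14159265358979323846;  // hypothetical helper constant
double rad(double deg) { return deg * kPi / 180.0; }

struct Point3D { double X, Y, Z; };

Point3D operator+(Point3D a, Point3D b) {
    return {a.X + b.X, a.Y + b.Y, a.Z + b.Z};
}

double point_distance(Point3D a, Point3D b) {
    double dx = a.X - b.X, dy = a.Y - b.Y, dz = a.Z - b.Z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Cone vertex (warhead point) for a match point lying on the rear cone at
// distance rho [m] from the vertex and angle phi [deg]; angles in degrees.
Point3D warhead_point(Point3D match_point, double rho, double phi,
                      double cone_blast, double epsilon_blast,
                      double beta_blast, double beta_drift) {
    assert(rho > 0);
    double r = rho * std::sin(rad(cone_blast));
    double h = -rho * std::cos(rad(cone_blast));
    double MS = r * std::cos(rad(phi));
    double CS = r * std::sin(rad(phi));
    double CO = h;
    double SO = std::sqrt(CO * CO + CS * CS);
    double theta = -std::atan(CS / CO) * 180.0 / kPi + epsilon_blast;

    Point3D vMS = {-MS * std::sin(rad(beta_blast)),
                   -MS * std::cos(rad(beta_blast)), 0.0};
    Point3D vSO = {SO * std::cos(rad(theta)) * std::cos(rad(beta_blast)),
                   -SO * std::cos(rad(theta)) * std::sin(rad(beta_blast)),
                   SO * std::sin(rad(theta))};
    double PM = rho / 2520.0 * 252.0;  // aircraft travel during fragment flight
    Point3D vPM = {-PM * std::cos(rad(beta_drift)),
                   PM * std::sin(rad(beta_drift)), 0.0};
    return match_point + vPM + vMS + vSO;
}

// Smallest miss over the rho/phi grid (0.1..8.0 m, -90..90 degrees).
double min_miss(Point3D match_point, Point3D blast_point, double cone_blast,
                double epsilon_blast, double beta_blast, double beta_drift) {
    double miss_min = std::numeric_limits<double>::max();
    for (int ir = 1; ir <= 80; ++ir) {
        for (int phi = -90; phi <= 90; ++phi) {
            Point3D w = warhead_point(match_point, ir * 0.1, phi, cone_blast,
                                      epsilon_blast, beta_blast, beta_drift);
            double miss = point_distance(w, blast_point);
            if (miss < miss_min) miss_min = miss;
        }
    }
    return miss_min;
}
```

A useful sanity check on the signs: MS and SO are perpendicular and MS² + SO² = rho², so after removing the PM shift the warhead point must lie exactly rho away from the match point.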

The last step is to blend a color into each pixel based on the miss value. As the basis we take r_blast, the radius of a sphere with the volume from the report (1 m³). Black is used for a very good match (< 0.5 r_blast), red for a match, yellow for a miss, green for a big miss and white for a very big miss.

double r_blast= pow((3/(4*M_PI)), 1./3);

Color additionalColour;

if(miss_min<0.5*r_blast) additionalColour=additionalColour.Black;

else if(miss_min<1*r_blast) additionalColour=additionalColour.Red;

else if(miss_min<2*r_blast) additionalColour=additionalColour.Yellow;

else if(miss_min<3*r_blast) additionalColour=additionalColour.Green;

else additionalColour=additionalColour.White;

Color pixelColour=Map->GetPixel(X_Launch, Y_Launch);

pixelColour=pixelColour.FromArgb(JointColours(pixelColour, additionalColour));

Map->SetPixel(X_Launch, Y_Launch, pixelColour);
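The thresholds are easier to check in isolation when the color choice is separated from the bitmap code. A minimal sketch in standard C++; the enum `MatchClass` and the functions `sphere_radius` and `classify_miss` are hypothetical names introduced only for illustration.

```cpp
#include <cassert>
#include <cmath>

enum class MatchClass { Black, Red, Yellow, Green, White };

// Radius [m] of a sphere with the given volume [m^3].
double sphere_radius(double volume) {
    assert(volume > 0);
    const double kPi = 3.14159265358979323846;
    return std::cbrt(3.0 * volume / (4.0 * kPi));
}

// Map the minimal miss distance [m] to the color classes used in the post.
MatchClass classify_miss(double miss_min) {
    const double r_blast = sphere_radius(1.0);  // about 0.62 m
    if (miss_min < 0.5 * r_blast) return MatchClass::Black;
    if (miss_min < 1.0 * r_blast) return MatchClass::Red;
    if (miss_min < 2.0 * r_blast) return MatchClass::Yellow;
    if (miss_min < 3.0 * r_blast) return MatchClass::Green;
    return MatchClass::White;
}
```

With r_blast of about 0.62 m, the class boundaries fall at roughly 0.31, 0.62, 1.24 and 1.86 m.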

This is the final result.

I attach the VS project as a zip file, so you can check it. The full calculation takes about 1-2 hours, so I added a Map_Step parameter; set it to 5 for a fast calculation, but the picture will be coarse.

And now let's talk about the matching done by TNO, in the context of this topic about the search for the launch location.

1. For a discussion of location and guilt to make sense, every matching condition must be expressed as a mathematical function and published (remember the expert arbitrariness).

2. It is a very bad choice to search for matching warhead angles and then use them to search for a matching launch location. The matching function must be projected onto the map directly and presented as contour lines. The warhead angle is an unnecessary intermediate entity for the location search. In a perfect world we would get rid of the blast coordinates too, and get something like the microphones' out-of-sync delay projected onto the map.

3. Such a map must be presented for each match condition separately.

4. We should not rely on accuracy: a good match has no special meaning. In contrast, a bad match that remains bad after optimization really does count against a position.

5. We do not need to search for the location as the best match over all conditions; we must exclude the areas where any one of them is not satisfied.
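Point 5 amounts to intersecting per-condition maps instead of optimizing one combined score. A minimal sketch, assuming each match condition has already been evaluated on the map grid and stored as one flag per pixel; the `Mask` alias and the function `surviving_area` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One flag per map pixel: true if the condition is satisfied there.
using Mask = std::vector<bool>;

// A pixel survives only if every condition is satisfied at that pixel.
Mask surviving_area(const std::vector<Mask>& conditions) {
    assert(!conditions.empty());
    Mask result = conditions.front();
    for (const Mask& m : conditions) {
        assert(m.size() == result.size());
        for (std::size_t i = 0; i < result.size(); ++i) {
            result[i] = result[i] && m[i];
        }
    }
    return result;
}
```

With this approach a very good match on one condition cannot compensate for a failed match on another, which is the point of the exclusion rule.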

Now about the result map: it is a better result than it may look. As a sample, we took only one condition; however, the “grazing” condition must also hold for our well-matching points over a wide angle (thanks to the strings).

Both debated positions look like bad positions. I don't want to draw conclusions from that. My math needs to be tested (insidious signs). However, I still think that we should not expect the simulation to establish DNR guilt unambiguously. In contrast, Ukrainian guilt could be established unambiguously by technical expertise.

So I do not expect a rapid investigation. Going in the direction of Russia is not very promising, and going in the direction of Ukraine is politically unacceptable. Those who believe in impartiality should look at how TNO examined the AA version (I wrote about this earlier in the topic about the AA experiment). It looks like an Italian strike (work-to-rule). And using a fork instead of a lancet raises the question of competence; right or wrong, it is a result that should give pause.



I think that a correct expert examination carries more weight than anything else, even YouTube videos. And versions are already being built on it.

Saur-Mohila is a strategic height (277.9 m).

From its peak, an area with a radius of 30-40 kilometers is visible.

In good weather, the Sea of Azov, 90 km to the south, can be seen from Saur-Mohila.

In July 2014 Saur-Mohila was a stronghold of the pro-Russian rebels, and all of the surrounding area is well exposed to fire from it.

Now tell us how the Armed Forces of Ukraine dragged a Buk to the foot of Saur-Mohila and shot at the Boeing before the eyes of the astonished pro-Russian rebels ) )

Like this:

Ukrainian intelligence detects and records a Buk on the DNR side,

a Ukrainian Buk shoots down MH17,

Ukrainian intelligence posts evidence about the DNR Buk,

PROFIT!

Also, speaking of versions, I like the area between Shahtersk and Amvrosievka, because there are fewer people there than near Torez or Snegnoe. And I like the idea of a long-distance launch, because some witnesses speak of an airplane near MH17. That makes sense if we accept that their “airplane” was a Buk missile with its engine off.

That is all I have to say for now.
