Those bumps in cloud speed seem to be due to the clouds stopping for a few frames when bumps happen just before the step rotations.

Based on some math I've done, the clouds really don't seem to be stopping or suddenly changing speed. There are some known factors that create the appearance of them doing so:

- The image rotates around its center due to some combination of pod roll / derotation / banking, with sudden oscillating rotations near the bumps. This skews the average motion over the clouds.

- The object, along with the entire image, moves off center near the bumps, so if it moves in the opposite direction of the clouds then it appears to decrease the speed.

I tried to formalize these. It's not clear yet if there's a real difference between the motion of the clouds in the back vs the front, but it's probably small enough that we can model the measured motion of the clouds as one linear displacement V followed by a rotation R around the center C of the next image. I'm assuming it's rotating around the center of the image, not around the center of mass of the object, as the rotations are due to the pod and the jet, not the object. So going from a point P1 in the current image to a point P2 in the next image is given by:

P2 = R * (P1 + V - C) + C

This can be rewritten as:

P2 = R * P1 + (R * (V - C) + C) = R * P1 + T

To find R and T I use a function to estimate a partial affine transform that looks like:

P2 = s * R' * P1 + T'

I've seen it suggested that I can just ignore the scale, use R = R', ignore T' and recalculate the translation as T = P2 - R * P1. I'm not entirely sure if that's the best solution, but it seems to work here, particularly since abs(s - 1.0) < 0.03. So we have:
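As a rough illustration of that step, here is a minimal sketch in plain Python. It assumes the estimator returns a 2x3 matrix of the form [[s*cos(a), -s*sin(a), tx], [s*sin(a), s*cos(a), ty]], which is the layout OpenCV's `cv2.estimateAffinePartial2D` uses (whether that is the exact function used here is my assumption). The helper names and the choice to average T over all matched points are hypothetical, not necessarily what was done for the graphs.

```python
import math

def decompose_partial_affine(m):
    """Split a 2x3 partial affine (similarity) matrix into (scale, angle).

    Assumes m = [[s*cos(a), -s*sin(a), tx],
                 [s*sin(a),  s*cos(a), ty]], angle returned in radians.
    """
    a, b = m[0][0], m[1][0]
    scale = math.hypot(a, b)   # s
    angle = math.atan2(b, a)   # rotation angle of R'
    return scale, angle

def rotate(angle, p):
    """Apply the pure rotation R (no scale) to a 2D point."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])

def recompute_translation(angle, pts1, pts2):
    """Recompute T = P2 - R * P1, averaged over the matched points.

    Averaging is an assumption of this sketch; the scale s is ignored.
    """
    n = len(pts1)
    tx = ty = 0.0
    for p1, p2 in zip(pts1, pts2):
        rp = rotate(angle, p1)
        tx += p2[0] - rp[0]
        ty += p2[1] - rp[1]
    return (tx / n, ty / n)
```

Checking that abs(scale - 1.0) stays small before dropping the scale is the same sanity check as the 0.03 bound mentioned above.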

R * (V - C) + C = P2 - R * P1

V = Rinv * (P2 - C) - P1 + C

Finally, if the object's center of mass moves from point O1 to O2 then V', the real motion of the clouds, is:

V' = V - (O2 - O1)
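The equations above can be sketched in a few lines of plain Python. This is only an illustration: the function names are hypothetical, the rotation is passed as an angle in radians, and points are (x, y) tuples in pixels.

```python
import math

def rotate(angle, p):
    """Rotate a 2D vector by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])

def cloud_displacement(angle, p1, p2, center):
    """V = Rinv * (P2 - C) - P1 + C, with Rinv being a rotation by -angle."""
    q = rotate(-angle, (p2[0] - center[0], p2[1] - center[1]))
    return (q[0] - p1[0] + center[0], q[1] - p1[1] + center[1])

def relative_to_object(v, o1, o2):
    """V' = V - (O2 - O1): remove the object's own shift in the image."""
    return (v[0] - (o2[0] - o1[0]), v[1] - (o2[1] - o1[1]))

# Round trip: push a point through P2 = R * (P1 + V - C) + C, then recover V.
C = (213.0, 210.0)            # image center used in this post
angle = math.radians(2.0)     # made-up rotation for the check
V_true = (-5.0, 1.5)          # made-up displacement for the check
P1 = (100.0, 300.0)
d = rotate(angle, (P1[0] + V_true[0] - C[0], P1[1] + V_true[1] - C[1]))
P2 = (d[0] + C[0], d[1] + C[1])
V = cloud_displacement(angle, P1, P2, C)
```

Here `V` comes back equal to `V_true` up to floating point error, which is a quick way to confirm the inversion matches the forward model.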

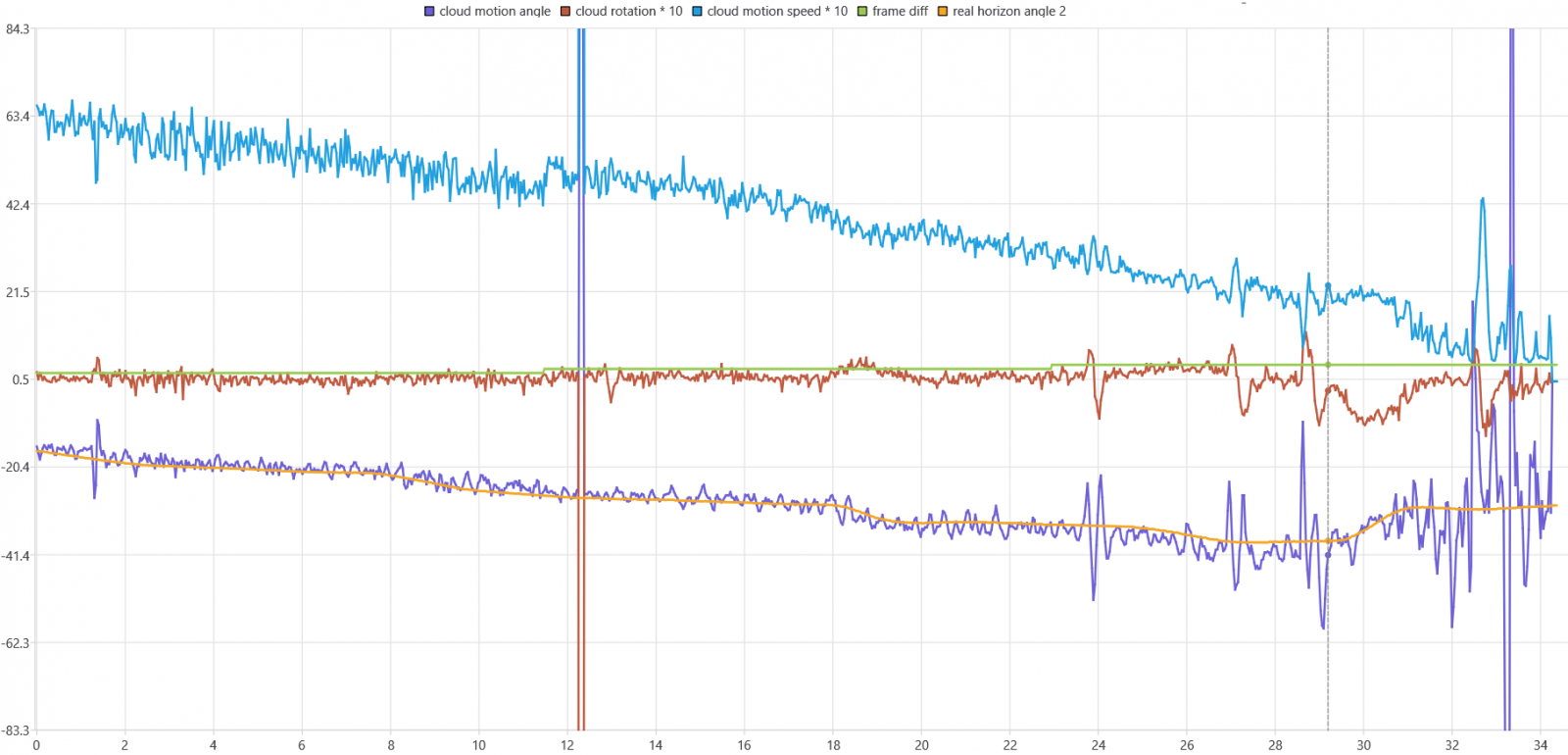

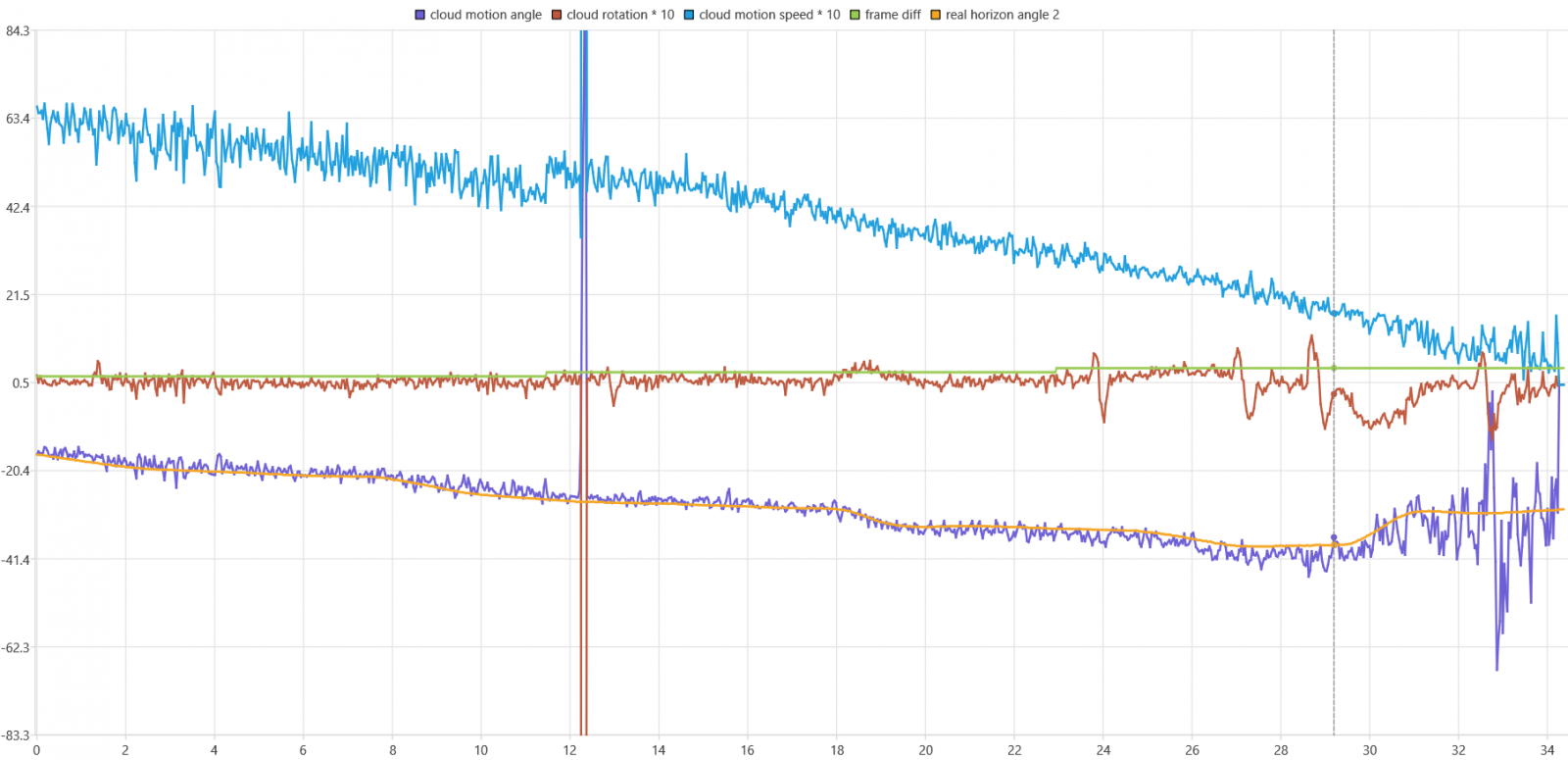

The average angle and speed from just the inliers of the partial affine transform estimation (with 2-4 frame diff) is similar to the results from the homography estimation, but now the rotation of the clouds (in degrees) can also be shown. (data)

When the angle and speed are adjusted with the math from above, the bumps in the speed and angle at 24, 27 and 29 seconds almost entirely disappear. Around 33 seconds the angles are less bumpy than before, but some remaining errors due to interlacing and the object going behind the trackbar are expected there. (data)
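For reference, turning an adjusted displacement into a speed and an angle could look like the sketch below. It assumes the displacement was measured across `frame_diff` frames and that the angle is taken with `atan2` in image coordinates (y pointing down, so positive angles are clockwise on screen); the function name and that angle convention are my assumptions for illustration, not necessarily what the graphs use.

```python
import math

def speed_and_angle(v, frame_diff):
    """Convert a displacement over frame_diff frames to (pixels/frame, degrees)."""
    speed = math.hypot(v[0], v[1]) / frame_diff
    angle_deg = math.degrees(math.atan2(v[1], v[0]))
    return speed, angle_deg

# Example: a 6 pixel shift to the left measured over 3 frames.
speed, angle_deg = speed_and_angle((-6.0, 0.0), 3)
```

Dividing by the frame diff keeps the results comparable between the 2-4, 4-7 and 6-10 frame diff runs.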

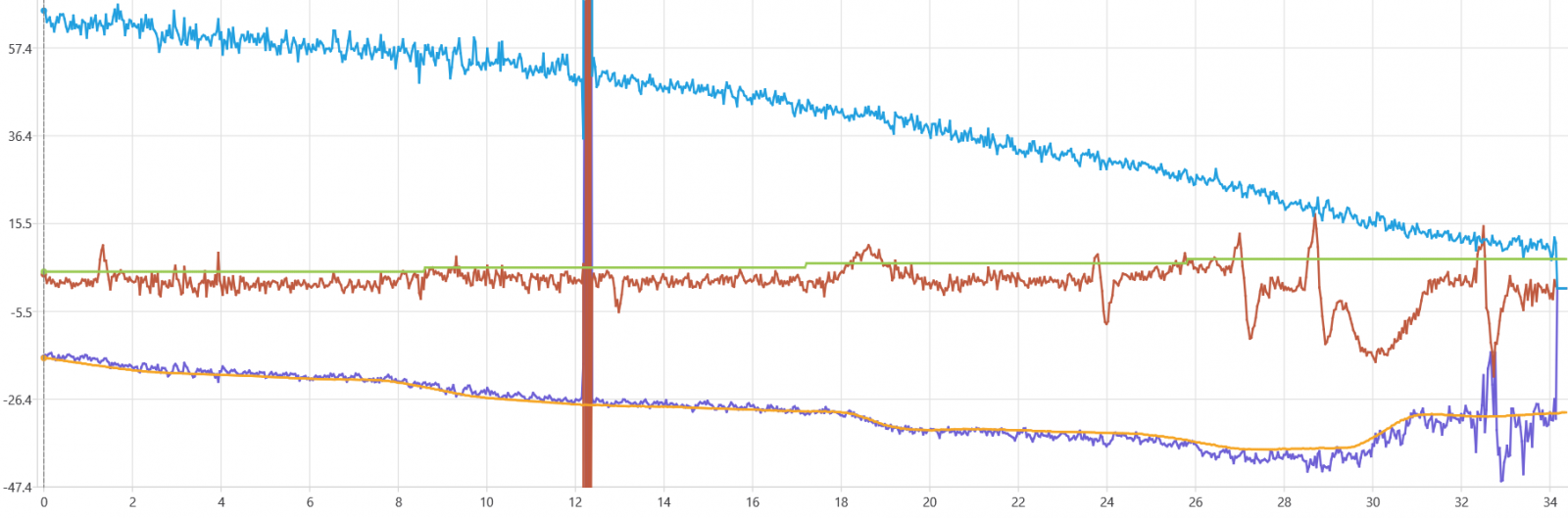

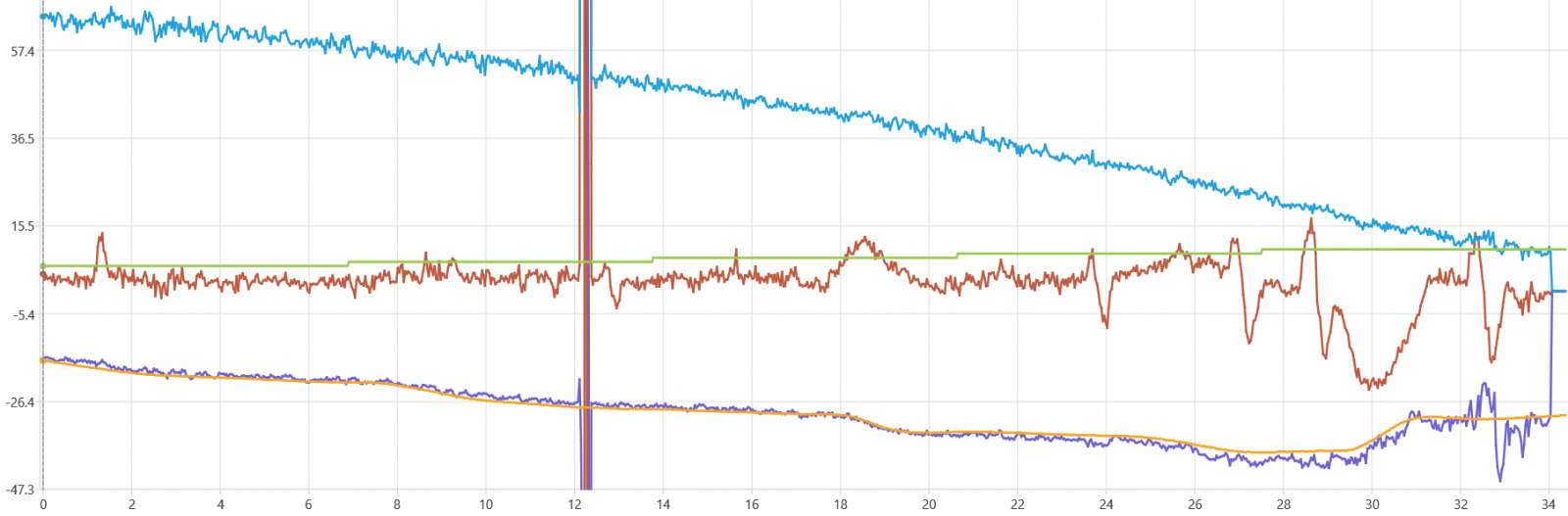

It's not clear what causes the apparent discontinuity when the frame diff changes from 2 to 3 at 11 seconds here, but as before it gets straightened out when a higher frame diff is used. Here are the adjusted results for frame diff 4-7. (data unadjusted, data adjusted) Notice how we get the same general trend for the speed as in the horizon-stabilized versions before, but this time entirely based on the measured rotations, without using the calculated horizon angle.

Here are the adjusted results for frame diff 6-10. (data unadjusted, data adjusted)

Some further work that could refine this:

- There's a question of where the center of the image is exactly. I used (213, 210) as that's what the object generally tends to oscillate around in my graph here (the values shown are (x or y - 210) * 20), although the trend shifts a bit. Maybe that can be refined.

- Although the adjustment effectively does full stabilization already, it could still be better to run the optical flow on the stabilized version of the video as the motion doesn't slow down quite as much there.

- Maybe some algorithm could be used for deinterlacing the video?

- The function for estimating the partial affine transform doesn't have a version that uses MAGSAC in OpenCV, so perhaps a better estimator could be used, particularly one that doesn't try to estimate a scale.

- I haven't quantified this yet but it seems like the whole image might be flickering in brightness. If so, either normalizing that brightness (not just between black hot / white hot) or just using a better optical flow method that is more invariant to illumination changes could perhaps help.

@Mick West What is the y axis for the cloud speed graph in Sitrec? If I convert from pixels/frame, maybe these results could be used to refine the path there?